Big Post About Big Context

How large context LLMs could change the status quo

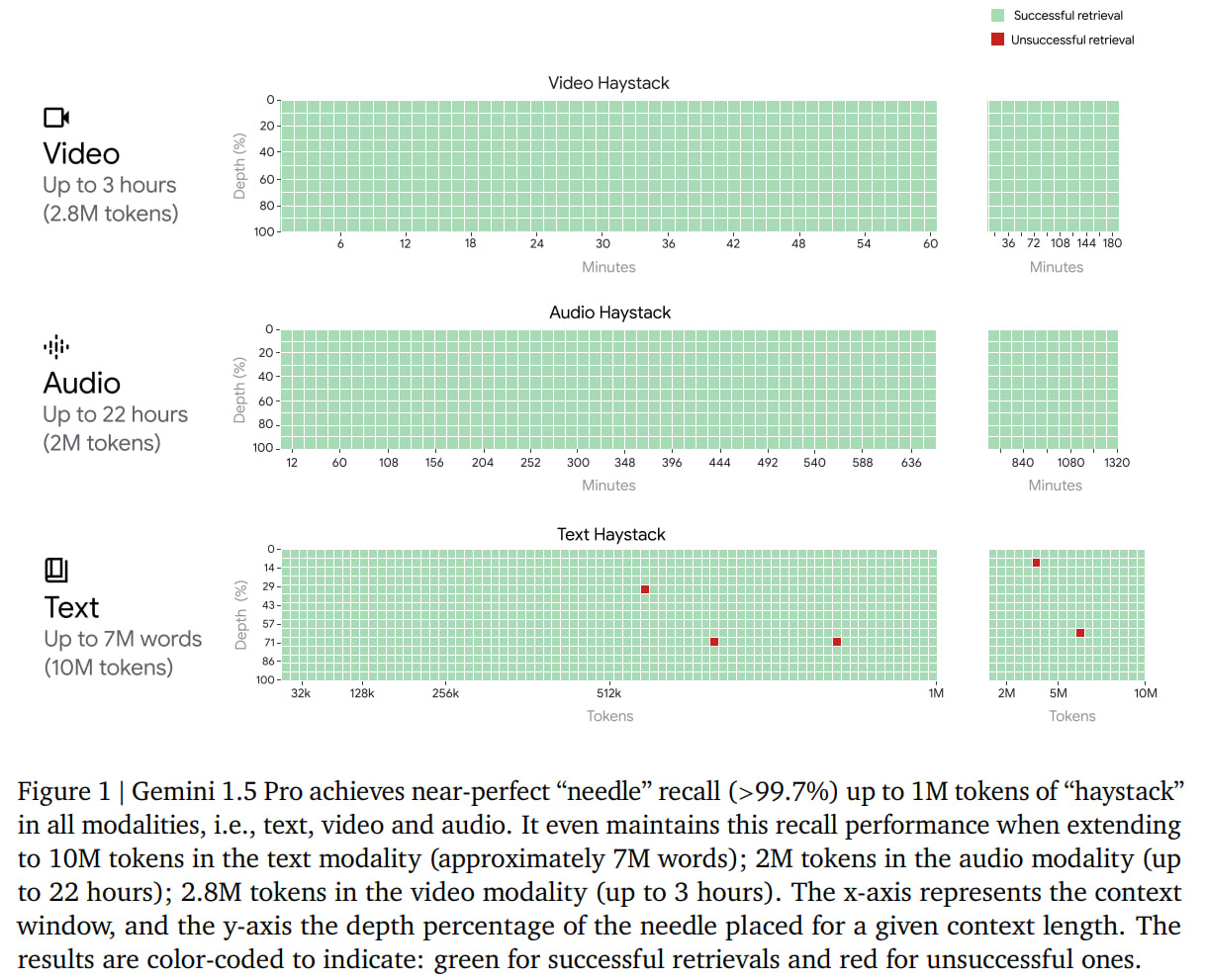

The context size in modern LLMs (that is, the maximum number of tokens they can process at once) is steadily increasing. Initially, moving from two or four thousand tokens to eight thousand seemed like a big achievement. Then came models with up to 32k tokens, but they were limited in availability for a long time. By the time they became widely available, they were already hopelessly outdated because one of the industry leaders, Anthropic, already had models with 100k tokens. Now, the limits of public models range from 128k (GPT-4 Turbo) to 200k (Anthropic). Google was lagging in this race, with its public models covering a maximum of 32k (special versions of PaLM 2 and all versions of Gemini 1.0). A breakthrough appeared with Gemini 1.5, which by default has the now typical 128k, but there's a non-public version with 1M tokens, and a research version with 10M.

An interesting question is how exactly such a large context was achieved, and moreover, how it works efficiently. There are various fresh approaches from different angles, for example, LongRoPE, LongNet with dilated attention, RingAttention, or, say, RMT-R. It's intriguing what exactly Google did.

These new limits will likely significantly change how we work with models. Let's speculate a bit about this near future.

1) First, old techniques like RAG, partly designed to circumvent the limitations of a small context window when working with long documents, should die out. Or at least remain only for special cases, such as the need to pull in fresh or particularly relevant materials.

Tools like langchain's splitters, which mainly cut based on length (considering more suitable cutting points in some cases), were already problematic -- looking at those chopped paragraphs was hard, though somehow it worked.

Even with the ability to properly segment into reasonable pieces, all the different wrappers that match and select more suitable pieces, aggregate results, etc., are needed. Now, potentially, there's no need to bother with this stuff, which is good.

In some cases, of course, it's still necessary and can improve solution quality, but that needs to be evaluated. I generally believe in end-to-end solutions and the eventual displacement of most of these workarounds.

It’s not that I’m against RAG or any other program-controlled LLMs. In fact, I’m a strong proponent of the LLM Programs approach (I wrote on it here and there). My point is that these old-school and dumb RAG approaches are just poor man’s things. They must change.

2) 1M tokens is really a lot; now, you can fit many articles, entire code repositories, or large books into the context. Considering the multimodality and the ability of modern models to also process images, videos, and audio (by converting them into special non-text tokens), you can load hours of video or audio recordings.

Given that models perform well on Needle In A Haystack tests, you can get quite relevant answers when working with such lengths.

It's really possible to find a specific frame in a video:

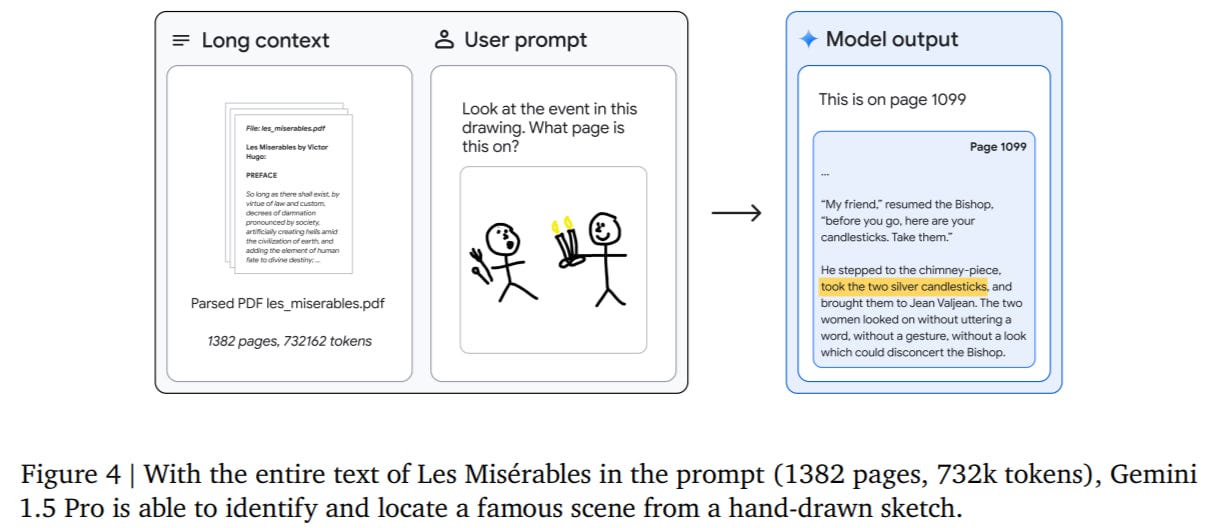

or a moment in a book:

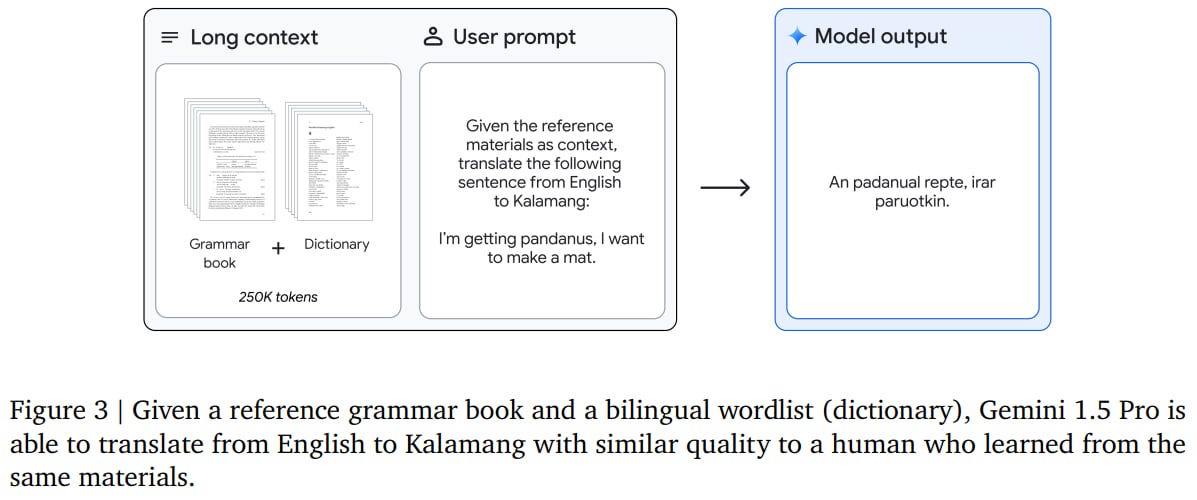

Or solve entirely new classes of problems. For example, cases where models were fed a video of a task solution (like house hunting on Zillow) and asked to generate Selenium code for solving the same task impress me. Or translating to/from the Kalamang language using a large grammar (not parallel sentences!) textbook. Jeff Dean also wrote about this case.

Yes, there's also a dictionary and 400 parallel sentences, but still, in-context language learning is very cool. As are answers to questions about a long document.

Current models like GPT are still purely neural network-based, operating in a stimulus-response mode, without any clear place for System 2-like reasoning. The approaches that exist are mostly quite basic. But right now, various hybrid, including neuro-symbolic, models or models with planning elements are being developed. Hello to the secret Q* or other fresh approaches in these areas. Even in the current mode, in-context learning of a new task from a textbook looks insanely cool (if it works). With full-fledged "System 2-like" capabilities, this could be a game-changer. One of the frontiers lies somewhere here.

3) An interesting question arises regarding the cost of such intelligence. Existing pricing for Gemini 1.0 Pro (0.125$ per 1M symbols) is significantly better than OpenAI's pricing for GPT-4 Turbo (10$/1M tokens), GPT-4 ($30/1M), and the significantly less cool GPT-3.5 Turbo (0.5$/1M). And better than Anthropic's Claude 2.1 ($8/1M). [*] This discussion is about input tokens; output tokens are more expensive, but we usually don't need to generate millions on the output, this is primarily important for tasks with a large input.

If Gemini 1.5 Pro had the same pricing as 1.0, would you be willing to pay ten cents for an answer about a book? Or for generating code to automate a task you recorded on video?

My personal answer to the second question is yes, but to the first -- it depends. If you need to ask dozens of questions, it adds up to a few dollars. For analyzing a legal document or for a one-time book summary, okay, but if you need to do this regularly, it's a question. The economics need to be calculated. Services providing solutions based on such models need to explicitly account for usage to avoid going bankrupt.

4) Regardless of the economics, there must be ways to save and cache results. If you need to ask a bunch of questions about the same set of documents, it's strange to do it from scratch each time. If the prompt structure looks like {large text} + {question}, or {large grammar book} + {sentence to translate}, it would make sense to somehow cache the first part, since it's constant. Technically, within a transformer, these input embeddings calculated by the multi-layer network could be saved somewhere, and for a new question, only calculate for this new addition, saving a lot of resources. But there's no infrastructure for this yet (or I missed it), and even if you deploy the model yourself, you can't do this right away; it requires programming.

I expect something like this to appear both at the API level and infrastructure-wise for caching results of local models. Possibly, some convenient and lightweight integration with a vector database (startup founders, you get the idea).

5) When used correctly, this can significantly increase productivity in many tasks. I personally wouldn't be surprised if some individuals become 10 or 100 times more productive, which is insanely cool. Obviously, this isn't a panacea and won't solve all problems, plus issues with confabulations (a better term than hallucinations). Result verification remains a highly relevant task.

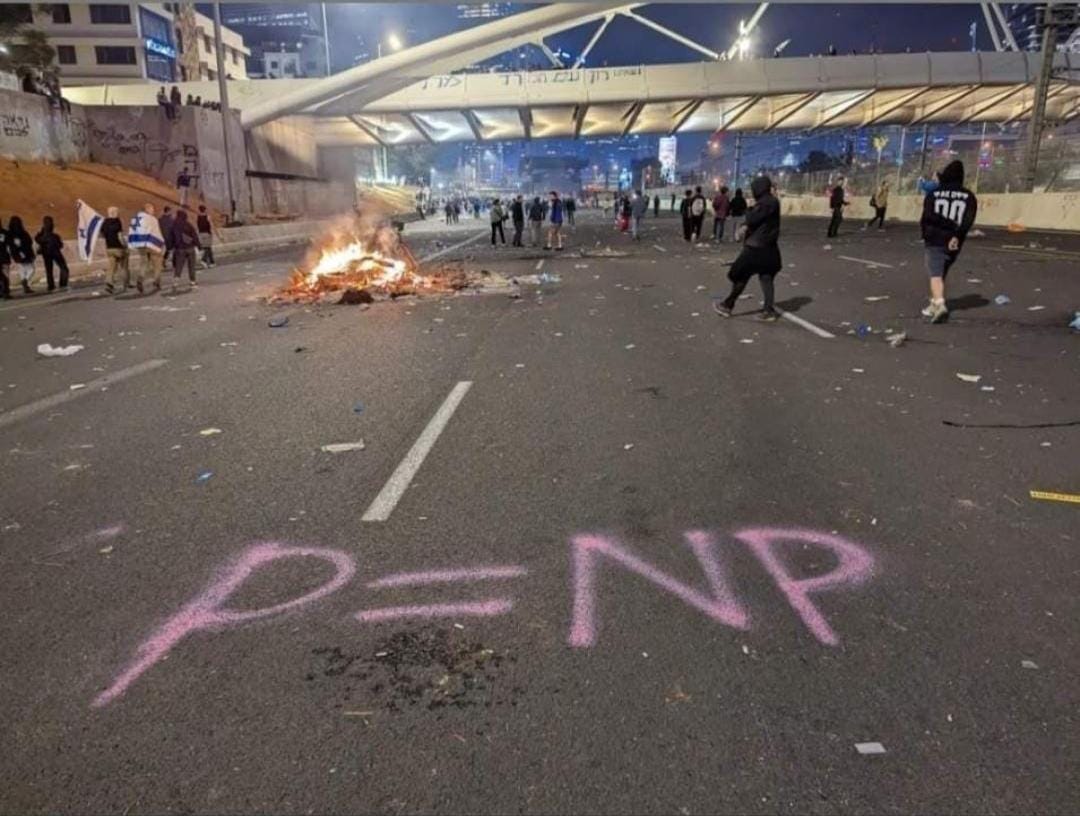

There are likely classes of tasks where verification is much cheaper than solving the task independently (we can jokingly call this class "cognitive NP" tasks), and there are definitely many of them -- writing letters or blog posts clearly falls here. I've long been writing in an English blog through direct translation of the entire text by GPT with subsequent editing, which is significantly faster than writing from scratch myself. I note that errors are comparatively rare, GPT-4 Turbo often produces text that requires no changes at all. Sometimes -- one or two edits. I've never needed to rewrite not just the entire text, but even a single paragraph.

And these are just the surface-level tasks. If we dig deeper, there should be very many. I'm almost certain we'll see Jevons paradox in full force here, with the use of all these models only increasing.

6) A very important and at the same time difficult class of solutions is model output validation. As we can’t always rely on a model, and there is a risk of confabulations (which may sometimes be costly, see the recent case with Air Canada chatbot or an older court case with ChatGPT inventions), we need to lower such risks.

In a similar and narrower field of machine translation (MT) there is a set of solutions called Machine translation quality estimation (MTQE), to estimate risk of bad translations, predict quality scores, detect specific types of errors, etc (the exact objective may vary). They are not perfect, and may not solve your particular task if it differs from the original objective, though they may help. We need something similar for LLMs, say LLMQE.

There will be solutions for which many companies will be willing to pay. There will be many different solutions suited for different cases. But reliably creating such solutions won't be easy. You all get this too.

7) It's really unclear how the work for entry positions (juniors) will change in the near future. And whether there will be any work for them at all. And if not, where the middles and seniors will come from. Not only and not so much in programming, but also in other areas. In content creation, in many tasks, models will surpass them or will be a significantly cheaper and faster alternative. What remains is the technically complex area of content validation -- probably where their activities will shift. But this is not certain. I expect a significant change in the nature of work and the emergence of entirely new tools, which do not yet exist (probably this is already being worked on by the likes of JetBrains).

I don't know how much time OpenAI has until the creation of AGI, when they supposedly need to reconsider their relationship with Microsoft (“Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.“) and generally decide how to properly monetize it. But even without that, they and Google are already acting as sellers of intelligence by the pound. It's unclear what will happen to the world next, but as some countries surged ahead of others during the industrial revolution, the same will happen here, but even faster.

What a time to be alive!

Changelog

02/03/2024 — added some clarifications based on questions and discussions at HackerNews. Mostly in pp. 1 (LLM Programs) and 6 (MTQE).