Large Language Model Programs

A useful conceptualization for a wide set of practices for working with LLMs

Authors: Imanol Schlag, Sainbayar Sukhbaatar, Asli Celikyilmaz, Wen-tau Yih, Jason Weston, Jürgen Schmidhuber, Xian Li

Paper: https://arxiv.org/abs/2305.05364

A fascinating study caught my attention, primarily because of its association with Sainbayar Sukhbaatar, but seeing Jürgen Schmidhuber as a co-author made it even more intriguing.

Solving tasks with LLMs

Language Language Models (LLMs) are steadily permeating our lives and being adopted for various tasks. There are traditionally two primary directions in customizing LLMs, each with its advantages and drawbacks.

First, there's the fine-tuning (or further training) of a pre-trained base model. This process demands significant computational resources, although not as much as initial training. It also requires gathering data in a reasonable quantity (usually thousands and above) and some infrastructure for conducting this training and subsequently hosting the fine-tuned models. However, for complex algorithmic tasks, it's challenging to ensure the model effectively learns the algorithm.

Another beloved approach is in-context learning, a domain cherished by prompt engineers. Here, desired results are achieved by crafting the right prompts with task solution examples and accompanying techniques like Chain-of-Thought (CoT). Challenges in this realm mainly stem from the limited text size you can feed into the model. Current trends are moving towards enlarging context sizes, as seen with recent developments like Unlimiformer and the latest announcement from Anthropic about their Claude version supporting a 100k context. Even GPT-4 has a model that accepts a context size of 32k. There are also subtle challenges like the potential interference between steps in a complex, multi-stage prompt, which may distract the model from focusing on the current essential task.

The LLM Programs framework

The authors introduce an alternative method named LLM Programs, which can be seen as an evolution of in-context learning. Here, the LLM is embedded into a program (in conventional programming languages like Python), and this external code handles state maintenance and guides the model through steps.

The beauty of this approach is that programming languages are ideally suited for this task. If we've already structured a sequence of problem-solving steps (a task typically done in prompt engineering and often in fine-tuning where understanding the problem well enough to generate examples is crucial), defining them through external code might be simpler. As a bonus, the accessible context size increases, and steps don't interfere with each other since the context isn't cluttered with data unrelated to other algorithmic steps. Each step's prompt becomes strictly specific to that phase.

The key to solving tasks via LLM Program is the ability to decompose a problem solution into a sequence of simpler steps. It's clear that this might not be feasible for all tasks. The output of each step needs to be parsed, essential parts of the program state gathered in this high-level code, and new prompts generated for subsequent steps. This approach differs from previous works where the model used external tools like calculators or code interpreters (e.g., Toolformer and LaMDA). In those instances, the LLM was responsible for managing the state, but in the LLM Programs method, the roles are reversed.

What's so great about this approach? First, it requires little or no fine-tuning. Secondly, it allows you to describe a complex and extensive task, which might be challenging to describe through prompts or to train. Along the way, there are more precise specifications for input and output data for various program steps. This makes testing, debugging, and evaluating quality easier. Interpretability is also higher. Moreover, implementing security measures becomes simpler. And there's no interference between steps, making it easier for the LLM to operate and for engineers to work with prompts.

Creating a Question-Answer System using LLM

The article then delves into an example of building a question-answer system based on LLM. There are already countless such systems out there, and many existed before LLMs. How do current solutions address questions, for instance, about a corporate website?

Websites often undergo updates, so a static model isn't viable – it would quickly become outdated and fail to address questions about new products. Continually retraining the model after every website update seems unrealistic, expensive, time-consuming, and tedious. Typically, websites get indexed, and the pages are stored in some database, often vector-based. (On this note, I'd like to recommend my friend Ash’s super-fast library called USearch, which allows you to quickly set up your own vector engine. There's also a post by Ash explaining things deeper). Then, based on user queries, relevant documents are retrieved and sent as context to LLM, which then answers the question with the provided context (assuming everything fits within that context). This is called Retrieval Augmented Generation, or RAG.

In such a paradigm, LLM Program naturally solves the problem. An added bonus is the capability to implement more intricate multi-step logic that wouldn't fit entirely within a context.

The tests were conducted on the StrategyQA dataset, which contains binary classification tasks that require multi-step reasoning. An example question is, "Does sunlight penetrate the deepest part of the Black Sea?". To answer, you'd need to find its maximum depth (2km) and information about sunlight penetrating only up to 1km deep in water, and then make a conclusion. Another example question highlighted in the dataset's paper title is, "Did Aristotle use a laptop?". One could pose a question where the reasoning sequence is explicit, like, "Was Aristotle alive when the laptop was invented?". However, the dataset focuses on questions where the sequence is implicitly assumed. The dataset comprises 2,780 questions, of which only 918 have paragraphs with evidence supporting all reasoning steps. The current study is limited to this subset, otherwise we’re relying on the LLM's prior knowledge from pre-training.

The LLM used was OPT-175B. By default, it isn't adept at following instructions, as it hasn't undergone fine-tuning for instructional or conversational data.

To solve the task of evidence-supported question-answering, it's divided into two stages: data filtering and tree search.

Evidence filtering

In the evidence filtering phase, given a question, we scan through all paragraphs to select the most relevant ones. For example, by using a few-shot prompt, we ask LLM to respond (yes/no) on whether a particular paragraph is relevant to the given question. This was tested on a subset of 300 from StrategyQA, where each question was paired with either a relevant or irrelevant paragraph, in a 50/50 ratio. Models like OPT-175B and text-davinci-002 performed slightly better than a random baseline, achieving up to 56%. A more advanced model, 11B Tk-Instruct, fared slightly better, scoring 61.6%.

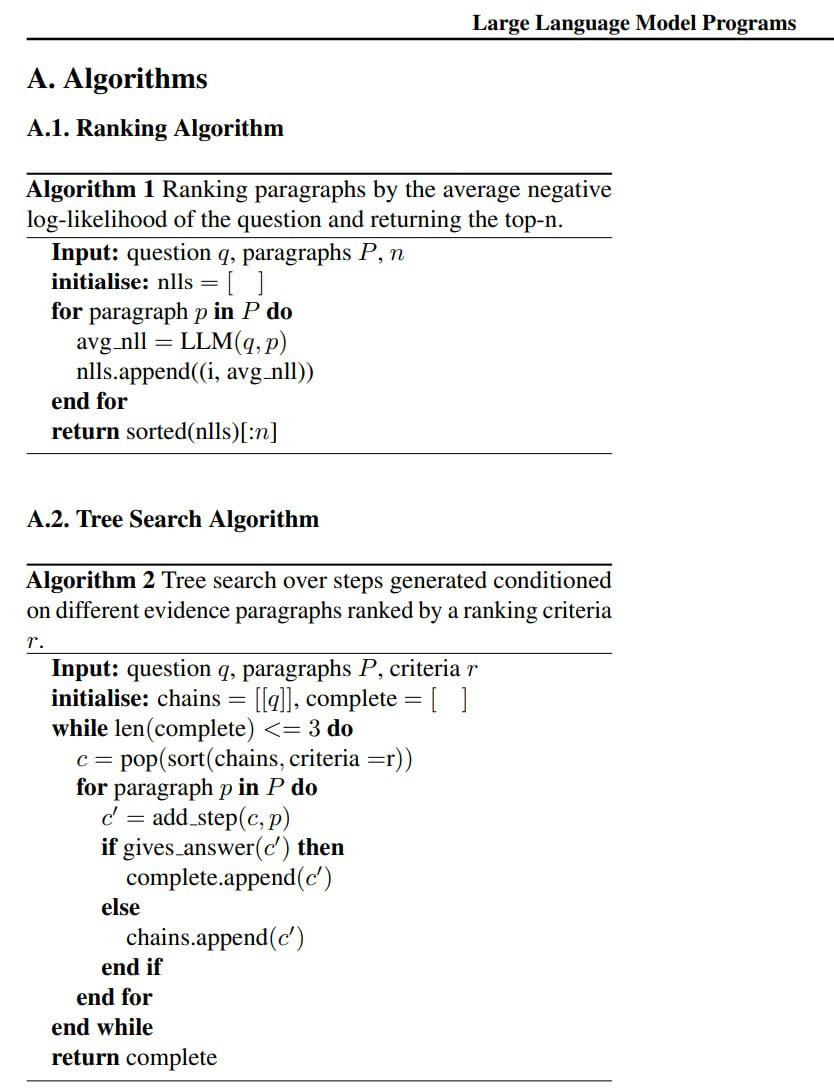

Due to the low effectiveness of this approach, an alternative method was proposed. This method calculates the average negative log-likelihood (NLL) of a question combined with the preceding paragraph and then ranks the results. It was evaluated on a dataset where for every question, there were 100 paragraphs, and only one of them was relevant (meaning random guessing would achieve 1%). This resulted in top-1 accuracy of 79% and top-5 accuracy of 93%. Typically, this is not always exposed in APIs, so direct access to the model is required for such computation.

Tree Search

Next comes the evidence-supported chain of thought. This is carried out through a tree search, where the root is the question, and each level of the tree contains a set of paragraphs with potential evidences. These are used as context for generating the next step. Each tree path represents a (possibly incomplete) reasoning chain. Computing outcomes for all possible chains is unrealistic, so all existing chains are ranked, and the highest-ranked chain is expanded upon. This method is akin to a variation of beam search. The process stops once an answer is produced or after reaching a maximum number of allowed steps.

Two ranking strategies were tested for the tree-based search phase. The first strategy ranks based on the average NLL of the entire chain (where the average NLL for each step is conditioned on its respective paragraph). While it generally works well, it sometimes leads to non-relevant chains. The second strategy examines the average difference in NLL with and without the paragraph (∆P) and with and without the question (∆Q). A combination of these two factors seems to work effectively.

On the available 918 questions from StrategyQA, this approach significantly improved the quality of answers compared to the CoT baseline (~60%). Both search variants resulted in around 66% accuracy (with the delta strategy slightly outperforming). If we input golden facts (i.e., those paragraphs in the dataset marked as containing necessary information), the accuracy reaches around 81%. This seems to be the upper boundary for OPT.

Final thoughts

What an intriguing and, in its own way, very logical approach! It's not new by any means; some works implicitly utilize it, especially when different prompts are used for different steps of an algorithm (like in RLAIF or in Tree-of-Thought approaches). The study lists a significant number of other works that employ a conceptually similar approach. The bibliography in the work is commendable; I discovered many interesting studies from it that I had previously missed.

I wonder when the first programming language with LLM as a first-class citizen will emerge? Darklang seems to be heading in that direction, albeit via a different route. Maybe JetBrains is already working on New Kotlin? 🙂

By the way, I asked ChatGPT, GPT-4, and Bard about possible names for such a language, suggesting that it should reference an existing island's name. Both OpenAI models produced lackluster results. GPT-4 kept trying to come up with names like BabelIsle, LexicoBay, or AINLUArchipelago. I only got a decent suggestion from ChatGPT after some persuasion and prompt tweaking, which was 'Lantau'. Bard, on the other hand, immediately suggested relevant names like Crete, Oahu, or Trinidad. I get the feeling that Bard has improved, while GPT-4 has regressed.