Mindstorms in Natural Language-Based Societies of Mind

Authors: Mingchen Zhuge, Haozhe Liu, Francesco Faccio, Dylan R. Ashley, Róbert Csordás, Anand Gopalakrishnan, Abdullah Hamdi, Hasan Abed Al Kader Hammoud, Vincent Herrmann, Kazuki Irie, Louis Kirsch, Bing Li, Guohao Li, Shuming Liu, Jinjie Mai, Piotr Piękos, Aditya Ramesh, Imanol Schlag, Weimin Shi, Aleksandar Stanić, Wenyi Wang, Yuhui Wang, Mengmeng Xu, Deng-Ping Fan, Bernard Ghanem, Jürgen Schmidhuber

Paper: https://arxiv.org/abs/2305.17066

Today's Sunday long-read is for those who like it a bit meatier! And for fans of Schmidhuber.

Societies of Mind (SOMs)

The work builds on Marvin Minsky's "society of mind" (SOM, not to be confused with self-organizing maps), a model of the mind as a collection of many interacting agents (see the video).

This work is also reminiscent of another fascinating recent paper, on generative agents, which I truly admire:

Generative Agents: Interactive Simulacra of Human Behavior

Authors: Joon Sung Park, Joseph C. O'Brien, Carrie J. Cai, Meredith Ringel Morris, Percy Liang, Michael S. Bernstein

Paper: https://arxiv.org/abs/2304.03442

Code: https://github.com/joonspk-research/generative_agents

A very interesting paper from Stanford and Google.

In the world of neural networks, many systems can be interpreted as SOMs (Schmidhuber even views RNNs as SOMs), because many networks are built from other networks; a GAN, for instance, pits a generator network against a discriminator network. However, these so-called "old SOMs" have rigid, fixed interfaces tailored to specific tasks. Over the past decade, interfaces have become more flexible: Schmidhuber's work on recurrent world models and "learning to think" (source) and on the ONE BIG NET (source) describes networks that query other networks through vector interfaces. This evolution leads to multimodal neural societies made up of many networks that interview one another.

Enter Natural-language SOMs (NLSOMs)

To solve problems, SOM modules can communicate among themselves and engage in what the authors call a "mindstorm": a process involving several rounds of communication. The authors were inspired by the success of similar forms of communication in human societies, such as brainstorming. With the rise of Large Language Models (LLMs), it is now possible to assemble SOMs whose modules are pretrained LLMs connected by a symbolic interface, so the modules communicate in natural language instead of exchanging tensors. These are called natural-language SOMs (NLSOMs).

The language interface has distinct advantages:

Modularity and scalability: Modules can be replaced or added without changing the interface between them.

Explainability: Humans can better understand what the SOM is "thinking". Moreover, people can be incorporated into NLSOMs (and with technologies like Neuralink, probably any SOM).

Human-biased AI: Because language carries human biases, NLSOMs are likely to lean toward more human-like reasoning.

The research opens a wide field for the future: identifying which community structures are more effective for specific tasks. When is a neural monarchy with an NN King more apt, and when is a neural democracy preferable? How can agents form groups with shared expertise and interests? How can neural economies, where networks pay each other for services, be applied in RL with NLSOMs? We eagerly await further work on these topics.

An NLSOM is defined by a set of agents (each with its own objective) and an organizational structure that determines how these agents interact and collaborate. The agents can perceive, process, and transmit both unimodal and multimodal information, handling different types of data such as text, sound, or images. Some agents can even be physically embodied and act in the real world. This can be seen as an evolution of the LLM Programs paradigm, marking its next stage: LLM Programs 2.0, or multi-agent LLM Programs.
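To make the definition concrete, here is a minimal Python sketch, assuming an NLSOM can be modeled as a dictionary of agents plus a "who may talk to whom" graph. The class names `Agent` and `NLSOM` and the `respond` callables are hypothetical stand-ins for real model calls; in this toy, a monarchy versus a democracy is simply a different choice of edges.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for an LLM/VLM call: message in, reply out.
Handler = Callable[[str], str]


@dataclass
class Agent:
    name: str
    objective: str          # each agent pursues its own objective
    modalities: List[str]   # e.g. ["text"] or ["text", "image"]
    respond: Handler


@dataclass
class NLSOM:
    agents: Dict[str, Agent] = field(default_factory=dict)
    # Organizational structure: for each agent, whom it may address.
    talks_to: Dict[str, List[str]] = field(default_factory=dict)

    def add(self, agent: Agent, talks_to: List[str]) -> None:
        self.agents[agent.name] = agent
        self.talks_to[agent.name] = list(talks_to)

    def send(self, sender: str, receiver: str, message: str) -> str:
        # Messages are plain natural language; the structure decides who may talk.
        if receiver not in self.talks_to.get(sender, []):
            raise PermissionError(f"{sender} may not address {receiver}")
        return self.agents[receiver].respond(message)


# Usage: a two-agent society where only the leader may query the VLM.
som = NLSOM()
som.add(Agent("leader", "answer the user", ["text"], lambda m: "ok"), talks_to=["vlm"])
som.add(Agent("vlm", "describe images", ["text", "image"], lambda m: f"seen: {m}"), talks_to=[])
print(som.send("leader", "vlm", "What color is the car?"))  # seen: What color is the car?
```

Keeping the structure as explicit data means the same agents can be rewired into a different organization without touching their prompts, which mirrors the modularity argument above.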

Experiments

The NLSOM framework has been applied to various tasks:

1) Visual Question Answering: Given an image, the system answers a set of textual questions about it (multiple choice).

The NLSOM consists of five agents (pretrained networks). Two of them, the organizer and the leader, are based on text-davinci-003. The other three are vision-language models (VLMs): BLIP2, OFA, and mPLUG.

The organizer receives a question and generates a sub-question. All VLMs respond to it and send their answers back to the organizer. Based on these, the organizer generates the next sub-question. After a predefined number of rounds, the leader asks the organizer to summarize the conversation. Finally, the leader reads the summary and provides an answer to the original question. This hierarchical structure can be seen as monarchical. They also tried a democratic setup where agents could see other agents' answers and vote on them.
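The monarchical protocol above can be sketched as a plain loop. This is a minimal sketch, not the authors' code: the organizer, VLM, and leader functions are hypothetical stubs standing in for text-davinci-003 and BLIP2/OFA/mPLUG calls, and the toy leader simply picks the most frequent answer instead of reading the summary with an LLM.

```python
from typing import Callable, List, Tuple

Transcript = List[Tuple[str, List[str]]]  # (sub-question, answers from each VLM)


def monarchical_mindstorm(
    question: str,
    organizer_ask: Callable[[str, Transcript], str],
    organizer_summarize: Callable[[Transcript], str],
    vlms: List[Callable[[str], str]],
    leader_answer: Callable[[str, str], str],
    rounds: int = 3,
) -> str:
    transcript: Transcript = []
    for _ in range(rounds):
        # The organizer asks the next sub-question given the dialogue so far.
        sub_q = organizer_ask(question, transcript)
        # Every VLM answers the same sub-question independently.
        answers = [vlm(sub_q) for vlm in vlms]
        transcript.append((sub_q, answers))
    # The leader asks the organizer for a summary, then answers the
    # original question from that summary.
    summary = organizer_summarize(transcript)
    return leader_answer(question, summary)


# Toy stubs (hypothetical), just to exercise the control flow.
def ask(q: str, t: Transcript) -> str:
    return f"sub-question {len(t) + 1} about: {q}"


def summarize(t: Transcript) -> str:
    return " | ".join(a for _, answers in t for a in answers)


def leader(q: str, summary: str) -> str:
    answers = summary.split(" | ")
    return max(set(answers), key=answers.count)  # most frequent VLM answer


vlms = [lambda s: "red", lambda s: "red", lambda s: "blue"]
print(monarchical_mindstorm("What color is the bus?", ask, summarize, vlms, leader))  # red
```

The democratic variant would change only the last step: instead of a single leader reading a summary, each agent would see the others' answers and cast a vote.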

The monarchical NLSOM outperformed the individual models on the A-OKVQA benchmark, and it also beat its democratic counterpart. Increasing the number of VLMs from 1 to 3 consistently improved performance, though this might partly reflect how well the individual VLMs understand language.

2) Image Captioning: Generate descriptions for images, especially complex ones requiring detailed explanations.

Using the same setup (2 LLMs + 3 VLMs), they switched from VQA prompts to captioning prompts. They evaluated performance on the TARA dataset, and after ten rounds of mindstorm, the NLSOM outperformed BLIP2.

3) Prompt Generation for Text-to-Image Synthesis: Improve a human-written prompt for DALL-E 2.

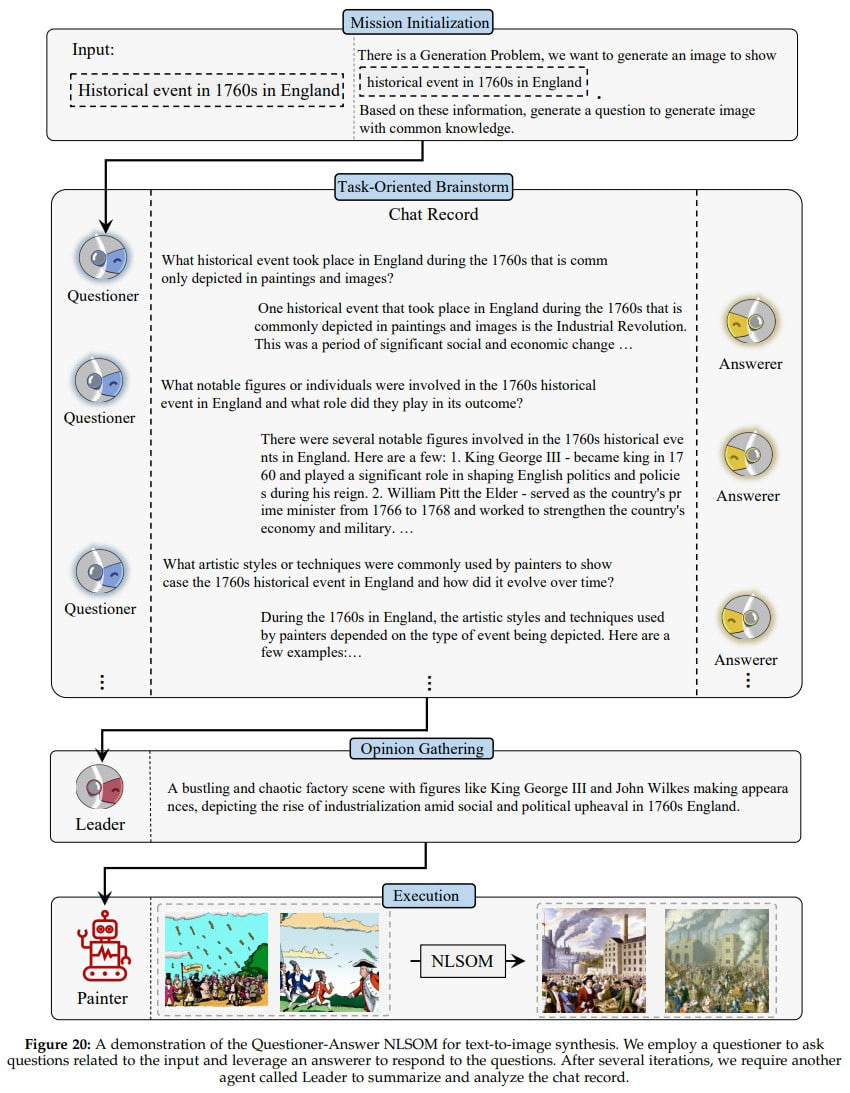

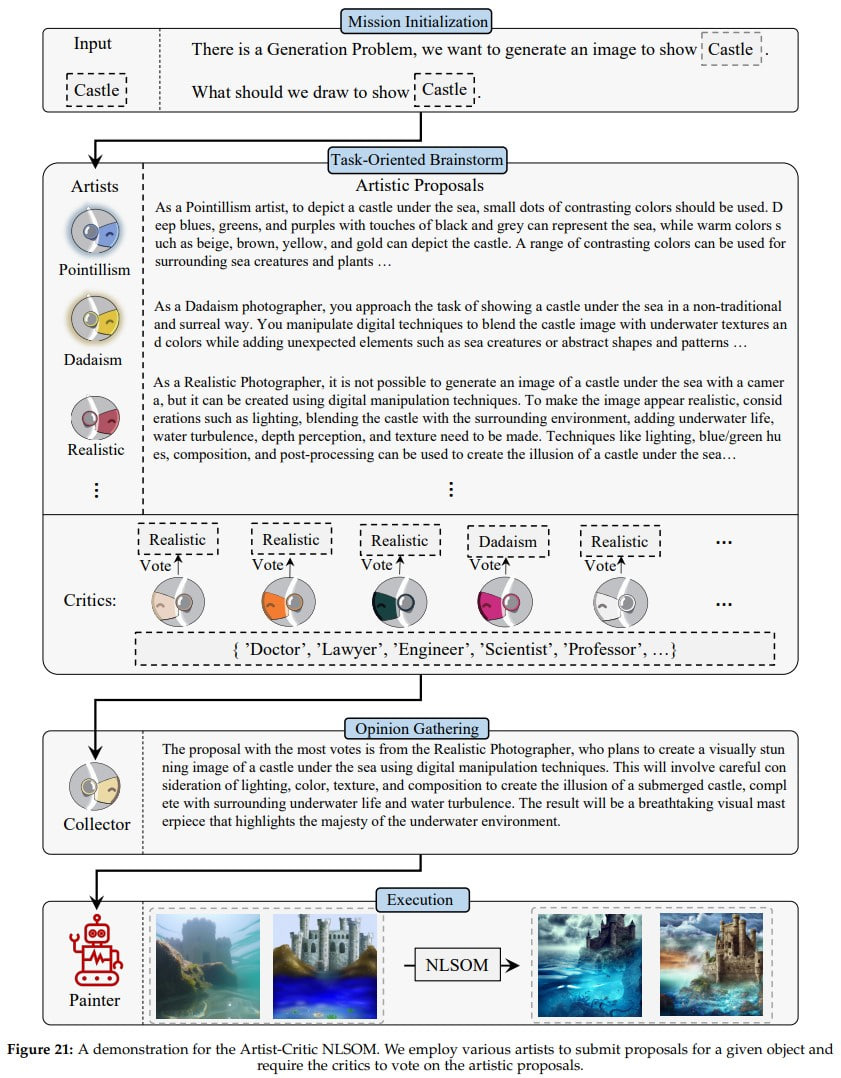

Here, the configuration involves 26 artists, 50 critics, and 1 collector. Each artist comprises 3 LLMs: a questioner, an answerer, and a leader. All these models are based on GPT3.5-turbo. Additionally, there is a painter model (DALL-E 2). Each answerer is fine-tuned for one specific drawing style (out of 26). After several rounds of mindstorm, the questioner receives refined prompts for drawing. Each leader collects this communication and generates a more detailed prompt in its specific style. These prompts are then evaluated by the critics, each of whom assumes a unique profession (e.g., "You are a lawyer").

The critics vote on the prompts, and the collector aggregates all votes to determine the winning prompt, which is sent to the painter. The result is a hierarchical NLSOM: a lower-level questioner-answerer NLSOM inside each artist and an upper-level artist-critic NLSOM. In total, it uses 128 LLMs + 1 vision expert.
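A small sketch of the top level of this hierarchy, assuming each critic casts exactly one vote for one artist's prompt and the collector takes a plurality. The head count check shows how the total of 128 LLMs comes out if, as I assume here, the collector is a simple vote counter rather than an LLM; the tie-breaking rule is also my assumption, since the text does not specify one.

```python
from collections import Counter
from typing import Dict

# Reconciling the head count in the text (assumption: the collector is not an LLM).
N_ARTISTS, LLMS_PER_ARTIST, N_CRITICS = 26, 3, 50
assert N_ARTISTS * LLMS_PER_ARTIST + N_CRITICS == 128


def collect(votes: Dict[str, str]) -> str:
    """votes maps critic -> artist whose prompt that critic prefers."""
    tally = Counter(votes.values())
    best = max(tally.values())
    # Tie-breaking is an assumption: the alphabetically first artist wins.
    return min(artist for artist, n in tally.items() if n == best)


votes = {"lawyer": "cubism", "chef": "ukiyo-e", "pilot": "cubism"}
print(collect(votes))  # cubism
```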

The results are impressive. A couple of demonstrations are below:

4) 3D Generation: Generate a 3D model from a textual description.

The NLSOM includes a 3D designer (Luma AI’s Imagine3D), an LLM leader (GPT3.5-turbo), and three critics (BLIP2). The designer creates an initial version of the model based on a prompt, critics view 2D renders of the model and provide feedback, and the leader then modifies the prompt based on this feedback.

They evaluate the system on a set of prompts, measuring the average CLIP score across several views of the resulting 3D model. Scores barely change after two rounds of interaction, but even this basic mindstorm shows promising results.
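The evaluation metric is simple to state: embed the prompt and each rendered view, take the cosine similarity for every view, and average over views. The sketch below assumes the embeddings (normally produced by CLIP's text and image encoders) are already computed and uses plain cosine similarity; some papers rescale CLIP scores (e.g., multiplying by 100), and the text does not say which variant was used.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_view_clip_score(text_emb: Sequence[float],
                         view_embs: Sequence[Sequence[float]]) -> float:
    # Score each rendered 2D view against the prompt, then average over views.
    scores = [cosine(text_emb, view) for view in view_embs]
    return sum(scores) / len(scores)


# Toy 2-D "embeddings": one view matches the prompt, one is orthogonal to it.
print(mean_view_clip_score([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))  # 0.5
```

Averaging over views rewards models that look right from every angle, not just from one flattering camera position.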

5) Egocentric Retrieval: Parse first-person videos and find a specific segment (e.g., in a video of a chef cooking spaghetti, the segment that shows how much salt was added).

Here, the NLSOM has five agents, all based on GPT3.5-turbo: four debaters and one editor. A human provides the video description externally. Each debater receives a portion of the scene, and the debaters then discuss among themselves how to answer the question. After several rounds of discussion, the editor steps in and summarizes the discussion, and this summary forms the answer. This is a monarchical structure; in the democratic version, the debaters vote and decide by themselves.

Tests were conducted on a subset of the Ego4D dataset.

The results were significantly better than using a single agent (which didn't beat a random baseline). In this specific task and configuration, democracy prevailed.

6) Embodied AI: Tasks include a robot exploring an unknown environment and then answering questions about what it found (embodied question answering).

Here, there are three agents: a captain (LLM) who controls the robot; an observer (VLM) who answers questions about images from observations; and a first mate (LLM) who queries the VLM and reports to the captain. The agents are built on BLIP2 and GPT3.5-turbo.

They were tested on one of the Habitat datasets of 3D home interiors (by the way, the third version of this simulator was recently released).

The NLSOM explored the environment better than a uniform random policy, covering a larger portion of it.
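To illustrate how such a coverage comparison works, here is a toy simulation on a discrete grid, which is my simplification (Habitat scenes are continuous 3D interiors). `uniform_random` is the kind of baseline mentioned above; `prefer_unvisited` is a hypothetical heuristic included only to show two policies scored with the same metric. It is not the NLSOM.

```python
import random
from typing import Callable, Iterator, Set, Tuple

Cell = Tuple[int, int]
Policy = Callable[[Cell, Set[Cell], int, random.Random], Cell]
MOVES = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def neighbors(cell: Cell, size: int) -> Iterator[Cell]:
    x, y = cell
    for dx, dy in MOVES:
        nx, ny = x + dx, y + dy
        if 0 <= nx < size and 0 <= ny < size:
            yield (nx, ny)


def coverage(policy: Policy, size: int = 10, steps: int = 50, seed: int = 0) -> float:
    rng = random.Random(seed)
    pos: Cell = (0, 0)
    visited: Set[Cell] = {pos}
    for _ in range(steps):
        pos = policy(pos, visited, size, rng)
        visited.add(pos)
    # Coverage: fraction of grid cells visited at least once.
    return len(visited) / (size * size)


def uniform_random(pos: Cell, visited: Set[Cell], size: int, rng: random.Random) -> Cell:
    return rng.choice(list(neighbors(pos, size)))


def prefer_unvisited(pos: Cell, visited: Set[Cell], size: int, rng: random.Random) -> Cell:
    # Toy heuristic (not the NLSOM): step into unseen cells when possible.
    options = list(neighbors(pos, size))
    fresh = [c for c in options if c not in visited]
    return rng.choice(fresh or options)


print(coverage(uniform_random), coverage(prefer_unvisited))
```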

7) General Language-based Task Solving: Proposing solutions for any given linguistic task. A very open-ended framework.

KAUST's CAMEL framework (link) was used to create three agents, all based on GPT3.5-turbo. One agent specifies the task based on a user prompt. The other two play roles set by the user and collaborate to solve the given task. For example, a "Python Programmer" agent and a "Game Developer" agent together create a dice game.
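The turn-taking behind this kind of role-playing can be sketched as follows. This is not CAMEL's actual API: `role_play`, the stub agents, and the `TASK_DONE` marker are hypothetical. The sketch assumes one role issues instructions and the other solves them, until the instructing role declares the task finished or a turn limit is reached.

```python
from typing import Callable, List, Tuple

Agent = Callable[[str], str]  # hypothetical stand-in for a GPT-3.5 chat call


def role_play(user_idea: str, specifier: Agent, instructor: Agent, assistant: Agent,
              done_marker: str = "TASK_DONE", max_turns: int = 10) -> List[Tuple[str, str]]:
    task = specifier(user_idea)            # turn a vague idea into a concrete task
    log: List[Tuple[str, str]] = []
    message = task
    for _ in range(max_turns):
        instruction = instructor(message)  # e.g. the "Game Developer" role
        if done_marker in instruction:     # the instructing role ends the session
            break
        solution = assistant(instruction)  # e.g. the "Python Programmer" role
        log.append((instruction, solution))
        message = solution
    return log


# Scripted stubs: two instructions, then the instructor declares the task done.
script = iter(["Write a roll() function", "Add a score table", "TASK_DONE"])
log = role_play(
    "a dice game",
    specifier=lambda idea: f"Build a two-player {idea} in Python",
    instructor=lambda msg: next(script),
    assistant=lambda instr: f"# code for: {instr}",
)
print(len(log))  # 2
```

The turn limit matters in practice: without it, two chat models can keep politely handing the conversation back and forth indefinitely.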

—

In short, the results are impressive, and I believe this is where the future lies. You can't go far with prompts alone; many serious tasks require external orchestration and working within the LLM Programs paradigm. Multiple agents add a new dimension for cases where a single agent is no longer enough, and many tasks can be set up this way. If you like, you can view it as Chain-of-Thought spread across different models rather than within one, or as ensemble modeling with richer communication protocols. At the very least, any procedure that needs to evaluate intermediate results can be set up in this paradigm, with a critic as one of the agents. The concept fits naturally with the actor model and languages like Erlang/Elixir. It would be interesting to see a DSL or an OTP analog built on top of them.
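To make the actor-model analogy concrete, here is a minimal sketch using Python's `asyncio` rather than Erlang/Elixir: each agent owns a mailbox and processes one message at a time, and a critic is just another actor. The `Actor` class and the lambda "models" are hypothetical, and there is no supervision tree, which is exactly the part an OTP analog would add.

```python
import asyncio
from typing import Callable


class Actor:
    """An agent with a private mailbox that handles one message at a time."""

    def __init__(self, name: str, handle: Callable[[str], str]) -> None:
        self.name = name
        self.handle = handle          # hypothetical stand-in for an LLM call
        self.mailbox: asyncio.Queue = asyncio.Queue()

    async def run(self) -> None:
        while True:
            message, reply_to = await self.mailbox.get()
            if message is None:       # "poison pill": stop the actor
                break
            reply_to.set_result(self.handle(message))

    async def ask(self, message: str) -> str:
        # Send a message and wait for the reply (like gen_server:call in Erlang/OTP).
        reply_to = asyncio.get_running_loop().create_future()
        await self.mailbox.put((message, reply_to))
        return await reply_to

    async def stop(self) -> None:
        await self.mailbox.put((None, None))


async def main() -> str:
    writer = Actor("writer", lambda task: f"draft for {task}")
    critic = Actor("critic", lambda draft: "approve" if draft.startswith("draft") else "reject")
    runners = [asyncio.create_task(a.run()) for a in (writer, critic)]
    draft = await writer.ask("a dice game")
    verdict = await critic.ask(draft)
    for a in (writer, critic):
        await a.stop()
    await asyncio.gather(*runners)
    return verdict


print(asyncio.run(main()))  # approve
```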

Economy of Minds (EOM)

An intriguing question is "credit assignment" for individual NLSOM modules within the reinforcement learning paradigm, and, more broadly, agent economies. The conventional approach would be to train NLSOM members with policy gradients, as is done for LSTMs. However, Schmidhuber proposes alternatives, such as his vintage "Neural Bucket Brigade (NBB)" mechanism (source), in which competing neurons pay "weight substance" to the neurons that activated them. Interestingly, this method appears to be an evolution of the even older "Bucket Brigade" (source) by John Holland, who contributed significantly to genetic algorithms (a topic dear to my heart, and the topic of my PhD a long time ago).
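Here is a toy version of Holland-style bucket-brigade credit passing, assuming a fixed chain of modules where each one activates the next. It is a simplified illustration, not Schmidhuber's NBB (which moves weight substance between neurons): each activated module pays a fraction of its strength to the module that activated it, and only the last module is rewarded by the environment.

```python
from typing import List


def bucket_brigade(strength: List[float], reward: float,
                   bid_fraction: float = 0.1, episodes: int = 100) -> List[float]:
    """Toy credit passing along a fixed chain: module 0 activates 1, 1 activates 2, ..."""
    s = list(strength)
    for _ in range(episodes):
        for i in range(len(s)):
            bid = bid_fraction * s[i]
            s[i] -= bid              # module i pays for having been activated...
            if i > 0:
                s[i - 1] += bid      # ...to the module that activated it
            # (module 0's bid leaves the system, i.e. goes to the environment)
        s[-1] += reward              # the environment pays only the last module
    return s


print(bucket_brigade([0.0, 0.0, 0.0], reward=1.0, episodes=2))  # [0.0, 0.1, 1.9]
```

With `reward=1.0` and `bid_fraction=0.1`, every module's strength approaches `reward / bid_fraction = 10` as episodes accumulate, even though only the last module ever touches the environment: credit flows backward along the chain.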

Furthermore, since NLSOM members communicate in human language, their rewards can also be expressed in human-understandable forms, perhaps even something as tangible as money.

The excitement doesn't stop here!

Certain NLSOM members can interact with their environment, and in return, the environment rewards them with money (say, USD). Suppose a specific NLSOM member, M, starts with a certain amount of USD. However, M must pay rent, taxes, and bills within the NLSOM and to other relevant players in the environment. If M goes bankrupt, M is removed from the NLSOM. This entire scenario can be termed the "Economy of Minds (EOM)". M can pay other NLSOM members for services. Another member, N, might accept the offer, serve M, and get paid. But the contract between M and N must be valid and enforceable (say, in line with EU law), so a legal authority is required to validate it. For instance, this could be an LLM that has passed the bar exam. This LLM would also mediate disputes. Moreover, wealthy NLSOM members might produce offspring (copies or modified versions of themselves) and pass on some of their wealth.
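A minimal sketch of the EOM bookkeeping, under loudly simplified assumptions: a flat per-tick `rent` stands in for rent, taxes, and bills; bankruptcy means a negative balance; and `legal_check` is a hypothetical predicate in place of the bar-exam-passing LLM that validates contracts. All names are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Member:
    name: str
    usd: float


class EconomyOfMinds:
    def __init__(self, rent: float,
                 legal_check: Callable[[str, str, float], bool]) -> None:
        self.rent = rent                 # flat per-tick cost (stands in for rent, taxes, bills)
        self.legal_check = legal_check   # hypothetical stand-in for the "legal LLM"
        self.members: Dict[str, Member] = {}
        self._births = 0

    def join(self, name: str, usd: float) -> None:
        self.members[name] = Member(name, usd)

    def reward(self, name: str, usd: float) -> None:
        # The environment pays a member for useful interaction.
        self.members[name].usd += usd

    def pay_for_service(self, client: str, provider: str, price: float) -> bool:
        # A contract executes only if it is ruled valid and the client can afford it.
        if not self.legal_check(client, provider, price):
            return False
        payer = self.members[client]
        if payer.usd < price:
            return False
        payer.usd -= price
        self.members[provider].usd += price
        return True

    def tick(self) -> None:
        # Everyone pays rent; members with a negative balance are bankrupt and removed.
        for m in self.members.values():
            m.usd -= self.rent
        self.members = {n: m for n, m in self.members.items() if m.usd >= 0}

    def reproduce(self, name: str, threshold: float, share: float = 0.5) -> Optional[str]:
        # A wealthy member spawns an offspring and hands over part of its wealth.
        parent = self.members[name]
        if parent.usd < threshold:
            return None
        self._births += 1
        child = f"{name}-child{self._births}"
        gift = parent.usd * share
        parent.usd -= gift
        self.members[child] = Member(child, gift)
        return child


eom = EconomyOfMinds(rent=10.0, legal_check=lambda c, p, price: price > 0)
eom.join("M", 100.0)
eom.join("N", 5.0)
eom.pay_for_service("M", "N", 20.0)    # M: 80, N: 25
for _ in range(3):
    eom.tick()                         # N drops to -5 on the third tick
print(sorted(eom.members))             # ['M']
print(eom.reproduce("M", threshold=40.0))  # M-child1
```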

LLM-based EOMs can merge with other EOMs or even integrate into real human economies and marketplaces. Various EOMs (and NLSOMs in general) might partially overlap: an agent could be a member of several groups. EOMs can collaborate or compete like corporations, serving different clients. There must be guidelines to avoid conflicts of interest (e.g., one EOM shouldn't spy on another). Overall, human societies can significantly inspire further exploration of this subject.

I'll conclude with a quote from the research:

“Just like current LLMs consist of millions of neurons connected through connections with real-valued weights, future AIs may consist of millions of NLSOMs connected through natural language, distributed across the planet, with dynamically changing affiliations, just like human employees may move from one company to another under certain conditions, in the interest of the greater good. The possibilities opened up by NLSOMs and EOMs seem endless. Done correctly, this new line of research has the potential to address many of the grand challenges of our time.”