I did a rapid-fire exercise: What were the most significant and interesting AI developments last year?

Below is a 10-minute brain dump of what immediately came to mind, plus about an hour to flesh it out. I surely missed some important developments—with more "test-time compute," the results would probably be more accurate, but this quick-take format has its own appeal.

Here's an idea: why not try this yourself? Take 10 minutes to list what YOU think were the key AI developments of 2024. What immediately springs to mind?

🤔 Here's my quick take on the major AI developments in 2024:

#1. Test-time Compute

The revolution started with OpenAI's "Learning to Reason" and o1, which introduced a new dimension for model scaling. The idea is not entirely new (think CNN inference with multiple augmentations of the input, or Tailoring), but it is having its Sputnik moment with o3, Gemini 2.0 Flash Thinking Mode, and QwQ.

2025 will be heavily focused on this.
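To make the "spend more compute at inference" idea concrete, here is a toy sketch of one simple form of test-time compute: sampling several answers and taking a majority vote (often called self-consistency). This is not how o1 or o3 work internally; `noisy_solver` is a hypothetical stand-in for a single stochastic model sample, and the 60% single-sample accuracy is an assumption chosen purely for illustration.

```python
import random
from collections import Counter


def noisy_solver(question: int, rng: random.Random) -> int:
    """Hypothetical stand-in for one model sample.

    The "correct" answer is question * 2; we assume the solver
    gets it right 60% of the time and is off by a little otherwise.
    """
    correct = question * 2
    if rng.random() < 0.6:
        return correct
    return correct + rng.choice([-2, -1, 1, 2])


def majority_vote(question: int, n_samples: int, seed: int = 0) -> int:
    """Spend more inference compute: sample n times, return the most common answer."""
    rng = random.Random(seed)
    answers = [noisy_solver(question, rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, n_questions: int = 200) -> float:
    """Fraction of toy questions answered correctly with n_samples per question."""
    hits = sum(
        majority_vote(q, n_samples, seed=q) == q * 2 for q in range(n_questions)
    )
    return hits / n_questions


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"samples per question: {n:2d}  accuracy: {accuracy(n):.2f}")
```

The same model gets more accurate simply because it is allowed to "think" more times per question, at a proportionally higher cost.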

#2. SSM Goes Mainstream

State Space Models and SSM-Transformer hybrids gained momentum. Recent developments include Bamba and Falcon3-Mamba.

For more details, see the slides from my talk at DataFest Yerevan in September 2024.

#3. True LLM Competition

The field evolved from OpenAI's dominance to multiple strong players: Anthropic's Claude, OpenAI's GPT, Google's Gemini, plus robust open-source models like Llama, Gemma, and Qwen.

Personal note: Claude 3.5 Sonnet has become my go-to model.

#4. Multimodal LLMs as Standard

Leading LLMs now routinely handle text, images, and audio. GPT, Gemini, Claude, Llama — multimodality has quietly become the norm without revolutionary fanfare.

#5. Code Generation's Practical Breakthrough

LLMs for coding have become genuinely useful.

Personal experience: I built a Flutter app with a Python backend in a weekend, starting from zero Flutter knowledge, using Claude and Copilot with GPT/Gemini. I also rely heavily on these tools for typical visualization and data-processing tasks. After using VSCode + Copilot or Colab with Gemini, coding without AI assistance feels notably inefficient.

I wrote about this trend in Russian Forbes back in 2017: "Why AI Will Replace Engineers". It seems we are heading in that direction.

Key observation: the productivity gap is widening. Experienced programmers become vastly more efficient with these tools, while the tools help little if you can't validate a solution proposed by an LLM. "The rich get richer."

#6. Video Generation's Emergence

OpenAI's Sora had a long journey from announcement to (partial) availability, but this led to multiple alternatives emerging. The field is no longer dominated by a single leader.

Video from Veo 2 blog post:

#7. Neural Networks' Nobel Recognition

John J. Hopfield and Geoffrey Hinton received the Nobel Prize in Physics, and David Baker, Demis Hassabis, and John Jumper received the Nobel Prize in Chemistry.

Beyond the award, neural networks are transforming scientific research, with quantitative advances becoming qualitative breakthroughs.

#8. Open Models' Impact

My impression is that open-source models lag commercial leaders by roughly 1-1.5 years in capabilities and quality across a large set of tasks. However, they continue to make impressive progress with new iterations of Llama, Gemma, and others, consistently pushing the boundaries of what is available off the shelf.

#9. World Models

I would not say there was a significant breakthrough, and the topic is not new (see David Ha and Schmidhuber's work on World Models, and earlier work), but the evolution continues.

Video generation models are, in some sense, world simulators, and I particularly like the Oasis Minecraft world generation: it's a beautiful proof of concept. It will be interesting to see how this influences the gaming industry. I understand that Unreal Engine and the like contain much more, and that you still need a lot of rules and determinism, but perhaps the answer is a neural-engine hybrid? Just as gaming evolved from local play to cloud gaming and streaming with GeForce NOW, maybe one day we will see "neuro streaming."

Notable: Danijar Hafner (the author of Dreamer, PlaNet, etc.) submitted his PhD thesis "Embodied Intelligence Through World Models" with Hinton and LeCun on the doctoral committee. I like this topic so much!

#10. KAN's Breakthrough

Kolmogorov-Arnold Networks (KANs) gained significant traction, with rapid community development. I am not aware of any specific killer app yet, but they are still interesting.

Yes, we KAN!

Authors: Ziming Liu, Yixuan Wang, Sachin Vaidya, Fabian Ruehle, James Halverson, Marin Soljačić, Thomas Y. Hou, Max Tegmark

#11. AI Agents

Agents are everywhere, and it's a multifaceted topic. Last year's generative agents were scaled to 1000-person simulations, multi-agent frameworks (LangChain/LangGraph, AutoGen, CrewAI) are actively evolving, and multi-agentic workflows are a natural fit for many real-life processes.

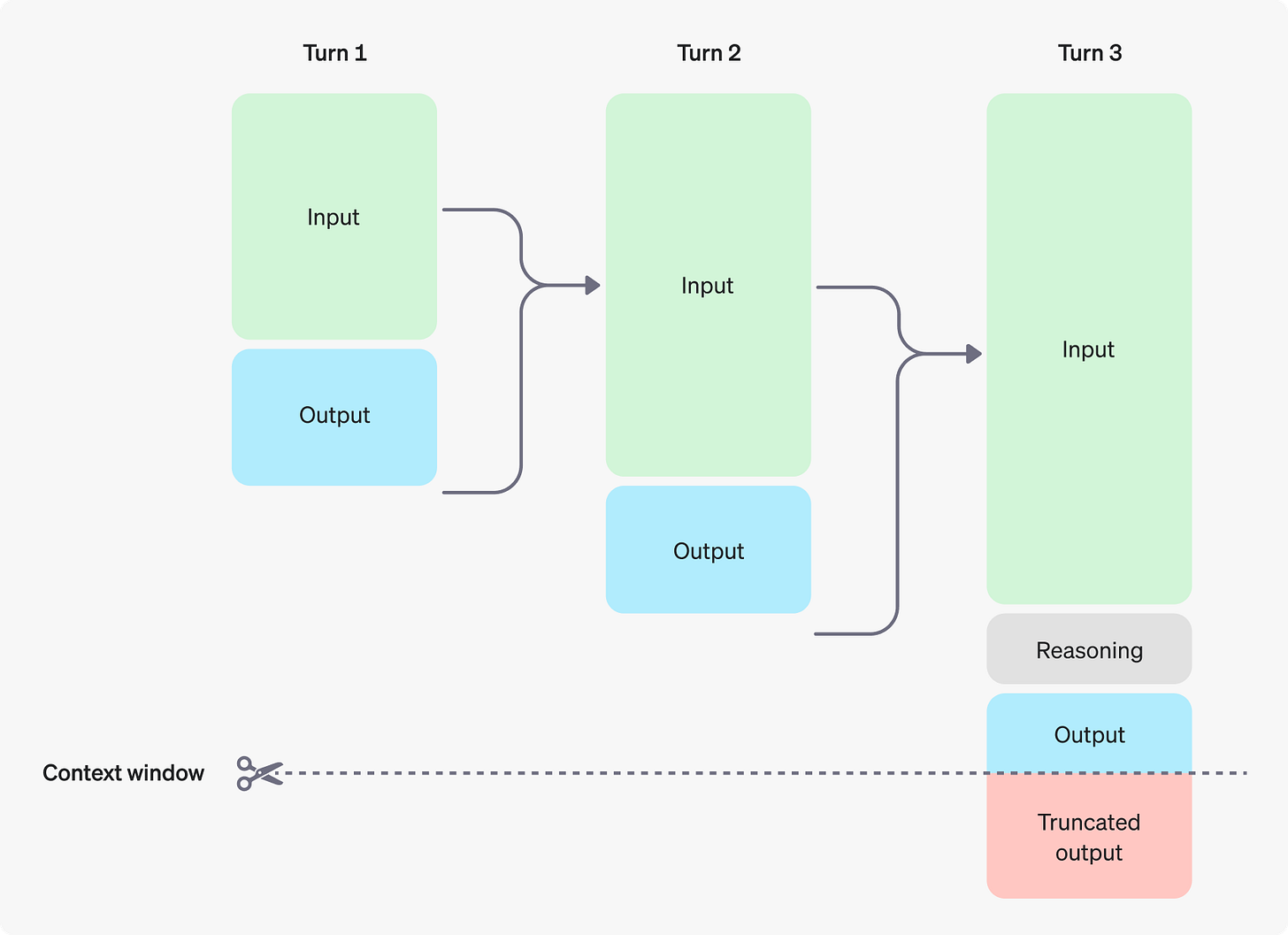

Agents and multi-agents represent the progression from basic LLMs with prompt engineering to augmented LLMs (RAG, tools) and beyond. Current LLM limitations—finite context windows (not to mention effective context windows), complex prompt handling, and dealing with conflicting roles—become manageable through modular agent systems.

It's partially the same test-time compute story as for the o1/o3 families — process data longer — but now with a sequence of agents. The tradeoff is the same: quality vs. cost and time.
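As a rough illustration of "a sequence of agents" and the quality-versus-cost tradeoff, here is a minimal sketch in plain Python. It does not use any of the frameworks mentioned above; the `Agent` class, the agent names, and the cost numbers are all hypothetical, and each `run` function is a placeholder for what would be an LLM call in a real system.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Agent:
    name: str
    run: Callable[[str], str]  # hypothetical stand-in for an LLM call
    cost: float                # hypothetical cost units per call


def run_pipeline(agents: List[Agent], task: str) -> Tuple[str, float, List[Tuple[str, str]]]:
    """Pass the task through each agent in order, tracking total cost and a trace."""
    text = task
    total_cost = 0.0
    trace: List[Tuple[str, str]] = []
    for agent in agents:
        text = agent.run(text)
        total_cost += agent.cost
        trace.append((agent.name, text))  # per-agent output, useful for debugging
    return text, total_cost, trace


# Three single-purpose agents: each does one thing, in the Unix spirit.
drafter = Agent("drafter", lambda t: f"draft({t})", cost=1.0)
critic = Agent("critic", lambda t: f"reviewed({t})", cost=0.5)
editor = Agent("editor", lambda t: f"final({t})", cost=0.5)

if __name__ == "__main__":
    result, cost, trace = run_pipeline([drafter, critic, editor], "summarize 2024")
    print(result)
    print("total cost:", cost)
```

Every extra agent in the chain adds cost and latency, and the per-agent trace is what makes the system easier to observe and debug than a single monolithic prompt.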

Will GPT-8 replace multi-agents? While it will improve capabilities, the separation-of-concerns principle and the Unix philosophy of "Write programs that do one thing and do it well" remain valuable. Multi-agents offer better ownership, change management, debugging, and observability. Even with GPT-8, we'll likely see multi-super-agents.

What developments would you add to this list? What stood out to you in the field this year?

🎉🎉🎉Happy New Year! 🎄🌲🌴