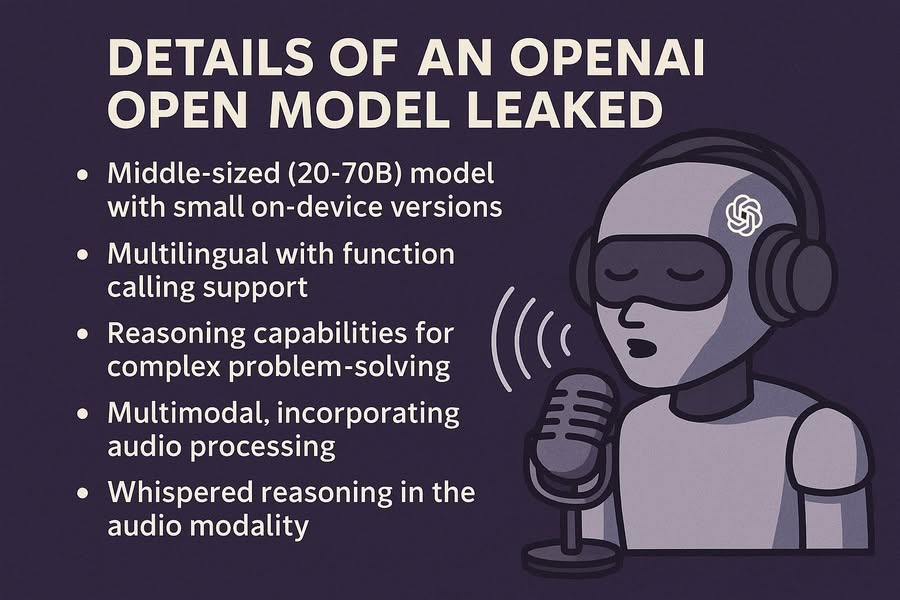

Details have allegedly leaked about OpenAI’s upcoming open model — and they’re wild. According to The Information, OpenAI already has the model in-house and is currently running safety evaluations before a public release in the coming months.

Here’s what’s (supposedly) known so far:

Mid-sized model: It’s expected to land somewhere in the 20–70B parameter range, with smaller variants in the works for on-device deployment. Yes, that means you might soon be running OpenAI-grade models on your phone.

Multilingual + function calling: Of course. It's 2025, and this is table stakes now. Will OpenAI be more transparent than Google and actually publish the list of supported languages?

Reasoning-first: This is where things get exciting. There aren't many mid-sized models built specifically for reasoning tasks. DeepSeek is great, but you need a fleet of H200s just to boot it up. OpenAI might be the first to bring reasoning to the masses (unless Llama beats them to it). Sure, there's QwQ, but Chinese models may soon be banned in the US.

Multimodal... with audio: Now we're talking. The model reportedly supports audio as both input and output. Think of it as a hybrid of Whisper and o3-mini. On-device inference will be accelerated by the NPU and DSP, which is especially handy for audio workloads.

Audio-based reasoning is real: And now the punchline — the model will literally reason out loud. But don’t worry, to avoid disturbing users, it will whisper its thoughts (naturally, it’s Whisper-based). If they let you customize the voice, we might see GPT-powered radio stations pop up. Or imagine it muttering through a tough logic puzzle under its breath.

Insiders say OpenAI is already testing verbal math reasoning, though the model still forgets to carry the one.

Biggest mystery: no name yet for the model.

We’re now waiting for Meta and Google to respond. Rumors say Zuckerberg has hired Eminem to fine-tune Meta’s model for freestyle reasoning.

Meanwhile, at Google DeepMind, an emergency meeting reportedly kicked off plans for bi-reasoning — where the model plays both the thinker and the critic in a debate, complete with distinct voices. (Well, it is called Gemini for a reason.)

Oh, and apparently it already exists and is being quietly tested inside NotebookLM — remember those podcast demos?