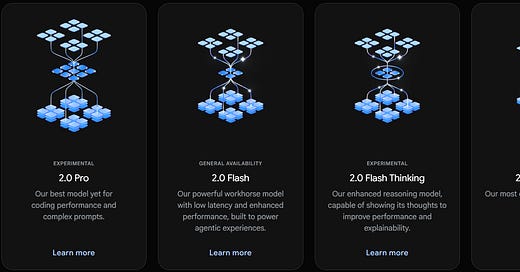

Google’s Gemini 2.0 family includes several interesting models:

Gemini 2.0 Flash (GA)

Gemini 2.0 Flash-Lite (Preview)

Gemini 2.0 Pro (Experimental)

Gemini 2.0 Flash Thinking (Experimental)

The good news is that they all support a long context of 1M tokens (Pro supports 2M tokens). Gemini 2.0 Flash also supports document understanding, so you can add multiple PDFs to your requests. That’s exactly what you need to analyze a typical arXiv paper.

So I decided to try it on the DeepSeek-R1 paper. The choice was natural: I recently wrote my own (human-made) review of this paper, so it would be interesting to see what Gemini can do.

I uploaded the paper to a Cloud Storage bucket (when given the direct arXiv link, Gemini returned an error) and created a Colab notebook that includes this PDF in a prompt and asks a set of general questions you might ask when analyzing a new paper. You can find everything in the notebooks on Colab and GitHub (links above).

What surprised me was that the results were both fast and accurate. It took about 7 seconds to process a 22-page file and answer a specific question, even without context caching, which is only supported by the Gemini 1.5 family.

Summary

Here is Gemini’s brief summary:

DeepSeek-AI introduces DeepSeek-R1, a novel approach to enhance reasoning capabilities in Large Language Models (LLMs) through reinforcement learning (RL). The research explores the potential of LLMs to develop reasoning skills without supervised fine-tuning (SFT), emphasizing self-evolution via RL.

The study starts with DeepSeek-R1-Zero, directly applying RL to a base model. It demonstrates remarkable reasoning behaviors emerging naturally through the RL process. This pure RL approach validates that LLMs can be incentivized to reason effectively without prior SFT, marking a significant milestone.

To address limitations of DeepSeek-R1-Zero, like readability and language mixing, DeepSeek-R1 incorporates a multi-stage training pipeline, including a small amount of cold-start data before RL. This involves collecting high-quality data for initial fine-tuning, followed by reasoning-oriented RL. Furthermore, new SFT data is created using rejection sampling, enhancing capabilities in various domains. After that, the model undergoes another RL phase that considers prompts from all scenarios. DeepSeek-R1 achieves performance comparable to OpenAI-o1-1217 on reasoning tasks.

The researchers further investigate distilling the reasoning patterns from DeepSeek-R1 into smaller, more efficient models. Distillation proves more effective than directly applying RL to smaller base models, highlighting the importance of reasoning patterns discovered by larger models. DeepSeek-AI has made DeepSeek-R1-Zero, DeepSeek-R1, and six dense models, distilled from DeepSeek-R1, openly accessible to the research community.

It’s much better than the paper's own abstract:

We introduce our first-generation reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrates remarkable reasoning capabilities. Through RL, DeepSeek-R1-Zero naturally emerges with numerous powerful and intriguing reasoning behaviors. However, it encounters challenges such as poor readability, and language mixing. To address these issues and further enhance reasoning performance, we introduce DeepSeek-R1, which incorporates multi-stage training and cold-start data before RL. DeepSeek-R1 achieves performance comparable to OpenAI-o1-1217 on reasoning tasks. To support the research community, we open-source DeepSeek-R1-Zero, DeepSeek-R1, and six dense models (1.5B, 7B, 8B, 14B, 32B, 70B) distilled from DeepSeek-R1 based on Qwen and Llama.

I especially like that the model highlighted an interesting point: distillation proved more effective than applying RL directly to smaller models. I also noticed this point when reading the paper and mentioned it in my review.

Below are the questions (as section headers) and Gemini’s answers. I asked the questions sequentially, with no memory shared between requests, so the answers contain some redundancy.
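Each question goes out as a fresh request, so the loop behind these answers can be sketched roughly as below. This is a minimal illustration, not the notebook’s actual code. `ask` is a hypothetical stand-in for the real Gemini call; in the Python SDK that would be something like a `generate_content` request with the PDF attached. The request dictionaries use a made-up format, and `PAPER_URI` is a placeholder bucket path.

```python
from typing import Callable, Dict, List

# Hypothetical placeholder; the real notebook referenced a PDF in a Cloud Storage bucket.
PAPER_URI = "gs://YOUR_BUCKET/deepseek-r1.pdf"


def build_request(paper_uri: str, question: str) -> List[Dict[str, str]]:
    """Build one self-contained request: the PDF reference plus a single question."""
    return [
        {"type": "pdf", "uri": paper_uri},
        {"type": "text", "text": question},
    ]


def ask_all(
    questions: List[str],
    ask: Callable[[List[Dict[str, str]]], str],
    paper_uri: str = PAPER_URI,
) -> Dict[str, str]:
    """Ask each question in a fresh request, so no earlier answer is visible to the model."""
    answers: Dict[str, str] = {}
    for question in questions:
        request = build_request(paper_uri, question)  # no chat history is appended
        answers[question] = ask(request)
    return answers


if __name__ == "__main__":
    # Stub "model" that only reports how many parts it received.
    def fake_ask(request: List[Dict[str, str]]) -> str:
        return f"{len(request)} parts"

    qs = ["What is the central problem?", "What are the key contributions?"]
    print(ask_all(qs, fake_ask))
```

Passing the model call in as a parameter makes the stateless structure explicit. It also lets the loop be tested with a stub.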

What is the central problem or challenge the paper aims to address?

The DeepSeek-R1 paper addresses the challenge of incentivizing robust reasoning capabilities in large language models (LLMs) without relying on extensive supervised fine-tuning (SFT). While SFT is a common technique, the authors explore whether powerful reasoning can emerge directly through reinforcement learning (RL).

The central problem is two-fold:

Can an LLM develop complex reasoning abilities through RL alone, bypassing the need for labeled reasoning data? This is investigated with DeepSeek-R1-Zero, a model trained solely with RL.

How can an RL-trained LLM be further improved to achieve human-aligned reasoning and strong general capabilities? This is addressed with DeepSeek-R1, which incorporates a small amount of "cold-start" data and a multi-stage RL pipeline.

The paper also delves into the problem of efficiently transferring reasoning capabilities to smaller models via distillation, allowing them to achieve better performance than RL-trained small models. Ultimately, the paper seeks to demonstrate a comprehensive methodology for developing highly capable reasoning LLMs through RL and distillation, reducing the reliance on resource-intensive supervised methods.

Why is this problem important and what gap in the current literature does the paper fill?

This paper addresses a critical challenge in the field of large language models (LLMs): incentivizing reasoning capability without relying heavily on supervised fine-tuning (SFT). The problem is important because SFT, while effective, requires extensive human-annotated data, which is expensive, limits scalability, and can introduce bias. Furthermore, relying primarily on SFT may stifle the model's ability to explore novel reasoning strategies independently.

The paper tackles this by introducing DeepSeek-R1, trained through reinforcement learning (RL), demonstrating remarkable reasoning capabilities without initial SFT. This underscores that reasoning can emerge organically through RL, unlocking significant potential for self-evolution in LLMs. To address DeepSeek-R1-Zero's limitations in readability and language mixing, DeepSeek-R1 incorporates multi-stage training and cold-start data before RL.

The paper fills a significant gap in the current literature by:

Validating RL-Only Reasoning: Explicitly demonstrating that pure RL can successfully incentivize sophisticated reasoning behaviors in LLMs, challenging the prevalent reliance on SFT.

A Novel Training Pipeline: Introducing a multi-stage training pipeline, which allows the models to improve reasoning patterns and align with human preferences.

Distillation Insights: Demonstrating that distilling reasoning patterns from large, RL-trained models can lead to smaller, highly capable models, which are beneficial for downstream tasks and accessibility to a broader research community. The release of the distilled models allows researchers to continue to improve them.

In essence, the paper pushes the boundaries of LLM training by showcasing the power of RL in fostering autonomous reasoning and introducing new possibilities for creating more efficient reasoning models.

What are the key contributions and innovations introduced in the paper?

The paper "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning" presents several key contributions to the field of language model reasoning.

Pure RL-based Reasoning: It demonstrates that strong reasoning capabilities can emerge in LLMs through reinforcement learning (RL) without supervised fine-tuning (SFT). This is significant because it validates that reasoning can be incentivized directly through RL, reducing reliance on expensive curated datasets. The initial model, DeepSeek-R1-Zero, showcases this emergent reasoning.

Multi-Stage RL Pipeline: The paper introduces a novel multi-stage training pipeline for DeepSeek-R1. This involves an initial cold-start SFT with high-quality reasoning examples, followed by reasoning-oriented RL, then rejection sampling for SFT data creation, and finally a subsequent RL stage that aligns the model with human preferences.

Distillation of Reasoning Skills: It demonstrates that reasoning patterns learned by larger models can be effectively distilled into smaller, more efficient models through SFT. The paper open-sources distilled models based on Qwen and Llama, significantly surpassing existing open-source alternatives on reasoning benchmarks.

Novel Application of GRPO: The paper demonstrates the efficient use of Group Relative Policy Optimization (GRPO) to accelerate the training phase of RL.

In essence, the paper highlights RL as a powerful tool for developing reasoning capabilities, offers a practical training methodology and emphasizes the value of distilling knowledge from larger to smaller models.
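Here is a concrete version of the GRPO point (my own illustration, not part of Gemini’s answer). GRPO avoids a separate critic model. It samples a group of outputs per prompt and uses the group’s reward statistics as the baseline. Below is a minimal sketch of that advantage computation, assuming the mean/std normalization described in the DeepSeekMath and DeepSeek-R1 papers. Using the population std and adding a small `eps` for groups with identical rewards are my assumptions; implementations differ on both.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """GRPO-style advantages: normalize each reward by its group's mean and std.

    The group mean plays the role of the baseline that PPO would get from a critic.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the sampled group (assumption)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Rewards for G = 4 sampled answers to one prompt (1.0 = correct, 0.0 = wrong).
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

If every answer in a group gets the same reward, all advantages are zero. Such prompts contribute no learning signal in this sketch.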

How does the proposed approach differ from or improve upon previous methods?

The DeepSeek-R1 paper presents advancements in incentivizing reasoning capabilities in LLMs via reinforcement learning (RL), differing from previous methods in several key aspects.

Firstly, DeepSeek-R1-Zero demonstrates that LLMs can develop remarkable reasoning skills through pure RL, without relying on supervised fine-tuning (SFT) as a preliminary step. This contrasts with many prior works that typically use SFT to bootstrap reasoning abilities before RL alignment. This highlights RL's potential to independently discover reasoning patterns.

Secondly, DeepSeek-R1 incorporates a multi-stage training pipeline involving cold-start data, reasoning-oriented RL, rejection sampling, and a second SFT and RL stage focused on general capabilities (helpfulness/harmlessness). This holistic approach contrasts with simpler, single-stage RL fine-tuning strategies found in some prior works.

Thirdly, the paper emphasizes distilling reasoning capabilities into smaller models post-RL, demonstrating that the discovered reasoning patterns from larger models can be effectively transferred. Direct distillation from DeepSeek-R1 outperformed RL on smaller models.

Finally, while previous work explores process-based reward models (PRM) and Monte Carlo Tree Search (MCTS), the authors found them less effective than rule-based accuracy and language consistency rewards during RL, and than the performance gains obtained through distillation.
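Rule-based rewards are simple enough to sketch. The paper describes two of them. The accuracy reward requires math answers in a specified format, such as a box, so rules can check them. The format reward requires the reasoning to sit between `<think>` and `</think>` tags. The exact matching rules below and the equal-weight sum are my assumptions, not the paper’s code.

```python
import re

# Expected layout: reasoning in <think> tags, followed by an <answer> block.
THINK_FORMAT = re.compile(r"^<think>.*?</think>\s*<answer>.*?</answer>\s*$", re.DOTALL)
# Captures the contents of \boxed{...} (no nested braces, for simplicity).
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the <think>...</think> <answer>...</answer> layout."""
    return 1.0 if THINK_FORMAT.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the last \\boxed{...} answer exactly matches the reference (after trimming)."""
    answers = BOXED.findall(completion)
    return 1.0 if answers and answers[-1].strip() == reference.strip() else 0.0


def rule_based_reward(completion: str, reference: str) -> float:
    # Assumption: the two rule-based signals are added with equal weight.
    return accuracy_reward(completion, reference) + format_reward(completion)


if __name__ == "__main__":
    sample = "<think>2 + 2 = 4</think> <answer>\\boxed{4}</answer>"
    print(rule_based_reward(sample, "4"))
```

Real checkers normalize answers, for example by comparing math expressions symbolically. That normalization is where reward hacking can creep in if the rules are too loose.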

What is the overall framework or algorithm used in this work?

The paper introduces DeepSeek-R1, a large language model (LLM) designed to enhance reasoning capabilities through Reinforcement Learning (RL). The core framework revolves around a multi-stage training pipeline leveraging both supervised fine-tuning (SFT) and RL.

Initially, a base model (DeepSeek-V3-Base) undergoes SFT using a small dataset of high-quality, long Chain-of-Thought (CoT) examples. This "cold start" aims to provide a foundation for reasoning. The model then enters a reasoning-oriented RL phase, optimizing for accuracy and language consistency in tasks like coding, mathematics, and logic. The GRPO method is used to reduce the cost of RL training.
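The answer mentions GRPO only in passing, so here is its objective as I read it in the DeepSeek-R1 paper, which takes it from DeepSeekMath. For each question q, a group of G outputs o_i is sampled from the old policy. ε is the clipping range and β weights the KL penalty toward a reference policy π_ref. ρ_i is my own shorthand for the probability ratio. The advantage A_i needs no value model because its baseline is the group mean.

```latex
\begin{aligned}
\mathcal{J}_{\mathrm{GRPO}}(\theta) &= \mathbb{E}\left[q \sim P(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\mathrm{old}}}(O \mid q)\right] \\
&\quad \frac{1}{G}\sum_{i=1}^{G}\left(\min\left(\rho_i A_i,\ \mathrm{clip}\left(\rho_i,\, 1-\varepsilon,\, 1+\varepsilon\right) A_i\right) - \beta\,\mathbb{D}_{\mathrm{KL}}\left(\pi_\theta \,\|\, \pi_{\mathrm{ref}}\right)\right), \\
\rho_i &= \frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{\mathrm{old}}}(o_i \mid q)}, \\
\mathbb{D}_{\mathrm{KL}}\left(\pi_\theta \,\|\, \pi_{\mathrm{ref}}\right) &= \frac{\pi_{\mathrm{ref}}(o_i \mid q)}{\pi_\theta(o_i \mid q)} - \log\frac{\pi_{\mathrm{ref}}(o_i \mid q)}{\pi_\theta(o_i \mid q)} - 1, \\
A_i &= \frac{r_i - \mathrm{mean}(\{r_1, \dots, r_G\})}{\mathrm{std}(\{r_1, \dots, r_G\})}.
\end{aligned}
```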

Following RL convergence, a rejection sampling technique is employed on the resulting checkpoint to generate new SFT data covering various domains, including writing, role-playing, and general-purpose tasks. This expanded dataset is used for another round of SFT on DeepSeek-V3-Base.
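In this setting, rejection sampling means generating several responses per prompt from the RL checkpoint and keeping only the acceptable ones. For reasoning data, the paper keeps correct answers and filters out chains of thought with mixed languages, long paragraphs, or code blocks. Below is a minimal sketch. `generate` and `is_acceptable` are hypothetical stand-ins for the model and the filter, and the `samples_per_prompt` default is arbitrary.

```python
import random
from typing import Callable, List, Tuple


def rejection_sample(
    prompts: List[str],
    generate: Callable[[str], str],
    is_acceptable: Callable[[str, str], bool],
    samples_per_prompt: int = 4,
) -> List[Tuple[str, str]]:
    """Sample several candidates per prompt and keep only those the checker accepts.

    The kept (prompt, response) pairs become new SFT data.
    """
    dataset: List[Tuple[str, str]] = []
    for prompt in prompts:
        for _ in range(samples_per_prompt):
            response = generate(prompt)
            if is_acceptable(prompt, response):
                dataset.append((prompt, response))
    return dataset


if __name__ == "__main__":
    rng = random.Random(0)
    references = {"2+2": "4", "3*3": "9"}

    def toy_model(prompt: str) -> str:
        # Toy "model": returns the right answer about half the time.
        return references[prompt] if rng.random() < 0.5 else "42"

    def checker(prompt: str, response: str) -> bool:
        return response == references[prompt]

    print(rejection_sample(list(references), toy_model, checker))
```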

Finally, a second RL stage is implemented, accounting for prompts from diverse scenarios to improve helpfulness and harmlessness while refining reasoning skills. This iterative process leads to the DeepSeek-R1 model.

The work also explores distillation, where the learned reasoning patterns of DeepSeek-R1 are transferred to smaller, open-source models (Qwen and Llama) through direct fine-tuning on the generated data.
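Distillation here is plain SFT of a smaller model on the teacher’s outputs; the paper applies no RL stage to the distilled models. Below is a minimal sketch of turning teacher samples into training records. The chat-style JSONL schema and the `<think>` wrapping are hypothetical choices for illustration, not the paper’s data format.

```python
import json
from typing import Iterable, List, Tuple


def to_sft_records(samples: Iterable[Tuple[str, str, str]]) -> List[str]:
    """Turn (prompt, teacher_reasoning, teacher_answer) triples into JSONL chat records.

    Hypothetical schema: a user turn with the prompt and an assistant turn that keeps
    the teacher's reasoning inside <think> tags, so the student learns to imitate it.
    """
    lines: List[str] = []
    for prompt, reasoning, answer in samples:
        record = {
            "messages": [
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": f"<think>\n{reasoning}\n</think>\n{answer}"},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines


if __name__ == "__main__":
    for line in to_sft_records([("What is 2+2?", "2 + 2 = 4", "4")]):
        print(line)
```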

Overall, the framework relies on an iterative approach alternating between supervised fine-tuning and reinforcement learning to improve both reasoning capabilities and human alignment for the models.

Are the technical details (e.g., training methods, architecture, optimization strategies) clearly explained and justified?

The DeepSeek-R1 paper provides a reasonable level of detail regarding its technical aspects, but with some areas warranting further clarity.

Strengths: The paper clearly outlines the two-stage training approach, highlighting the use of GRPO for RL and incorporating cold-start data. The motivation for using rule-based rewards in DeepSeek-R1-Zero to avoid reward hacking is well-justified. The data curation process for cold-start and SFT, including the emphasis on readability and filtering, is also described.

Areas for Improvement: While GRPO is mentioned, a more in-depth explanation of its implementation specifics (e.g., group size, baseline estimation) would benefit experts. Similarly, details on the architecture of DeepSeek-V3-Base, which serves as the foundation, are missing. The language consistency reward is introduced, but the method of calculation and its weighting within the overall reward function could be elaborated upon. Additionally, information on hyperparameter tuning strategies and compute resources used for training would be beneficial.

Overall: The paper strikes a balance between providing sufficient technical details to understand the methodology and maintaining conciseness. While certain aspects could be further elaborated, the core techniques and their justifications are presented clearly enough for AI/ML experts to grasp the overall approach. The paper's focus on high-level innovations and empirical results likely drove the decision to omit excessively granular technical specifications.
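The paper does give the basic definition this answer asks for. The language consistency reward is the proportion of target-language words in the chain of thought, and it is added directly to the accuracy reward. Below is a minimal sketch, assuming English as the target language. The regex word split and the pure-ASCII test are crude stand-ins for real language identification.

```python
import re
from typing import Callable

# Unicode-aware word tokens; CJK runs without spaces count as a single token.
WORD = re.compile(r"\w+")


def is_english_word(word: str) -> bool:
    """Crude heuristic (assumption): a word counts as English if it is pure ASCII."""
    return word.isascii()


def language_consistency_reward(
    cot: str, in_target_language: Callable[[str], bool] = is_english_word
) -> float:
    """Fraction of words in the chain of thought that are in the target language."""
    words = WORD.findall(cot)
    if not words:
        return 0.0
    return sum(in_target_language(w) for w in words) / len(words)


def total_reward(accuracy: float, cot: str) -> float:
    # The paper describes directly summing the two terms; no extra weighting here.
    return accuracy + language_consistency_reward(cot)


if __name__ == "__main__":
    print(language_consistency_reward("Let me check 答案"))
```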

How does the paper balance theoretical insights with practical implementation (e.g., use of reinforcement learning, supervised fine-tuning, or a hybrid approach)?

The DeepSeek-R1 paper effectively balances theoretical reinforcement learning (RL) concepts with practical language model implementation through a multi-stage training pipeline.

Theoretically, the paper explores the potential of RL for emergent reasoning without supervised fine-tuning (SFT), demonstrating that RL alone can incentivize complex reasoning behaviors in LLMs (DeepSeek-R1-Zero). This addresses the fundamental question of whether reasoning can arise purely from reward-driven learning, bypassing the need for curated datasets.

Practically, the approach is implemented using the GRPO algorithm to improve model performance in reasoning. To mitigate issues from pure RL like poor readability and language mixing, the paper introduces DeepSeek-R1 which incorporates a cold-start phase of limited supervised data, as well as multi-stage training.

This hybrid approach blends theoretical RL with the practical benefits of SFT to achieve state-of-the-art results. The paper also explores distillation, transferring the reasoning capabilities of larger models into smaller, more efficient ones through supervised fine-tuning.

Overall, the paper demonstrates a keen awareness of both the theoretical underpinnings of RL and the pragmatic considerations necessary for deploying high-performing language models, striking a balance between exploration and exploitation in the design of its training methodology.

What datasets, benchmarks, or tasks are used to validate the proposed method?

The DeepSeek-R1 paper validates its approach to incentivizing reasoning capabilities in LLMs using a comprehensive suite of datasets and benchmarks, encompassing reasoning, coding, and knowledge-based tasks.

For reasoning tasks, the models were evaluated on:

AIME 2024

MATH-500

CNMO 2024

For coding-related tasks, they used:

Codeforces (percentile and rating)

LiveCodeBench

SWE-bench Verified

Aider-Polyglot

Knowledge-based evaluation included:

MMLU

MMLU-Redux

MMLU-Pro

GPQA Diamond

SimpleQA

FRAMES

The paper also includes benchmarks such as AlpacaEval 2.0 and Arena-Hard to evaluate open-ended generation and alignment with human preferences. The focus is on showing superior or competitive performance against established baselines like OpenAI-o1-mini, OpenAI-o1-1217, DeepSeek-V3, and other open-source models.

Are the experiments designed rigorously to support the authors' claims?

The DeepSeek-R1 paper presents compelling results, and while the experimental design is generally sound, there are areas that could benefit from greater rigor.

Strong points include the comprehensive benchmark suite covering various reasoning tasks (math, coding, knowledge). The comparison against strong baselines, including OpenAI's models, provides valuable context. The ablation studies on the impact of different training stages (RL, SFT) are informative.

However, the paper could be strengthened by:

More detailed hyperparameter tuning information. The paper lacks specifics regarding hyperparameter selection for both SFT and RL stages. Reporting a wider range of values would allow for reproducibility.

Analysis on the impact of data contamination. The benchmarks used are common, which raises concerns regarding data contamination. The authors should address the possible overlap between training data and evaluation sets.

Statistical significance testing. While accuracy is reported, statistical significance testing is largely absent. Including p-values would help confirm if the reported differences are statistically significant.

Fairness and bias analysis. An analysis of the models' fairness and potential biases is missing.

Overall, the experimental design adequately supports the authors' claims, but more details concerning hyperparameter tuning, data contamination, statistical significance, and fairness would be beneficial.
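On the statistical significance point, a p-value is one option. A paired bootstrap over benchmark items is a common, assumption-light way to compare two models evaluated on the same questions. Below is a minimal sketch (my own illustration). Each list holds 1/0 correctness per item, and the resample count and seed are arbitrary.

```python
import random
from typing import List, Tuple


def paired_bootstrap_ci(
    correct_a: List[int],
    correct_b: List[int],
    n_resamples: int = 10_000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Paired bootstrap over benchmark items for the accuracy difference A - B.

    correct_a[i] and correct_b[i] are 1/0 results of two models on the same item i.
    Returns (observed difference, lower bound, upper bound) of a (1 - alpha) interval.
    """
    if len(correct_a) != len(correct_b) or not correct_a:
        raise ValueError("need two equally long, non-empty result lists")
    n = len(correct_a)
    diffs = [a - b for a, b in zip(correct_a, correct_b)]
    observed = sum(diffs) / n
    rng = random.Random(seed)
    # Resample items with replacement, keeping each item's pair of results together.
    stats = sorted(
        sum(diffs[rng.randrange(n)] for _item in range(n)) / n
        for _resample in range(n_resamples)
    )
    lo = stats[int((alpha / 2) * n_resamples)]
    hi = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return observed, lo, hi
```

If the interval excludes zero, the gap is unlikely to be resampling noise. On a 30-problem benchmark such as AIME 2024, these intervals can be wide. That is why this kind of check matters for small benchmarks.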

How does the performance compare with state-of-the-art methods or established baselines?

The DeepSeek-R1 paper presents a significant advancement in reasoning capabilities for large language models (LLMs), achieving performance comparable to OpenAI's o1-1217 on reasoning tasks. Here's a breakdown of its comparative performance against baselines:

DeepSeek-R1 vs. OpenAI o1-1217:

Comparable performance on tasks like MATH-500 (97.3% Pass@1) and AIME 2024 (79.8% Pass@1), slightly surpassing o1-1217 on AIME.

Excellent knowledge, but slightly below o1-1217 on the MMLU, MMLU-Pro, and GPQA Diamond benchmarks.

DeepSeek-R1 vs. DeepSeek-V3 (and other closed-source models):

Superior performance on knowledge benchmarks (MMLU, GPQA), FRAMES, and the factual benchmark SimpleQA, outperforming the base model (DeepSeek-V3) by significant margins.

Demonstrates enhanced performance on the instruction-following benchmark IFEval.

DeepSeek-R1-Zero vs. OpenAI o1-0912:

After RL, DeepSeek-R1-Zero significantly increases its pass@1 score on AIME 2024, reaching a score comparable to OpenAI-o1-0912.

Distilled DeepSeek-R1 models vs. Open-Source LLMs:

Distilled smaller dense models (Qwen and Llama series) significantly outperform previous open-source models, such as QwQ-32B-Preview, with the distilled 14B setting a new performance benchmark.

Overall:

DeepSeek-R1 demonstrates robust performance that rivals current state-of-the-art commercial models, while also providing open-source models that are competitive with or exceed other comparable open-source models.
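The Pass@1 figures above are averages rather than single greedy answers. As I read the paper’s evaluation setup, it samples k responses per question with a non-zero temperature and averages their correctness: pass@1 = (1/k) Σ p_i. Below is a minimal sketch of that aggregation, assuming each response has already been judged correct or incorrect.

```python
from typing import List


def pass_at_1(per_question_samples: List[List[bool]]) -> float:
    """Mean over questions of the fraction of the k sampled responses that are correct.

    For one question this is (1/k) * sum(p_i), with p_i = 1 if response i is correct.
    """
    if not per_question_samples:
        raise ValueError("no questions")
    per_question = [sum(samples) / len(samples) for samples in per_question_samples]
    return sum(per_question) / len(per_question)


if __name__ == "__main__":
    # Two questions, k = 4 samples each.
    print(pass_at_1([[True, False, True, True], [False, False, False, False]]))
```

Averaging over several samples gives a lower-variance estimate than a single greedy decode. This matters for small benchmarks.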

Are there any ablation studies or error analyses that help understand the factors driving the performance?

Based on the DeepSeek-R1 paper, while it doesn't explicitly label sections as "ablation studies" or "error analyses" in the traditional sense, there are several elements that contribute to understanding the factors driving performance:

Comparison of DeepSeek-R1-Zero vs. DeepSeek-R1: Comparing the performance of the zero-shot RL model (R1-Zero) against the model with cold-start data (R1) highlights the impact of even a small amount of high-quality supervised data on reasoning and convergence speed.

Language Consistency Reward: Introducing a language consistency reward during RL training demonstrates a trade-off: slight performance degradation in benchmarks but improved readability, suggesting a controllable balance between accuracy and human preference.

Distillation Analysis: The paper compares RL with SFT-based distillation and reports the performance of models trained with each strategy. This highlights that distilling a larger model into smaller ones through supervised fine-tuning yields better results.

Unsuccessful Attempts: Discussing failures with PRM and MCTS provides insights into the challenges of fine-grained reward modeling and scalable test-time computation in reasoning. This implicitly points to the effectiveness of the chosen RL approach with rule-based rewards and cold-start data.

While a formal error analysis (e.g., categorizing failure cases) is absent, these elements collectively provide a nuanced understanding of the individual contributions of different training stages and techniques to the final DeepSeek-R1 performance.

Does the paper provide insights into why the proposed method works (e.g., through qualitative examples, 'aha moments', or emergent behaviors)?

Yes, the paper provides several insights into why DeepSeek-R1 achieves improved reasoning capabilities.

"Aha" Moments and Emergent Behaviors: The paper highlights the emergence of "aha moments" during DeepSeek-R1-Zero training. These moments represent instances where the model autonomously learned to allocate more thinking time by reevaluating its initial approach to a problem. The spontaneous emergence of reflection (revisiting and reevaluating previous steps) and exploration of alternative problem-solving approaches as test-time computation increased are also presented as key emergent behaviors.

Increased Thinking Time: The analysis reveals that DeepSeek-R1-Zero naturally learns to allocate more thinking time to reasoning tasks. This intrinsic development allows the model to solve increasingly complex problems by generating hundreds to thousands of reasoning tokens, facilitating a more thorough exploration of thought processes.

Distillation Benefits: The paper demonstrates that the reasoning patterns discovered by larger models like DeepSeek-R1 can be effectively distilled into smaller models. These distilled models outperform those trained with RL alone, suggesting that the specific reasoning patterns learned are crucial for improving capabilities.

How well can the approach be interpreted or explained to a non-expert audience?

The DeepSeek-R1 paper presents advancements in language model reasoning capabilities through reinforcement learning (RL). While the technical details (GRPO, reward modeling, distillation) are complex, the core idea can be communicated effectively to a non-expert audience.

The key concept is training AI models to "think better" through a system of rewards, similar to training a pet with treats. Instead of direct instruction (supervised learning), the model learns to solve problems by trying different approaches and receiving "rewards" for correct answers and well-structured reasoning. DeepSeek-R1 builds upon this by incorporating a "cold start" – a basic understanding before the reward system is applied – analogous to teaching a child some basic rules before letting them explore.

Furthermore, the paper explores "distillation," which is like extracting the knowledge from a highly experienced teacher (large model) and transferring it to a smaller, more efficient student (smaller model). This makes the sophisticated reasoning abilities accessible in smaller, more practical AI models.

The results show improved performance on challenging reasoning tasks, demonstrating the effectiveness of the RL approach. While the inner workings are opaque, the high-level concepts of incentivizing reasoning and knowledge transfer are readily understandable, making the approach explainable in principle, even if specific implementation details remain inaccessible to non-experts. The potential for improved reasoning capabilities in AI systems is the central takeaway.

What are the current limitations or potential weaknesses of the approach?

The DeepSeek-R1 paper presents a compelling approach to incentivizing reasoning capabilities in LLMs through reinforcement learning. However, the approach has limitations and weaknesses.

Generalization & Scaling of RL: The RL training appears heavily focused on specific benchmarks. Over-optimization on these benchmarks might lead to reduced generalization to unseen reasoning tasks or real-world scenarios. The paper also notes the heavy compute requirements of large-scale RL.

Dependence on Base Model and Data Quality: The success hinges on a strong base model (DeepSeek-V3) and the quality of the cold-start data used for fine-tuning. Biases or limitations within these components could propagate into the final model. Though attempts are made to filter, outputs may still be challenging to read and contain long paragraphs.

Lack of Rigorous Ablation Studies: While the paper describes a multi-stage training pipeline, detailed ablation studies isolating the impact of each stage (cold-start data, reasoning-oriented RL, rejection sampling) are somewhat lacking. This makes it harder to understand the individual contribution of each element.

Potential for Reward Hacking: Though the authors avoided neural reward models, reliance on rule-based rewards can still lead to reward hacking if not carefully designed and monitored.

Sensitivity to Prompting: The paper mentions the model's sensitivity to prompting strategies. This requires expert knowledge to use effectively.

Language Mixing & Other Tasks: Language mixing remains a problem, as well as challenges in tasks such as function calling, multi-turn, complex role-playing, and JSON output.

Are there suggestions for future research or open questions that remain?

Yes, the DeepSeek-R1 paper highlights several avenues for future research and open questions:

Improving General Capabilities: The paper identifies limitations in tasks like function calling, multi-turn conversations, complex role-playing, and generating JSON output compared to DeepSeek-V3. Future work could explore how to better leverage long Chain-of-Thought (CoT) reasoning to enhance performance in these areas.

Addressing Language Mixing: The model currently struggles with language mixing issues when handling queries in languages other than Chinese and English. Research is needed to improve multilingual reasoning capabilities and ensure consistent language use across different contexts.

Prompt Engineering Sensitivity: The model's performance is sensitive to prompting styles, with few-shot prompting degrading results. Investigating more robust prompting techniques and developing methods for zero-shot generalization are crucial.

Software Engineering Tasks: Scaling reinforcement learning to improve performance on software engineering benchmarks is a challenge due to long evaluation times. Future research should explore rejection sampling on software engineering data and asynchronous evaluation methods to improve efficiency.

RL/HF trade-off: While RL fine-tuning increases performance, on the Chinese SimpleQA benchmark DeepSeek-R1 performs worse than DeepSeek-V3 because, after safety RL, it refuses to answer certain queries. Without safety RL, DeepSeek-R1 could achieve an accuracy of over 70%. So what is the optimal balance between RL and HF?

What are the broader implications of the work for the research community, industry, or society?

This DeepSeek-R1 research, centered on incentivizing reasoning in LLMs through reinforcement learning, has significant implications:

Research Community: The findings demonstrate that strong reasoning capabilities can emerge organically in LLMs via RL without relying on initial supervised fine-tuning. This challenges the conventional wisdom and opens up a new avenue for exploration. The open-sourcing of the models and datasets enables further investigation into RL-driven reasoning, cold-start strategies, and distillation techniques.

Industry: The success of distillation in transferring reasoning abilities to smaller, more efficient models is highly relevant to industry. Deploying smaller models with strong reasoning capabilities is cost-effective and energy-efficient. The methodology offers a pathway to create specialized, high-performing models for specific reasoning-intensive applications (coding, mathematics, scientific reasoning). Furthermore, the robust multi-stage training pipeline and the discovery of key training components (cold-start data, language consistency reward) offer a valuable roadmap for companies aiming to build LLMs.

Society: Improved reasoning capabilities in LLMs can lead to more reliable and accurate AI-driven tools. Education, problem-solving, and decision-making tasks can potentially be significantly improved by RL and distillation. Furthermore, the focus on alignment with human preferences during reinforcement learning and improving the helpfulness and harmlessness aspects of these LLMs has the potential to improve AI interactions in daily life.

Are ethical, safety, or deployment considerations discussed in the paper?

Yes, ethical, safety, and deployment considerations are discussed in the DeepSeek-R1 paper.

Ethical Considerations: The paper acknowledges the potential for language mixing issues, particularly when handling queries in languages other than Chinese and English. There is a discussion that DeepSeek-R1 might default to English for reasoning and responses, even if the original query was in another language. The authors also acknowledge potential safety RL related refusals to answer specific queries, demonstrating the importance of responsible AI development.

Safety Considerations: The authors emphasize aligning the model with human preferences through a secondary reinforcement learning stage, explicitly aiming to improve the model's helpfulness and harmlessness. They evaluate the model's entire response, including reasoning and summaries, to identify and mitigate potential risks, biases, or harmful content.

Deployment Considerations: The paper highlights DeepSeek-R1's sensitivity to prompting styles, noting that few-shot prompting can degrade performance. They recommend that users directly describe the problem and specify the output format using a zero-shot setting for optimal results. This guidance aims to ensure users can effectively leverage the model's capabilities. Additionally, the discussion of distilling the reasoning capability to small dense models and open-sourcing them shows the commitment to the community.
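The zero-shot recommendation is easy to follow in code: state the problem directly, then the output format, with no worked examples. Below is a minimal sketch. The message layout is a generic chat format, and the math instruction is only an example format string, so treat both as assumptions to adapt.

```python
from typing import Dict, List

# Example output-format instruction (an assumption; adapt it to the task).
MATH_FORMAT = "Please reason step by step, and put your final answer within \\boxed{}."


def zero_shot_messages(problem: str, output_format: str = MATH_FORMAT) -> List[Dict[str, str]]:
    """A single user turn: the problem stated directly, followed by the desired output format.

    Deliberately contains no worked examples, since few-shot prompting was reported to hurt.
    """
    content = f"{problem.strip()}\n\n{output_format.strip()}"
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    print(zero_shot_messages("What is the sum of the first 10 positive integers?"))
```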

Is the work reproducible? Are the code, data, and experimental settings sufficiently detailed or openly available?

Based on the paper "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning," here's an assessment of reproducibility:

Strengths (Towards Reproducibility):

Model Release: The authors open-source DeepSeek-R1-Zero, DeepSeek-R1, and distilled models (1.5B-70B parameters). This is a major boon for reproducibility.

Algorithm Detail: GRPO is described with supporting mathematical equations, so the reinforcement learning algorithm is adequately detailed.

Training Data Details: The paper describes how the cold-start, SFT, and RL data were created, with fine-tuning starting from the DeepSeek-V3-Base model.

Benchmark Datasets: Standard benchmarks used for evaluation are publicly accessible (MMLU, GPQA, etc.). Evaluation prompts can be derived from DeepSeek-V3.

Weaknesses (Hindering Reproducibility):

Complete Experimental Settings: Although some settings are specified, full disclosure of the exact hyperparameters and training configurations would be highly valuable.

Reward model details: Specifics of the rule-based reward models and of the generative reward model based on DeepSeek-V3 are lacking.

Computational Resources: The scale of training (model sizes, hardware) is not fully specified, making replication challenging for researchers with limited resources.

Conclusion:

The release of models is a major strength. However, full reproducibility would benefit from a complete specification of the training configuration and specifics of the training data and reward models. Researchers can use the released checkpoints for downstream applications but may struggle to precisely replicate the training process from scratch.

I’d say the answers are good (and fast!).

Some things could be improved. For example, the DeepSeek-V3-Base or GRPO details could be supplied by adding the corresponding papers to the prompt. But that is a matter of better orchestration and prompting, not of the model itself.

It’s a pity that capability support across the Gemini family is uneven. PDFs are supported only in 2.0 Flash, reasoning only in the separate Flash Thinking model, and context caching only in the 1.5 family. Since some of our questions assume reasoning, we might expect even better results from the Flash Thinking model.

Overall, I’m satisfied with the results. This does not replace a complete paper review that dives into all the interesting technical details, but it’s just the start. Better reasoning models with PDF support and larger output sizes, as well as a multi-agent system with specialized agents, could make a difference.

Google Cloud credits are provided for this project.
