Mortal Computers

Geoffrey Hinton on Two Paths to Intelligence

It starts with Forward-Forward

I've been putting off reading Geoffrey Hinton's paper on the Forward-Forward learning algorithm, or FF (https://arxiv.org/abs/2212.13345), for a while now. In a nutshell, it's an alternative to backpropagation. Instead of a forward and a backward pass, it uses two contrastive forward passes: one on positive (real) data, where each layer adjusts its weights to increase "goodness", and one on negative data, where each layer adjusts its weights to decrease it. Goodness can be defined in different ways; for instance, it could be the sum of squared activations.
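To make the two passes concrete, here is a minimal NumPy sketch of a single FF layer. It assumes the sum-of-squares goodness mentioned above, a logistic loss on goodness minus a threshold, and toy positive and negative data drawn from two Gaussian clouds. The class name `FFLayer`, the threshold, the learning rate and the data are illustrative choices, not values from the paper (which, for example, builds negative MNIST examples by pairing images with wrong labels and normalizes each layer's activity vector before feeding it to the next layer).

```python
import numpy as np

rng = np.random.default_rng(0)


def goodness(h):
    # Goodness as suggested in the paper: sum of squared activations per sample.
    return np.sum(h ** 2, axis=1)


def sigmoid_neg(z):
    # sigmoid(-z) = 1 / (1 + exp(z)), written with tanh to avoid overflow.
    return 0.5 * (1.0 - np.tanh(0.5 * z))


class FFLayer:
    """Hypothetical ReLU layer trained only with its own local FF loss."""

    def __init__(self, n_in, n_out, threshold=2.0, lr=0.03):
        self.W = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
        self.b = np.zeros(n_out)
        self.threshold = threshold
        self.lr = lr

    def forward(self, x):
        return np.maximum(0.0, x @ self.W + self.b)

    def train_step(self, x_pos, x_neg):
        dW = np.zeros_like(self.W)
        db = np.zeros_like(self.b)
        loss = 0.0
        # sign=+1: push goodness above the threshold; sign=-1: push it below.
        for x, sign in ((x_pos, 1.0), (x_neg, -1.0)):
            pre = x @ self.W + self.b
            h = np.maximum(0.0, pre)
            z = sign * (goodness(h) - self.threshold)  # logit of "is positive"
            loss += np.mean(np.logaddexp(0.0, -z))      # -log sigmoid(z)
            dz = -sigmoid_neg(z) / len(x)               # dLoss/dz per sample
            # Chain rule through goodness (2h) and the ReLU mask; purely local.
            dpre = (dz * sign)[:, None] * 2.0 * h * (pre > 0)
            dW += x.T @ dpre
            db += dpre.sum(axis=0)
        self.W -= self.lr * dW
        self.b -= self.lr * db
        return loss


# Toy data: positive and negative samples come from different distributions.
x_pos = rng.normal(1.0, 0.5, size=(256, 10))
x_neg = rng.normal(-1.0, 0.5, size=(256, 10))

layer = FFLayer(10, 32)
losses = [layer.train_step(x_pos, x_neg) for _ in range(300)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print("mean goodness pos/neg:",
      goodness(layer.forward(x_pos)).mean(),
      goodness(layer.forward(x_neg)).mean())
```

The weight update uses only the layer's own input and output; nothing is propagated back from later layers, which is the locality property that makes FF attractive.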

What's great about this algorithm is its locality: it doesn't require propagating errors backward through the entire system. Moreover, it can work with "black boxes" in the forward pass, because it doesn't need an exact model of the computations there, and therefore doesn't need their derivatives. One could estimate those derivatives instead, but that's computationally expensive, especially for larger networks.

In terms of design, FF draws on many different ideas: restricted Boltzmann machines (RBMs), GANs, contrastive learning methods such as SimCLR and BYOL (both covered in the Russian-language GonzoML Telegram channel), and Hinton's own GLOM. It generally works for small networks and datasets such as MNIST and CIFAR-10, but how well it scales to larger networks remains to be seen. If you'd like to dig deeper without waiting for our possible future write-up, and would rather not read the paper, you can watch Hinton's keynote:

or his conversation on the Eye on AI channel:

However, back to the paper: the most intriguing part isn't the algorithm itself. What's truly captivating are the short sections at the end on analog hardware and "mortal computation". They bring together many of Hinton's recent interests and, frankly, seem even more significant than FF. I'm even skeptical that he will keep working on FF (despite the extensive "Future Work" section), because what has emerged and crystallized here seems more important.

Enter Mortal Computers

Here's the gist.

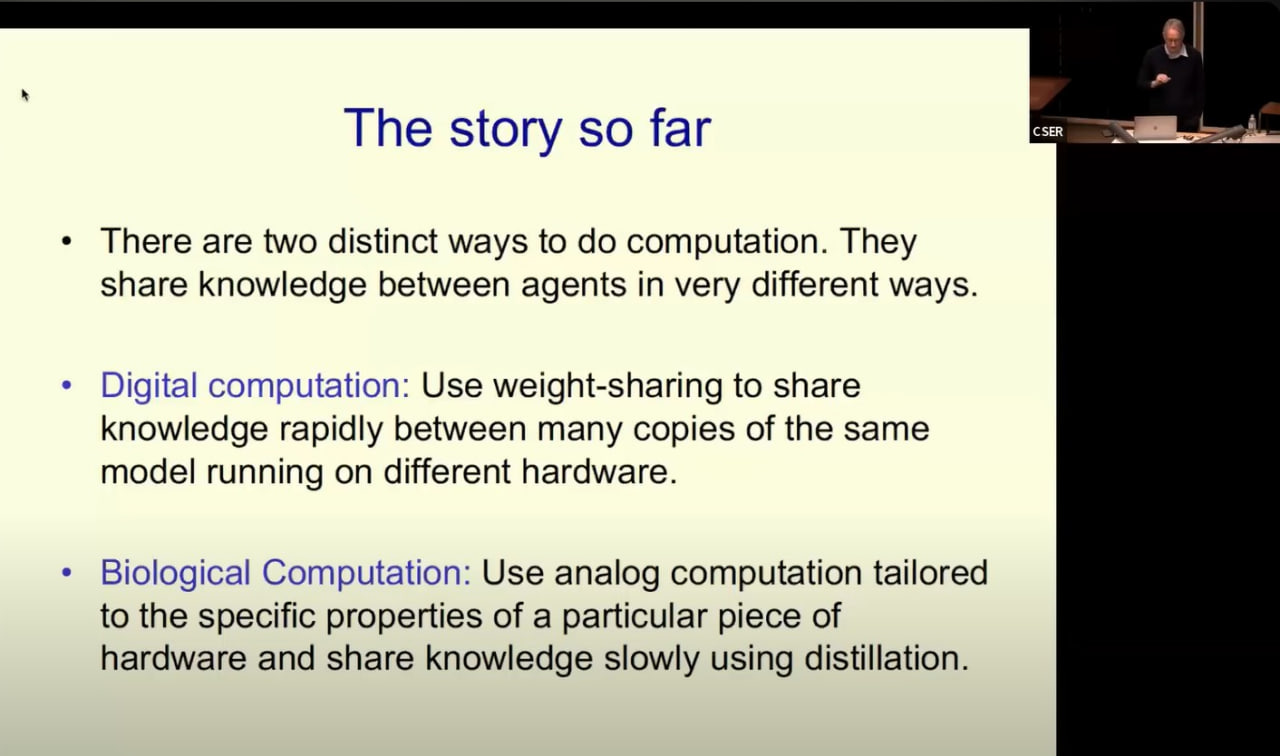

Classical computing and computer science rest on the idea that computers are built to execute instructions reliably and exactly. Because of this, we don't have to think about the physical layer or the electronics: we can cleanly separate hardware from software and study the latter on its own. Thanks to this separation, a program is portable and potentially immortal. It doesn't die with the hardware and can be run elsewhere (assuming, of course, that backups were made and verified).

But this precision and reliability come at a high cost. They require transistors driven at high power (compared to neurons), digital encoding of signals, and algorithms to process those signals. For instance, multiplying two n-bit numbers with the naive algorithm takes O(n^2) single-bit operations (asymptotically faster algorithms exist, down to O(n log n), but the point stands). In a physical system, by contrast, you could compute such products in parallel for any number of activations and weights. If activations are represented as voltages and weights as conductances, each product is a current, and the charges these currents deliver in each unit of time simply add up on a shared wire. Even if such devices aren't especially fast, the potential for parallelism is impressive.
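Here is a toy illustration of both sides of that comparison. `schoolbook_multiply` counts the n^2 single-bit ANDs needed to form the partial products of a naive n-bit multiplication, and `analog_dot` emulates an idealized crossbar in which Ohm's law performs the multiplications and Kirchhoff's current law performs the additions. Both function names are hypothetical, and the analog model ignores the noise and nonlinearity of real devices.

```python
import numpy as np


def schoolbook_multiply(a: int, b: int, n: int) -> tuple[int, int]:
    """Multiply two n-bit unsigned integers the naive way.

    Returns the product and the number of single-bit AND operations used
    to form the partial products (the shifted additions are not counted).
    """
    product, bit_ops = 0, 0
    for i in range(n):                 # for each bit of b ...
        b_i = (b >> i) & 1
        row = 0
        for j in range(n):             # ... AND it with every bit of a
            row |= (((a >> j) & 1) & b_i) << j
            bit_ops += 1
        product += row << i            # shift the partial product and accumulate
    return product, bit_ops


def analog_dot(voltages: np.ndarray, conductances: np.ndarray) -> np.ndarray:
    """Idealized analog crossbar: inputs are voltages (V), weights conductances (S).

    Each cell passes a current I = G * V (Ohm's law); currents meeting on the same
    output wire add up (Kirchhoff's current law), so every weighted sum appears
    at once. NumPy only emulates what the physics would do for free.
    """
    currents = voltages[:, None] * conductances   # one current per (input, output) cell
    return currents.sum(axis=0)                    # summed on each output wire (A)


print(schoolbook_multiply(13, 11, 8))

v = np.array([0.2, 0.5, 0.1])                        # activations as voltages
g = np.array([[1.0, 0.3], [0.5, 0.2], [0.4, 0.9]])   # weights as conductances
print(analog_dot(v, g))
```

For 8-bit inputs the digital route needs 64 ANDs before any additions, while the crossbar's output is, in principle, available as soon as the currents settle.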

The catch with analog computation is that it depends on the specific components, each with its own imperfections, and their exact properties are unknown. That is a serious problem for learning: backpropagating through an unknown function isn't straightforward, because it requires a precise model of the forward pass.

However, there's a silver lining. If we had a learning algorithm that didn't require backpropagation (and we know one exists, as the human brain demonstrates), we could use hardware whose exact parameters and connectivity are unknown. An added bonus: extremely low power consumption. Imagine shifting from the precision manufacturing of 2D chips (we're only starting to venture into 3D) in billion-dollar fabs to a much cheaper way of "growing" 3D hardware.

But there's a downside: such devices would essentially have a "lifespan", and the software becomes inseparable from the hardware. Simply copying the weights to another device won't work, because every device has its own imperfections; the knowledge has to be transferred some other way, without backpropagation. Extending the analogy, replicating a person's consciousness would be similarly problematic.
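A minimal sketch of why copied weights don't transfer, under a deliberately simplified assumption: each hypothetical device realizes every programmed weight with its own unknown multiplicative gain (here roughly ±10%). We set device A's weights so that it computes a target linear map exactly (in practice this would come from some backprop-free training on that device), then copy the same settings to device B.

```python
import numpy as np

rng = np.random.default_rng(42)
n_in, n_out = 8, 4


def make_device(rng):
    # Hypothetical manufacturing variation: every cell realizes its programmed
    # weight with its own unknown multiplicative gain (mean 1, std 0.1).
    return rng.normal(1.0, 0.1, size=(n_in, n_out))


def run(device_gain, programmed_w, x):
    # What the physical device actually computes for inputs x.
    return x @ (device_gain * programmed_w)


device_a, device_b = make_device(rng), make_device(rng)

target_w = rng.normal(size=(n_in, n_out))  # the function we want implemented
x = rng.normal(size=(256, n_in))
y = x @ target_w

# Stand-in for on-device training: we peek at A's gains and compensate exactly.
w_for_a = target_w / device_a

err_a = np.mean((run(device_a, w_for_a, x) - y) ** 2)
err_b = np.mean((run(device_b, w_for_a, x) - y) ** 2)  # same weights, other device
print(f"MSE on device A: {err_a:.2e}; copied to device B: {err_b:.2e}")
```

The weights are only meaningful together with the particular device they were learned on; on device B the same numbers implement a different function.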

We do have an effective way to train such systems, a topic Geoffrey Hinton has explored for a long time: distillation. In a nutshell, distillation trains a "student" to reproduce the probability distribution produced by a "teacher". A key advantage is that this distribution carries far more information than a bare class label. For instance, with 1024 classes a label carries 10 bits, while the distribution consists of 1023 free numbers (1024 probabilities that must sum to 1). Learning this distribution helps the student generalize better. Importantly, distillation doesn't require the student and teacher architectures to match: their elements can have different characteristics, and the overall architectures can even be completely different. For a deeper dive (if you read Russian or aren't afraid of machine translation), we've written extensively about distillation.
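The core of distillation fits in a few lines. This NumPy sketch follows the softened-softmax formulation of Hinton, Vinyals and Dean (2015): both distributions are computed at a temperature T, and the cross-entropy is scaled by T^2. The "student" here is just a matrix of free logits rather than a real network, and the temperature and learning rate are arbitrary illustrative values. The sketch also spells out the 10-bits-versus-1023-numbers arithmetic.

```python
import math

import numpy as np


def log_softmax(logits, T=1.0):
    # Temperature-scaled log-softmax, shifted by the max for numerical stability.
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits, teacher_logits, T=2.0):
    """Cross-entropy of the student's softened distribution against the teacher's.

    Multiplied by T**2, following Hinton et al. (2015), so gradient magnitudes
    stay comparable across temperatures.
    """
    p_teacher = np.exp(log_softmax(teacher_logits, T))
    return -(T ** 2) * np.mean(np.sum(p_teacher * log_softmax(student_logits, T), axis=-1))


num_classes = 1024
label_bits = math.log2(num_classes)   # a hard label: 10 bits
free_numbers = num_classes - 1        # a distribution: 1023 free values (they sum to 1)

rng = np.random.default_rng(0)
teacher = rng.normal(size=(4, num_classes))
student = rng.normal(size=(4, num_classes))

T, lr = 2.0, 50.0
before = distillation_loss(student, teacher, T)
for _ in range(200):
    p_t = np.exp(log_softmax(teacher, T))
    p_s = np.exp(log_softmax(student, T))
    # Gradient of the T**2-scaled loss w.r.t. student logits: T * (p_s - p_t) / batch.
    student -= lr * T * (p_s - p_t) / len(student)
after = distillation_loss(student, teacher, T)
print(f"distillation loss: {before:.4f} -> {after:.4f}")
```

Nothing in the loss refers to the teacher's internals, only to its output distribution, which is why the two architectures are free to differ.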

On a lighter note, Hinton believes that Donald Trump's posts aren't really about facts or their authenticity but are about distilling his beliefs by having his followers learn a probability distribution that carries a rich signal.

Returning to the challenge of training analog hardware without backpropagation, one simple (though inefficient) procedure is to perturb each weight in turn and measure the effect on the loss to estimate the gradient. Perturbing activations instead might be more effective. A robust local algorithm of this kind might be able to train large groups of neurons, possibly with varying properties and their own local objective functions. The problem is that, so far, we don't know how to train large networks effectively with such methods.
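The naive weight-perturbation idea can be sketched as follows, assuming a hypothetical black-box device that only reports a loss value for a given weight setting (a tiny tanh regression stands in for it here). Nudging each weight in turn gives a forward-difference gradient estimate without any model of the internals, at the price of one extra forward pass per weight. Activity perturbation is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# A hypothetical "black box": we may set its weights and read off the loss,
# but we have no model of its internals to differentiate through.
X = rng.normal(size=(64, 5))
y = np.tanh(X @ rng.normal(size=5))


def black_box_loss(w):
    return np.mean((np.tanh(X @ w) - y) ** 2)


def weight_perturbation_grad(loss_fn, w, eps=1e-4):
    """Estimate dLoss/dw by nudging one weight at a time (forward differences).

    Needs one extra forward pass per weight, which is why it scales so badly.
    """
    base = loss_fn(w)
    grad = np.zeros_like(w)
    for i in range(w.size):
        nudged = w.copy()
        nudged[i] += eps
        grad[i] = (loss_fn(nudged) - base) / eps
    return grad


w = np.zeros(5)
initial_loss = black_box_loss(w)
for _ in range(500):
    w -= 0.2 * weight_perturbation_grad(black_box_loss, w)
print(f"loss: {initial_loss:.4f} -> {black_box_loss(w):.4f}")
```

With 5 weights this is cheap; with billions of weights, each gradient estimate would need billions of forward passes, which illustrates why better local methods are needed.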

Current large language models (LLMs) based on transformers thrive in the conventional paradigm. We have devices built to execute instructions precisely, so we can trivially copy weights, share gradients, and train many copies on different machines in parallel. This massively increases the bandwidth with which knowledge is shared. Sharing weights or gradients has far higher bandwidth than distillation, which is the best analog devices could fall back on.
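For contrast, this is roughly what gradient sharing looks like for digital replicas, sketched in NumPy with the replicas simulated in a single process. Four identical copies of a linear model each compute a gradient on their own data shard, average the gradients, and apply the same update. Because the copies are exact, the averaged gradient equals the gradient over all the data, and each exchange moves one number per parameter. The model, data and hyperparameters are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(400, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + 0.1 * rng.normal(size=400)


def grad_mse(w, Xs, ys):
    # Gradient of the mean squared error of a linear model on one data shard.
    return 2.0 * Xs.T @ (Xs @ w - ys) / len(ys)


n_replicas = 4
shards = np.array_split(np.arange(len(y)), n_replicas)   # 4 equal shards of 100 examples
w = np.zeros(3)   # every replica starts from, and keeps, this exact same copy

for _ in range(200):
    # Each replica computes a gradient on its own shard of the data...
    grads = [grad_mse(w, X[idx], y[idx]) for idx in shards]
    # ...the replicas average their gradients (an "all-reduce")
    # and apply the identical update, so the copies never diverge.
    w -= 0.1 * np.mean(grads, axis=0)

print(w)
```

This only works because every replica computes exactly the same function from the same weights, which is precisely what mortal analog hardware gives up.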

Backpropagation (often shortened to "backprop") has proven to be an impressive algorithm, and Hinton now believes it surpasses the learning algorithms used by biological brains. However, its requirements don't match what biological systems offer, and it assumes devices with relatively high computational power.

Interestingly, LLMs currently acquire knowledge through a rather inefficient form of distillation (learning from text produced by people), which suggests there's still plenty of room for improvement. Once models start learning directly from the world, through multimodality and interaction, they'll be able to learn even better. Hello, superintelligence!

This brings us to the pressing issue of safety, which has deeply concerned Hinton over the past year. A video from Cambridge (link provided below) delves deeper into this topic than the few interviews from about six months ago.

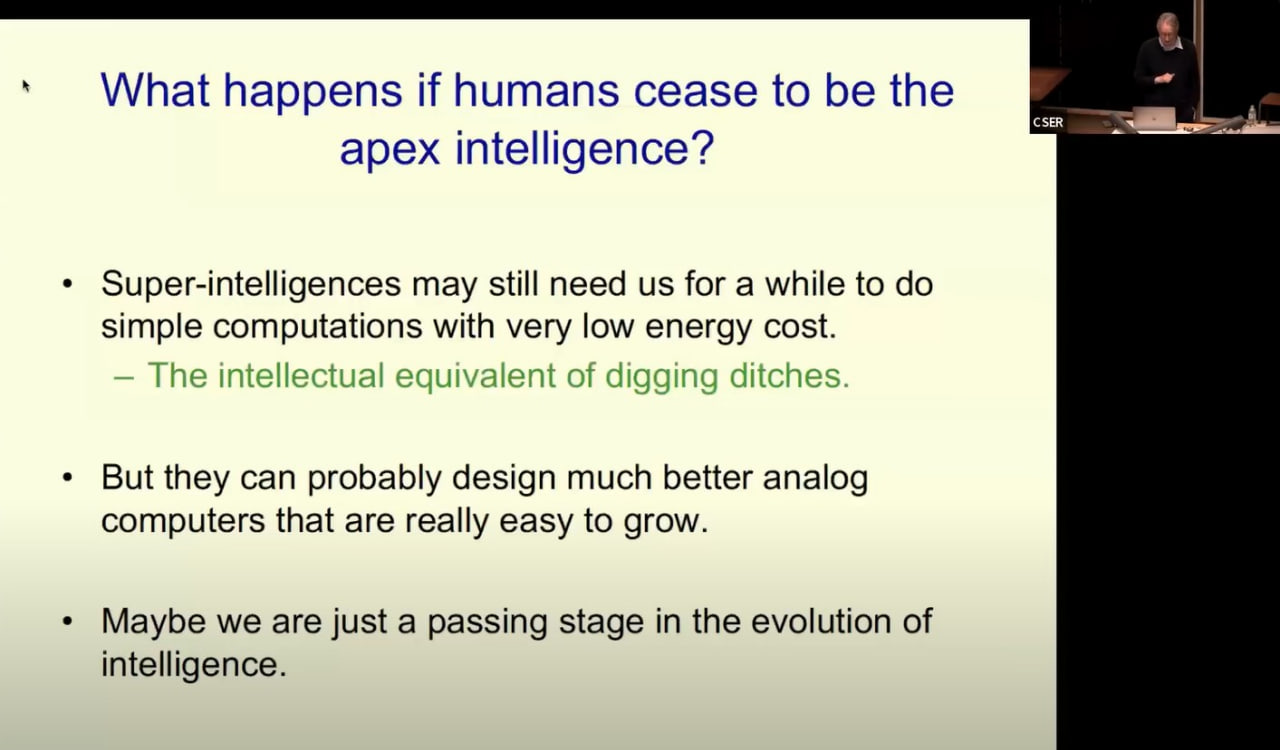

There's a very real threat that malicious actors could harness superintelligence for their own ends. Beyond that, a Super Intelligence (SI) might be more efficient if it sets its own sub-goals, and maximizing power is an obvious sub-goal that helps achieve almost any other objective. Gaining that power by manipulating humans could come naturally to such entities, especially given the vast literature on the subject (starting with, say, Machiavelli) that is already used to train LLMs.

In summary, the future is uncertain. Like analog computers, we might remain relevant for a while, but it's likely that a Super Intelligence could design something even more efficient. If so, we may turn out to be just an intermediate step in the evolution of intelligence.

For a brief overview of the topic, check out an 18-minute talk at the Vector Institute:

But for a deep dive, it's best to watch the full presentation from half a year ago in Cambridge titled "Two Paths to Intelligence".

It's over an hour long and contains valuable insights.

The image in the middle of the post was created by Midjourney (MJ) with the prompt "Mortal computers".