Intuitive Physics Emergence in V-JEPA

Intuitive physics understanding emerges from self-supervised pretraining on natural videos

Authors: Quentin Garrido, Nicolas Ballas, Mahmoud Assran, Adrien Bardes, Laurent Najman, Michael Rabbat, Emmanuel Dupoux, Yann LeCun

Paper: https://arxiv.org/abs/2502.11831

Code: https://github.com/facebookresearch/jepa-intuitive-physics

This work advances research on Joint Embedding Predictive Architectures (JEPA), world models, and learning intuitive physics from video data.

Intuitive physics is a fundamental aspect of our cognition and daily life. We naturally expect objects to behave in certain ways—they don't suddenly disappear, pass through obstacles, or arbitrarily change colors and shapes. These capabilities have been documented not only in human infants but also in primates, marine mammals, corvids, and other species. Interestingly, many AI systems that surpass human performance in language or mathematical tasks remain helpless in tasks that a cat could easily handle, illustrating Moravec's paradox—the observation that high-level reasoning requires relatively little computation while sensorimotor skills require enormous computational resources.

Researchers have tried various approaches to tackle this challenge:

Structured models with manually encoded rules about object representations and their relationships.

Pixel-based generative models that reconstruct future sensory inputs based on past observations.

The current work explores a middle ground between these two extremes—LeCun's Joint Embedding Predictive Architectures (JEPA).

See a newer result with V-JEPA here:

V-JEPA 2: Scaling V-JEPA

Title: V-JEPA 2: Self-Supervised Video Models Enable Understanding, Prediction and Planning

Understanding JEPA

The core idea of JEPA is that predictions should be made not in pixel space or other final representations, but in learned internal abstract representations. This makes it similar to structured models, but unlike them, nothing is manually encoded—everything is learned.

In JEPA, input data x (such as image pixels) is encoded into an internal representation Enc(x). A predictor then forecasts the representation of future input y, possibly considering a latent variable z that influences the prediction (like a chosen action of an object in video). This prediction is compared with the actual representation of the next input, Enc(y). This approach shares similarities with other models like BYOL, where having a separate predictor was crucial for preventing representation collapse. The encoders for x and y can be different. The advantage is that it's not necessary to predict every detail of the output object y (down to the pixel), as at this level there can be many variants where the differences aren't important.
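The flow described above can be sketched in a few lines. This is a toy illustration, not the authors' implementation: the random linear maps stand in for neural networks, the sizes `D_IN`, `D_REP`, and `D_Z` are made up, and the L1 distance is only one possible choice of comparison.

```python
import random

def linear(weights, vec):
    """Apply a dense layer (a list of weight rows) to a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
D_IN, D_REP, D_Z = 8, 4, 2                    # hypothetical toy sizes

enc_x = rand_matrix(D_REP, D_IN, rng)         # encoder for x
enc_y = rand_matrix(D_REP, D_IN, rng)         # encoder for y (may differ from enc_x)
pred = rand_matrix(D_REP, D_REP + D_Z, rng)   # predictor sees Enc(x) and z

def jepa_loss(x, y, z):
    s_x = linear(enc_x, x)              # Enc(x)
    s_y = linear(enc_y, y)              # Enc(y), the prediction target
    s_y_hat = linear(pred, s_x + z)     # prediction from [Enc(x), z]
    # The comparison happens between representations, never between pixels.
    return sum(abs(a - b) for a, b in zip(s_y_hat, s_y)) / D_REP

x = [rng.random() for _ in range(D_IN)]
y = [rng.random() for _ in range(D_IN)]
z = [1.0, 0.0]                          # latent variable, e.g. a chosen action
loss = jepa_loss(x, y, z)
print(f"representation-space loss: {loss:.3f}")
```

Because the loss only involves `D_REP` numbers per input, the predictor never has to account for details of y that the encoder chose to discard.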

JEPA is not a generative model—it can't easily predict y from x. JEPA has several variants: Hierarchical JEPA (H-JEPA, also from the original paper), Image-based JEPA (I-JEPA), Video-JEPA (V-JEPA), and its recent variant Video JEPA with Variance-Covariance Regularization (VJ-VCR)—reminiscent of VICReg.

Technical Details of V-JEPA

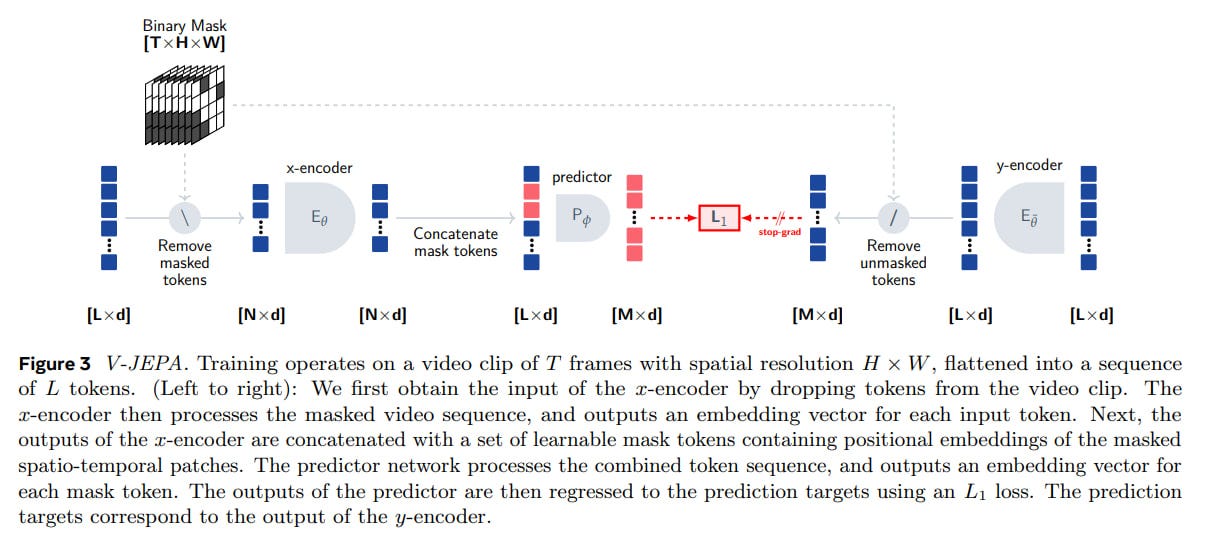

The current work investigates V-JEPA, which extends the model to work with videos and predict masked parts of frames. In such a model, one can test the learning of intuitive physics through a violation-of-expectation framework, measuring the discrepancy between prediction and actual video by quantifying the resulting "surprise." This is similar to how this ability is evaluated in living beings (for example, they tend to gaze longer at unexpected outcomes).

Like the original JEPA, V-JEPA includes a neural network encoder and predictor. The encoder builds a representation of the video, while the predictor forecasts the representation of artificially masked parts of the video.

In V-JEPA, the input video (with dimensions T×H×W, that is, T frames of size H×W) is flattened into a sequence of L tokens. A binary mask of dimension T×H×W marks what stays visible and what is hidden; the masked tokens are removed from the sequence, leaving N tokens. The encoder generates representations for these N visible tokens. The removed positions are then filled back in with learnable mask-token embeddings plus positional encodings. From this combined sequence the predictor generates an embedding for each masked token, and finally an L1 loss is calculated between the predicted embeddings and the encodings of the actual masked content. The encoder for y is an exponential moving average (EMA) of the encoder for x.
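A minimal sketch of three pieces of this pipeline, under loud assumptions: tokens are tiny hand-written vectors, the "predictor" just outputs zeros, the raw tokens stand in for the target encoder's outputs, and the momentum value is a placeholder rather than the paper's setting. The helper names are hypothetical.

```python
def split_by_mask(tokens, hidden):
    """Drop hidden tokens; return visible tokens and the hidden positions."""
    visible = [tok for tok, h in zip(tokens, hidden) if not h]
    hidden_positions = [i for i, h in enumerate(hidden) if h]
    return visible, hidden_positions

def l1_loss(predicted, target):
    """Mean absolute difference over all embedding components."""
    diffs = [abs(p - t) for pv, tv in zip(predicted, target) for p, t in zip(pv, tv)]
    return sum(diffs) / len(diffs)

def ema_update(target_weights, online_weights, momentum):
    """Move the target (y) encoder weights slowly toward the online (x) encoder."""
    return [momentum * t + (1.0 - momentum) * o
            for t, o in zip(target_weights, online_weights)]

# L = 6 tokens, two of them hidden, so N = 4 tokens reach the encoder.
tokens = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8], [0.9, 1.0], [1.1, 1.2]]
hidden = [False, True, False, False, True, False]
visible, hidden_positions = split_by_mask(tokens, hidden)

targets = [tokens[i] for i in hidden_positions]        # stand-in for target-encoder outputs
predictions = [[0.0, 0.0] for _ in hidden_positions]   # stand-in for predictor outputs
loss = l1_loss(predictions, targets)

new_target = ema_update([1.0, 1.0], [0.0, 2.0], momentum=0.9)  # placeholder momentum
print(len(visible), hidden_positions, round(loss, 2), new_target)
```

The loss is computed only at the hidden positions, which is why the predictor needs the positional encodings: they tell it where in the video each mask token sits.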

All training happens in a self-supervised learning regime. After training, the encoder and predictor can be used to study the model's understanding of the world. When processing a video, the model encodes the frames it has observed and predicts the representations of future frames. The prediction error relative to the representations of the actual frames is recorded as the surprise. This makes it possible to experiment with how many previous frames are used for the prediction (memory) and with the frame rate (smoothness of movements).
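The sliding-window idea can be illustrated with a deliberately simple stand-in. Here each "frame" is a single number (an object's position), the hypothetical `constant_velocity` function plays the role of the trained predictor, and `context_len` and `stride` correspond to the memory and frame-rate knobs. None of this is the authors' code.

```python
def surprise_curve(frames, predict_next, context_len):
    """Predict each frame from the previous context_len frames; record the error."""
    surprises = []
    for t in range(context_len, len(frames)):
        context = frames[t - context_len:t]
        predicted = predict_next(context)
        surprises.append(abs(predicted - frames[t]))
    return surprises

def constant_velocity(context):
    """Toy predictor: the object keeps moving as in the last two frames."""
    return context[-1] + (context[-1] - context[-2])

def subsample(frames, stride):
    """Lower the frame rate by keeping every stride-th frame."""
    return frames[::stride]

possible = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
impossible = [0.0, 1.0, 2.0, 3.0, 0.0, 1.0]   # the object "teleports" at frame 4

print(surprise_curve(possible, constant_velocity, context_len=2))
print(surprise_curve(impossible, constant_velocity, context_len=2))
print(surprise_curve(subsample(possible, 2), constant_velocity, context_len=2))
```

In the toy, the possible video produces zero surprise throughout, while the impossible one produces a spike at and right after the teleport.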

Training Data and Model Architecture

In this work, V-JEPA is pretrained on a mixture of datasets called VideoMix2M, which includes:

Kinetics710 (650k videos of 10 seconds each)

SomethingSomething-v2 (200k clips of several seconds each)

HowTo100M (1.2M videos averaging 6.5 minutes each—equivalent to 15 years of video)
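As a quick sanity check on these figures, the arithmetic below uses only the numbers quoted above. The SomethingSomething-v2 duration is left out because the text only says "several seconds".

```python
kinetics_videos = 650_000
ssv2_clips = 200_000
howto_videos = 1_200_000

# Total number of videos: roughly two million, matching the "2M" in the name.
total_videos = kinetics_videos + ssv2_clips + howto_videos

# HowTo100M duration: 1.2M videos at 6.5 minutes each.
howto_minutes = howto_videos * 6.5
howto_years = howto_minutes / 60 / 24 / 365

# Kinetics710 duration: 650k videos at 10 seconds each.
kinetics_hours = kinetics_videos * 10 / 3600

print(f"total videos: {total_videos:,}")
print(f"HowTo100M: about {howto_years:.1f} years of video")
print(f"Kinetics710: about {kinetics_hours:,.0f} hours of video")
```

HowTo100M dominates the mixture by duration: it comes out just under 15 years, against roughly 1,800 hours for Kinetics710.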

The encoders are Vision Transformers (ViT), taking as input 3 seconds of video in the form of 16 frames (5.33 fps) at a resolution of 224x224. The researchers experimented with ViT-B/L/H models. The predictor is also ViT-like, with 12 blocks and a dimension of 384.
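To get a feel for the input size, the frame rate and token count can be worked out directly. The frame count, clip length, and resolution come from the text above; the patch size of 16×16 pixels spanning 2 frames is an assumption about the tokenizer that is not stated here, so the resulting token count should be read as illustrative.

```python
num_frames = 16
clip_seconds = 3
height = width = 224

fps = num_frames / clip_seconds          # effective sampling rate

# Assumed tokenizer geometry (not given in the text above).
patch_size = 16      # pixels per patch side
tubelet_frames = 2   # frames covered by one token

tokens_per_frame_group = (height // patch_size) * (width // patch_size)
num_tokens = (num_frames // tubelet_frames) * tokens_per_frame_group

print(f"{fps:.2f} fps, {num_tokens} tokens per clip")
```

Under these assumptions a clip becomes 8 × 14 × 14 = 1568 tokens, which is the sequence length L that the encoder and predictor operate on before masking.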

Evaluating Intuitive Physics Capabilities

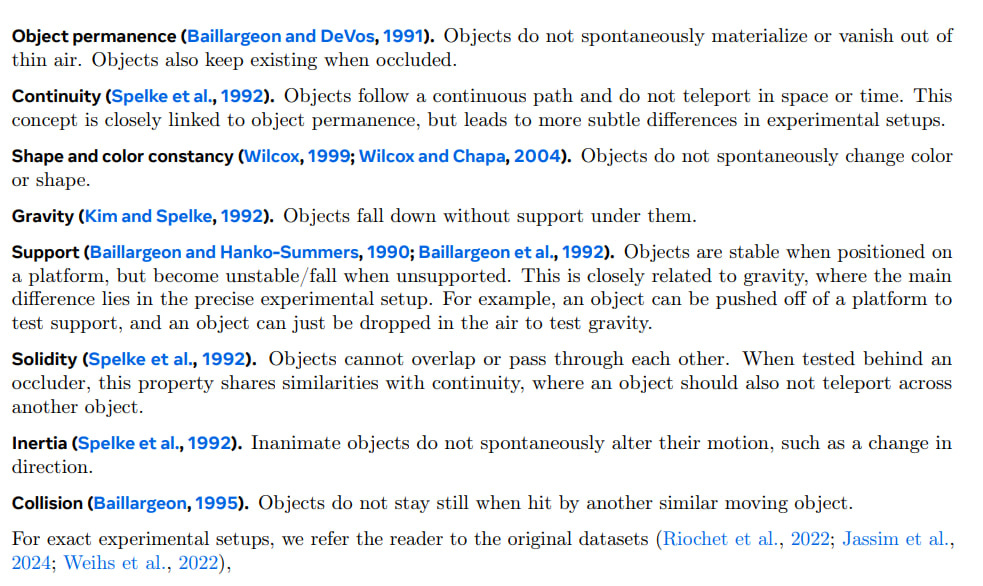

The model's intuitive physics abilities were evaluated on IntPhys, GRASP, and InfLevel-lab datasets. This combination tests object permanence, continuity, constancy of shape and color, gravity, support, solidity, inertia, and collisions.

V-JEPA was compared with other video models from two classes:

Pixel-space video prediction models (VideoMAEv2)

Multimodal LLMs (Qwen2-VL-7B, Gemini 1.5 Pro)

They also compared against untrained models (JEPA components with random initialization).

Results: Can AI Learn Physics by Watching Videos?

Performance was evaluated on pairwise classification, where the model had to identify the physically impossible video in a pair. The model's "surprise" (essentially the L1 prediction error) was computed for each video, and the video with the higher surprise was taken to be the impossible one. V-JEPA consistently outperformed untrained models on all three datasets, demonstrating that prediction in a learned representation space is sufficient for developing an understanding of intuitive physics. Notably, this was achieved without any predefined abstractions and without knowledge of the benchmarks during pretraining or method development.
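The pairwise protocol reduces to a simple comparison. In this sketch the surprise values are invented for illustration, and `pairwise_accuracy` is a hypothetical helper name; a model with no physical understanding would be expected to land near 0.5.

```python
def pairwise_accuracy(pairs):
    """pairs: list of (surprise_possible, surprise_impossible) for matched videos.

    A pair counts as correct when the impossible video is the more surprising one.
    """
    correct = sum(1 for s_possible, s_impossible in pairs if s_impossible > s_possible)
    return correct / len(pairs)

# Made-up surprise values for four video pairs.
pairs = [(0.10, 0.25), (0.30, 0.28), (0.12, 0.40), (0.20, 0.21)]
accuracy = pairwise_accuracy(pairs)
print(f"pairwise accuracy: {accuracy:.2f}")   # chance level is 0.50
```

Because both videos in a pair show nearly the same scene, any surprise caused by scene complexity affects both sides roughly equally and cancels out in the comparison.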

Interestingly, VideoMAEv2, Qwen2-VL-7B, and Gemini 1.5 Pro produced results not significantly better than randomly initialized networks. This doesn't mean they're incapable of learning such tasks, but it shows that the challenge is difficult even for frontier models (it would be interesting to see results from models of spring 2025).

The researchers examined results for individual capabilities using V-JEPA with a ViT-L architecture (not the largest variant) trained on HowTo100M. They ran a two-sample, one-tailed Welch's t-test to compare its performance against untrained models. The difference was statistically significant for many properties, but not all: for color constancy, solidity, collisions, and (on one dataset) gravity it was not. Object permanence, continuity, shape constancy, support, and inertia, however, were handled well.
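For readers unfamiliar with Welch's t-test, the statistic and its degrees of freedom can be computed with the standard library alone. The accuracy values below are invented, and this sketch stops short of the p-value, which requires the t distribution; in practice one would likely use `scipy.stats.ttest_ind(a, b, equal_var=False, alternative="greater")`.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Unlike Student's t-test, the two samples may have different variances.
    """
    va = variance(a) / len(a)   # squared standard error of sample a
    vb = variance(b) / len(b)   # squared standard error of sample b
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Made-up accuracies for several pretrained and untrained models.
trained = [0.90, 0.88, 0.93, 0.91, 0.89]
untrained = [0.52, 0.47, 0.55, 0.49, 0.50]

t_stat, dof = welch_t(trained, untrained)
print(f"t = {t_stat:.2f}, df = {dof:.2f}")
```

The test is one-tailed because the question is directional: does the pretrained model score higher than the untrained ones, not merely differently.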

There was also a comparison with human participants recruited through Mechanical Turk on the private IntPhys test set, this time using a larger ViT-H pretrained on VideoMix2M. V-JEPA's performance was similar to or higher than human performance. The details about the human participants and tasks are not fully explained, though; for those, one would need to dig into the paper that introduced the dataset.

The researchers found that when evaluating single videos in isolation, rather than comparing the two similar videos of a pair, using the maximum surprise works better than averaging surprise across all frames, as this eliminates the contribution of scene complexity.
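A constructed toy case shows why the two aggregations can disagree. The surprise curves below are invented: one possible video of a busy scene with a steady moderate prediction error, and one impossible video of a simple scene with a single spike. This only illustrates the aggregation choice, not the paper's measurements.

```python
from statistics import mean

# Per-window surprise values (made up for illustration).
busy_but_possible = [0.5, 0.5, 0.5, 0.5]       # complex scene, nothing impossible
simple_but_impossible = [0.1, 0.1, 0.9, 0.1]   # simple scene, one violation

mean_scores = (mean(busy_but_possible), mean(simple_but_impossible))
max_scores = (max(busy_but_possible), max(simple_but_impossible))

# Averaging ranks the busy scene as more surprising than the violation...
mean_flags_impossible = mean_scores[1] > mean_scores[0]
# ...whereas the maximum picks out the spike caused by the violation.
max_flags_impossible = max_scores[1] > max_scores[0]

print(f"mean: {mean_scores}, flags impossible video: {mean_flags_impossible}")
print(f"max:  {max_scores}, flags impossible video: {max_flags_impossible}")
```

In this toy, the average is dominated by the baseline difficulty of the scene, while the maximum responds to the single moment where the prediction breaks down.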

They conducted ablations to study the impact of training data, model size, and pretraining prediction tasks:

Training on datasets that focus on different kinds of activity predictably affects performance. For example, when training only on the motion-centric SomethingSomething-v2 (SSv2) dataset, shape constancy is learned rather poorly.

Larger models generally perform better, but the difference isn't particularly large given the confidence intervals.

For pretraining tasks, they tried three variants:

Block Masking: Masks a specific piece of the image in each frame

Causal Block Masking: In addition to masking part of the image, also masks the last 25% of the video

Random Masking: Masks random pixels in each frame
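The three strategies can be sketched as boolean T×H×W grids, where True means hidden. This is a simplified illustration: the grid cells stand in for whatever unit is actually masked, and the block position, grid sizes, and masking ratio are hypothetical values rather than the paper's settings. Only the 25% tail comes from the description above.

```python
import random

def block_mask(T, H, W, top, left, bh, bw):
    """Hide the same spatial block in every frame."""
    return [[[top <= r < top + bh and left <= c < left + bw for c in range(W)]
             for r in range(H)] for _ in range(T)]

def causal_block_mask(T, H, W, top, left, bh, bw, tail_fraction=0.25):
    """Block mask plus the last tail_fraction of frames hidden entirely."""
    mask = block_mask(T, H, W, top, left, bh, bw)
    first_hidden = T - int(T * tail_fraction)
    for t in range(first_hidden, T):
        mask[t] = [[True] * W for _ in range(H)]
    return mask

def random_mask(T, H, W, ratio, rng):
    """Hide a random subset of positions, drawn independently for each frame."""
    k = int(H * W * ratio)
    mask = []
    for _ in range(T):
        hidden = set(rng.sample(range(H * W), k))
        mask.append([[r * W + c in hidden for c in range(W)] for r in range(H)])
    return mask

def masked_fraction(mask):
    cells = [m for frame in mask for row in frame for m in row]
    return sum(cells) / len(cells)

rng = random.Random(0)
block = block_mask(8, 4, 4, top=1, left=1, bh=2, bw=2)
causal = causal_block_mask(8, 4, 4, top=1, left=1, bh=2, bw=2)
rand = random_mask(8, 4, 4, ratio=0.5, rng=rng)
print(masked_fraction(block), masked_fraction(causal), masked_fraction(rand))
```

Only the causal variant forces the model to extrapolate forward in time; with the other two, every frame still has visible context around the hidden region.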

Surprisingly, the difference in performance wasn't very significant. The transition to Random Masking reduces quality in video classification by 20 points but only by 5 points on IntPhys. Causal Block Masking works worse than simple Block Masking, even though it should directly help with prediction (which is necessary for intuitive physics). This is interesting, suggesting that a specially selected objective for intuitive physics isn't particularly necessary.

Not all properties of intuitive physics are learned effectively, but this could be due to limitations in the datasets. It's also possible that interactions between objects require higher-level representations, and H-JEPA might help with this. Additionally, an agent might need to interactively engage with the world to learn interactions, as the current setting positions JEPA more as a passive observer without any ability to manipulate its environment. It would be interesting to see if someone has already integrated JEPA into something like Dreamer (now published in Nature).

In any case, this is exciting progress that also helps us better understand JEPA and its potential applications!
