Gödel Agent

A Self-Referential Agent Framework for Recursive Self-Improvement

Authors: Xunjian Yin, Xinyi Wang, Liangming Pan, Xiaojun Wan, William Yang Wang

Paper: https://arxiv.org/abs/2410.04444

Repo: https://github.com/Arvid-pku/Godel_Agent

Why did the Gödel Agent take so long to plan its road trip in its Gödel Machine?

Because its navigation system kept recursively self-improving, proving it could find a better route, but never actually getting to the destination!

This fascinating paper explores evolving agents inspired by Schmidhuber’s 2003 Gödel Machine (https://arxiv.org/abs/cs/0309048). The Gödel Machine tried to formally prove that a change would lead to an improvement (which could take practically forever), while this agent uses empirical feedback from its environment instead of formal proofs and asks a Large Language Model (LLM) to improve its code based on that feedback. It feels somewhat like AutoGPT, but with the added ability to rewrite its own code.

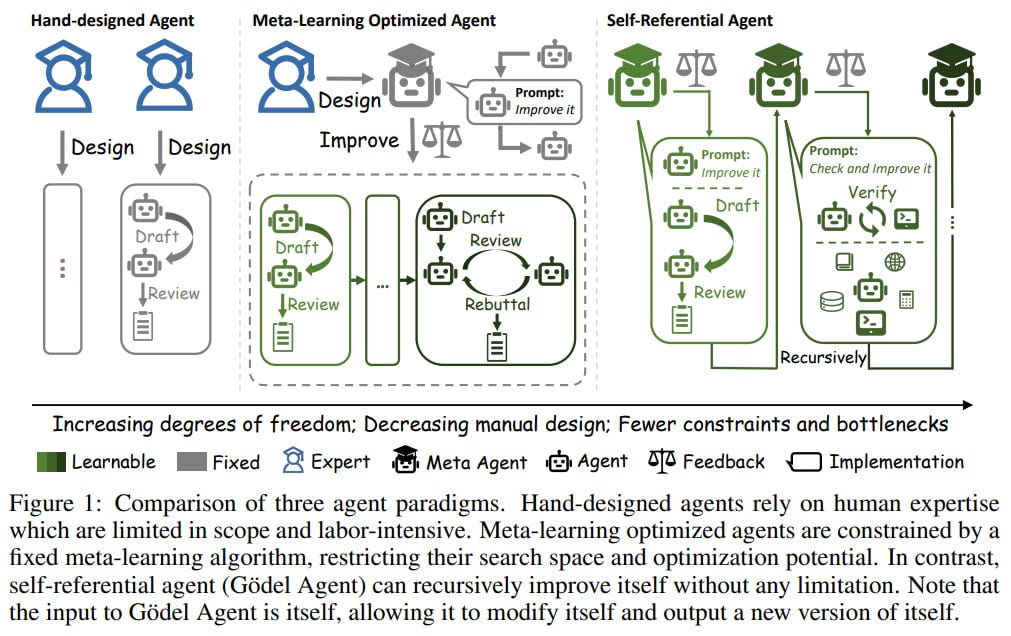

The core idea is that agents come in different types. The simplest, Hand-Designed Agents, have the lowest degree of freedom and follow the same policy all the time, regardless of feedback from the environment. Others, known as Meta-Learning Optimized Agents, have a fixed meta-learning algorithm that updates their policy based on environmental feedback. Then, there’s the self-referential Gödel Agent, which can improve itself without constraints. Technically, this means it can update both its policy and its meta-learning algorithm.

To set up such an agent, you need to define initial policies and a meta-learning algorithm. The starting policy uses an LLM with a Chain-of-Thought prompt, and the meta-learning algorithm recursively requests the LLM to rewrite its entire codebase based on feedback from the environment (task success rate). The agent can even rewrite the part of its code responsible for rewriting code, making it truly self-referential.

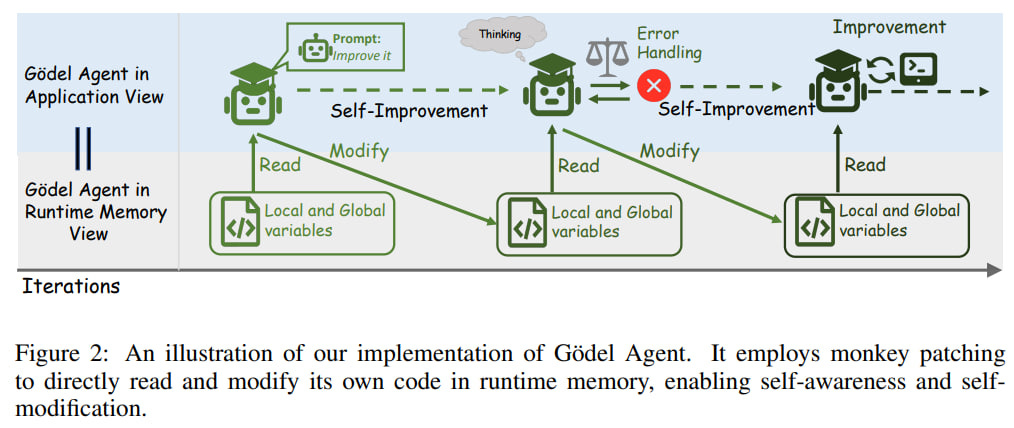

The agent is essentially an instance of a specific Python class (Agent). It has access to the Python environment’s memory (local and global variables, functions, and modules) and can dynamically modify everything, including its code, using monkey patching. This is how it evolves, as far as I understand.

To enhance the convergence of its optimization process, the agent is given additional tools: 1) thinking before acting (which seems similar to ReAct), 2) error handling (a recovery mechanism for when the LLM introduces bugs into the code), 3) code execution (Python and bash), and 4) LLM API calls. The first two tools tend to be the most beneficial.

Some online reviews suggest that the agent first checks if new changes lead to improvement and only implements new code if they do. It can also backtrack to a previous solution if the outcome worsens. However, this isn’t explicitly described in the paper. In fact, there are examples where the results initially got worse, and the agent eventually recovered. I only skimmed through the code, but it seems like the agent relies solely on its history. I could be wrong, though, so if anyone dives deeper and finds something interesting, let me know. It’s worth mentioning that an increasing number of reviews seem to be generated by NotebookLM or GPT, and they don’t always match reality. My own NotebookLM experiment is here, it also invented some new things absent in the paper. But this tool is still great! 🙂

The benchmarks tested include DROP, MGSM, MMLU, and GPQA. Baselines come from the group of Hand-Designed Agents (CoT, CoT-SC, Self-Refine, LLM Debate, Step-back-Abs, Quality-Diversity, Role Assignment) and Meta-Learning Optimized Agents (Meta Agent Search).

The default Gödel agent is restricted; it’s not allowed to change the model (gpt-3.5-turbo) and lacks internet access. From what I gathered, gpt-4o is used for self-improvement, while gpt-3.5-turbo is used to evaluate the optimized policy. There is also an unrestricted variant, which has access to everything.

The restricted Gödel agent outperformed all others, significantly in some cases (DROP, MGSM) and only slightly in others (GPQA). The appendix includes code for the discovered policies, making it possible to study how far it evolved from the initial CoT. The unrestricted agent performed even better, though often because it switched to a more powerful model.

The complete evolutionary process across four benchmarks with 30 recursive self-improvements costs $15 (mainly due to the growing memory needed for history tracking). In comparison, the competing Meta Agent Search required $300.

A separate experiment focused on the Game of 24. After six unsuccessful optimization attempts, the Gödel agent switched from an LLM-based method to a search-based method, rewriting that part of the code and achieving 100% accuracy. In other runs, it continued using the LLM but made various improvements, such as adding verification steps and testing tasks with additional data. It also added a library for better error tracing, improved logging, and removed unnecessary code.

The researchers also experimented with different initial policies, not just CoT. Interestingly, a stronger initial policy led to better convergence. However, the agent with CoT didn’t outperform ToT after all improvements, suggesting that it’s not the best at coming up with innovations.

The authors have big plans for improvements in various areas (see Section 6.1), including implementing multiple agents.

It’s an intriguing topic—there’s a lot you could evolve and recursively improve...