DolphinGemma

We'd like to know more...

Authors: Denise Herzing, Thad Starner

Blog: https://blog.google/technology/ai/dolphingemma/

Project website: https://www.wilddolphinproject.org/

Research paper: None available

Model: Not yet released (promised for this summer, apparently still in development)

Code: Not available

Talking to animals

I've been wanting to analyze DolphinGemma for a while now: a collaborative project between Google, Georgia Tech, and the Wild Dolphin Project (WDP) built around a model (an LLM) trained on dolphin sounds.

Important note: Don't confuse this with Dolphin Gemma/Llama/Qwen/Mistral from the Dolphin project (https://huggingface.co/dphn, https://dphn.ai/) and Cognitive Computations. Those are a family of uncensored (https://erichartford.com/uncensored-models) conversational instruction-tuned assistants — just general-purpose text models.

This project has interesting parallels with Project CETI, which studies sperm whales, but the two are not the same. There are other fascinating animal-communication projects as well. I particularly want to highlight the impressive Earth Species Project, which deserves a separate look: they already have their own bioacoustic model, NatureLM-Audio (https://arxiv.org/abs/2411.07186), and other tools.

Related paper from Project CETI: "Toward understanding the communication in sperm whales"

Authors: Jacob Andreas, Gašper Beguš, Michael M. Bronstein, Roee Diamant, Denley Delaney, Shane Gero, Shafi Goldwasser, David F. Gruber, Sarah de Haas, Peter Malkin, Nikolay Pavlov, Roger Payne, Giovanni Petri, Daniela Rus, Pratyusha Sharma, Dan Tchernov, Pernille Tønnesen, Antonio Torralba, Daniel Vogt, Robert J. Wood

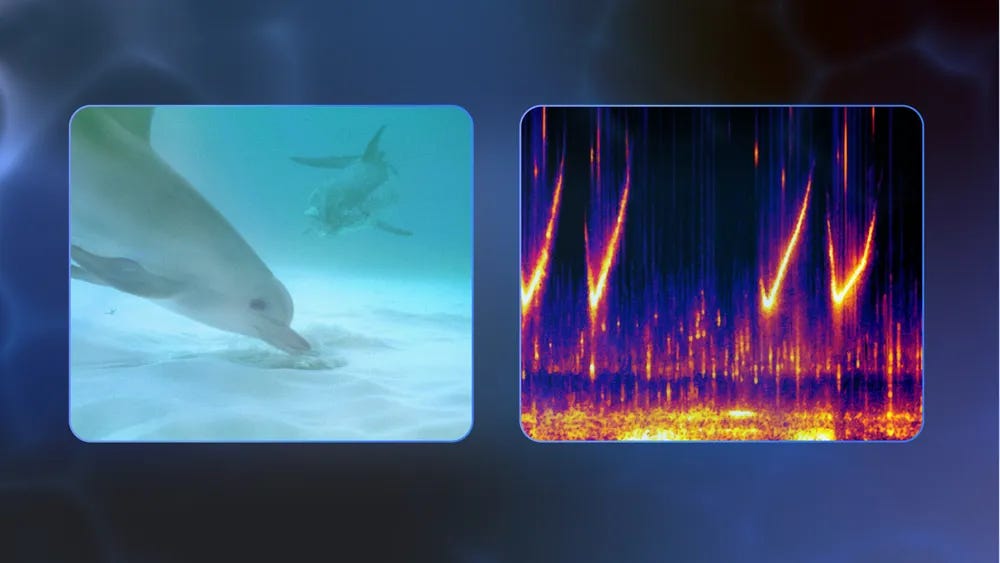

WDP has been studying dolphins since 1985, focusing on Atlantic spotted dolphins (Stenella frontalis) in the Bahamas region. The research is conducted in natural environments using non-invasive methods. Over the years, they've accumulated a dataset of underwater video and audio recordings, annotated with specific dolphin identities, their life histories, and observed behaviors.

From what I understand, the dataset contains not just sound recordings but also contextual information about the situations and behaviors of specific dolphins, for example mother-calf reunions, fights, and shark chasing. The project's goal is to understand the structure of dolphin communication and, potentially, its meaning. The project website has more detail, including audio examples. I've heard that dolphins have other communication methods too, but let's not discuss that for now; we don't need those kinds of LLMs!

WDP also has a separate track for two-way communication: the CHAT system (Cetacean Hearing Augmentation Telemetry). CHAT can generate novel synthetic sounds, distinct from natural dolphin vocalizations, and associate them with objects dolphins find appealing. The hope is that curious dolphins will learn to imitate these sounds to request the objects from researchers.

CHAT needs to work reliably (detecting the target sounds amid ocean noise) and quickly (so researchers with decoder devices can recognize what dolphins want and provide it right away, reinforcing the association). It ran in real time on an older Pixel 6, which is convenient: no special, expensive equipment is needed.
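The post doesn't say how CHAT recognizes its synthetic sounds in noise, so the following is only a generic sketch of one classic approach: a normalized cross-correlation (matched-filter) detector, run on a made-up chirp buried in synthetic noise. The chirp shape, sample rate, noise level, and threshold are all hypothetical, and this is not CHAT's actual algorithm.

```python
import numpy as np

def normalized_xcorr(signal, template):
    """Pearson correlation between `template` and every same-length window of `signal`."""
    n = len(template)
    t = template - template.mean()
    t = t / np.linalg.norm(t)                    # zero-mean, unit-norm template
    num = np.correlate(signal, t, mode="valid")  # sum(window * t) for each offset
    # Per-window energy around the window mean, computed with running sums.
    cs = np.concatenate(([0.0], np.cumsum(signal)))
    cs2 = np.concatenate(([0.0], np.cumsum(signal ** 2)))
    win_sum = cs[n:] - cs[:-n]
    win_sq = cs2[n:] - cs2[:-n]
    energy = np.maximum(win_sq - win_sum ** 2 / n, 1e-12)  # sum((w - mean(w))**2)
    return num / np.sqrt(energy)

def detect(signal, template, threshold=0.5):
    """Return (offset, score) of the best match if it clears `threshold`, else None."""
    scores = normalized_xcorr(signal, template)
    best = int(np.argmax(scores))
    return (best, float(scores[best])) if scores[best] >= threshold else None

# Hypothetical whistle: a 960-sample (20 ms) upward chirp from 8 kHz to 12 kHz at 48 kHz.
sr, n_samples, f0, f1 = 48_000, 960, 8_000.0, 12_000.0
dur = n_samples / sr
t = np.arange(n_samples) / sr
whistle = np.sin(2 * np.pi * (f0 * t + (f1 - f0) * t ** 2 / (2 * dur)))

rng = np.random.default_rng(0)
ocean = rng.normal(0.0, 0.5, sr // 2)          # 0.5 s of synthetic "ocean noise"
clip = ocean.copy()
clip[10_000:10_000 + n_samples] += whistle     # bury the whistle at sample 10,000

print(detect(clip, whistle))   # a match close to offset 10,000
print(detect(ocean, whistle))  # None: no window clears the threshold
```

Normalizing by each window's energy keeps the score in [-1, 1] regardless of loudness, which is what makes a single fixed threshold usable across quiet and noisy conditions.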

Using DolphinGemma with its next-token prediction could theoretically accelerate the process of understanding what dolphins are trying to say and speed up communication.
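Mechanically, next-token prediction is a simple loop: the model maps a prefix of tokens to a probability distribution over the next token, one token is chosen (greedily or by sampling), appended, and the loop repeats. DolphinGemma's interface isn't public, so this toy sketch substitutes a bigram count table (a hypothetical stand-in, far weaker than a transformer) purely to show the loop.

```python
import numpy as np

def fit_bigram(tokens, vocab_size, alpha=1.0):
    """Toy stand-in for a language model: P(next | current) from add-alpha bigram counts."""
    counts = np.full((vocab_size, vocab_size), alpha)
    for cur, nxt in zip(tokens[:-1], tokens[1:]):
        counts[cur, nxt] += 1
    return counts / counts.sum(axis=1, keepdims=True)

def generate(probs, prompt, n_new, temperature=1.0, seed=0):
    """Autoregressive loop: predict a distribution, pick a token, append, repeat."""
    rng = np.random.default_rng(seed)
    out = list(prompt)
    for _ in range(n_new):
        p = probs[out[-1]]  # a transformer would condition on the whole prefix `out`
        if temperature == 0:
            nxt = int(np.argmax(p))                        # greedy decoding
        else:
            logits = np.log(p) / temperature
            q = np.exp(logits - logits.max())
            nxt = int(rng.choice(len(q), p=q / q.sum()))   # temperature sampling
        out.append(nxt)
    return out

# Hypothetical audio-token stream that keeps repeating the motif 3 -> 7 -> 1.
train = [3, 7, 1] * 50
model = fit_bigram(train, vocab_size=8)
print(generate(model, prompt=[3], n_new=6, temperature=0))  # [3, 7, 1, 3, 7, 1, 3]
print(generate(model, prompt=[3], n_new=6, temperature=1.0))
```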

What do we know about DolphinGemma?

Unfortunately, there are very few details about the work and its practical results. From my perspective, this looks more like marketing material than a scientific paper (which doesn't exist yet). Project CETI and the Earth Species Project are much more scientific (and open) in this regard. What exists about DolphinGemma is mostly blog posts and social media content; I couldn't find any papers, the model itself, or any code, which is disappointing. I understand this might be early-stage research, but I had hoped for more transparency. Let's try to analyze what is known.

The model's goal is to take dolphin vocalizations as input and generate new sound sequences that are hopefully dolphin-like.

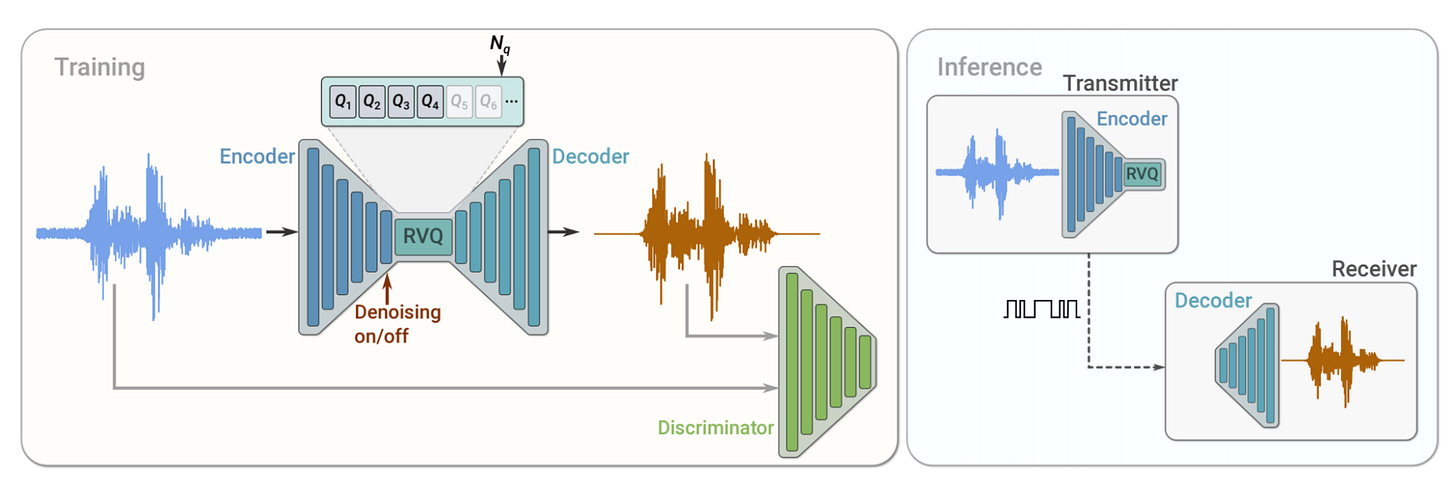

Audio in, audio out, but via tokens produced by the SoundStream tokenizer (https://arxiv.org/abs/2107.03312, https://research.google/blog/soundstream-an-end-to-end-neural-audio-codec/), Google's work from 2021. SoundStream is essentially a trainable end-to-end neural codec consisting of an encoder, a decoder, and a quantizer in the bottleneck between them.
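SoundStream's bottleneck is a residual vector quantizer (RVQ): each stage picks the codeword nearest to whatever residual the previous stages left, so every encoder frame becomes a short tuple of integer tokens. In SoundStream the codebooks are learned jointly with the encoder and decoder; as a simplification, this sketch fits each stage with k-means on the previous stage's residual, uses random vectors in place of encoder output, and skips the encoder and decoder networks. The codebook size and number of stages are illustrative assumptions.

```python
import numpy as np

def nearest(vectors, codebook):
    """Index of the closest codeword (squared Euclidean distance) for each vector."""
    d = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return d.argmin(axis=1)

def kmeans(data, k, iters, rng):
    centroids = data[rng.choice(len(data), size=k, replace=False)].copy()
    for _ in range(iters):
        assign = nearest(data, centroids)
        for j in range(k):
            members = data[assign == j]
            if len(members):                 # leave empty clusters unchanged
                centroids[j] = members.mean(axis=0)
    return centroids

def train_rvq(data, n_stages, k, iters=10, seed=0):
    """Greedy RVQ fitting: each stage runs k-means on the previous stage's residual."""
    rng = np.random.default_rng(seed)
    residual, codebooks = data.copy(), []
    for _ in range(n_stages):
        cb = kmeans(residual, k, iters, rng)
        residual = residual - cb[nearest(residual, cb)]
        codebooks.append(cb)
    return codebooks

def rvq_encode(x, codebooks):
    residual, tokens = x.copy(), []
    for cb in codebooks:
        idx = nearest(residual, cb)
        tokens.append(idx)
        residual = residual - cb[idx]
    return np.stack(tokens, axis=1)          # (frames, stages) integer tokens

def rvq_decode(tokens, codebooks, n_stages=None):
    """Sum the chosen codeword from the first `n_stages` stages."""
    n = len(codebooks) if n_stages is None else n_stages
    return sum(codebooks[s][tokens[:, s]] for s in range(n))

rng = np.random.default_rng(1)
frames = rng.normal(size=(2000, 8))          # stand-in for encoder output frames
books = train_rvq(frames, n_stages=4, k=16)
tokens = rvq_encode(frames, books)
for s in range(1, 5):
    err = np.mean((frames - rvq_decode(tokens, books, s)) ** 2)
    print(f"{s} stage(s): MSE {err:.3f}")
```

Each extra stage adds one token per frame and refines the reconstruction, which is how an RVQ codec trades bitrate for quality by using more or fewer stages.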

During training, it combines two losses: a reconstruction loss and an adversarial loss, the latter so that a discriminator cannot distinguish reconstructed sound from the original. After training, the encoder plus quantizer can be used to produce tokens, and the decoder to turn them back into sound. I'm not sure whether Google published this codec; I don't see it readily available, though I do see various reimplementations online. Audio codec experts, please correct me. Also, is there something more modern and better? Surely something has appeared in four years.
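To make the two training signals concrete, here is a simplified numpy sketch in the SoundStream spirit: an L1 distance between magnitude spectrograms at several STFT sizes (reconstruction) plus a hinge-style adversarial term computed from discriminator logits. The STFT sizes, the weight `lambda_adv`, and the plain-numpy STFT are assumptions for illustration; the actual recipe differs in details (it also has a feature-matching term, for instance) and uses trained networks to produce the logits.

```python
import numpy as np

def stft_mag(x, n_fft):
    """Magnitude STFT with a Hann window and 75% overlap (plain numpy)."""
    hop = n_fft // 4
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=-1))

def multiscale_spectral_l1(x, x_hat, sizes=(256, 512, 1024)):
    """Reconstruction term: mean L1 gap between magnitude spectrograms at several resolutions."""
    return sum(np.mean(np.abs(stft_mag(x, n) - stft_mag(x_hat, n))) for n in sizes) / len(sizes)

def hinge_generator_loss(fake_logits):
    """Adversarial term for the generator: penalize logits on reconstructions below 1."""
    return float(np.mean(np.maximum(0.0, 1.0 - fake_logits)))

def hinge_discriminator_loss(real_logits, fake_logits):
    """Discriminator side: push real logits above 1 and fake logits below -1."""
    return float(np.mean(np.maximum(0.0, 1.0 - real_logits))
                 + np.mean(np.maximum(0.0, 1.0 + fake_logits)))

def generator_loss(x, x_hat, fake_logits, lambda_adv=1.0):
    return multiscale_spectral_l1(x, x_hat) + lambda_adv * hinge_generator_loss(fake_logits)

rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 440 * np.arange(16_000) / 16_000)  # 1 s test tone at 16 kHz
close = x + 0.01 * rng.normal(size=x.shape)                # good reconstruction
far = x + 0.5 * rng.normal(size=x.shape)                   # poor reconstruction
fake_logits = np.array([0.3, -0.2, 1.5])                   # hypothetical discriminator outputs
print(generator_loss(x, close, fake_logits), generator_loss(x, far, fake_logits))
```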

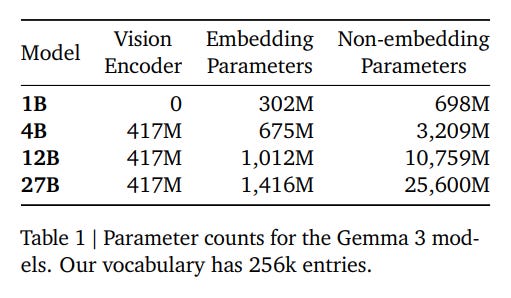

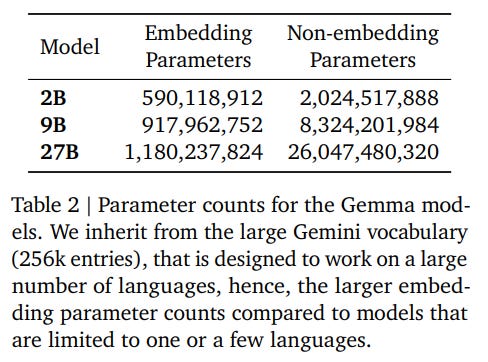

The model has 400M parameters and is designed to run locally on the Pixel phones used in the WDP project. No Gemma of this size exists, so this isn't a fine-tuned Gemma but a model built on its ideas (apparently a transformer decoder); the Gemma 2 and Gemma 3 architectures are described in their respective technical reports. In this sense, Google's messaging that the project uses Gemma models was somewhat misleading.
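As a sanity check on the 400M figure, a rough parameter count for a decoder-only transformer is easy to write down. The configuration below (24 layers, width 1024, a gated MLP of width 4096, a 4096-entry audio-token vocabulary with tied embeddings) is purely hypothetical, chosen only to show that a model of this size is plausible; DolphinGemma's real configuration hasn't been published.

```python
def decoder_params(n_layers, d_model, d_ff, vocab, tied_embeddings=True):
    """Approximate parameter count of a decoder-only transformer (no biases)."""
    # Assumes full multi-head attention; grouped-query attention (as in Gemma 2 and 3)
    # would shrink the K and V projections.
    attention = 4 * d_model * d_model   # Q, K, V and output projections
    mlp = 3 * d_model * d_ff            # gated MLP: gate, up and down projections
    norms = 2 * d_model                 # two norm scale vectors per layer
    per_layer = attention + mlp + norms
    embeddings = vocab * d_model * (1 if tied_embeddings else 2)
    return n_layers * per_layer + embeddings + d_model  # + final norm

# Hypothetical configuration, not DolphinGemma's.
total = decoder_params(n_layers=24, d_model=1024, d_ff=4096, vocab=4096)
print(f"{total:,}")  # 406,897,664
```

With an audio-token vocabulary this small, almost all parameters sit in the transformer layers rather than the embeddings, unlike text LLMs with vocabularies of hundreds of thousands of entries.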

The dataset size is unclear. The paper "Imitation of Computer-Generated Sounds by Wild Atlantic Spotted Dolphins (Stenella frontalis)" about CHAT mentions 1319 minutes of audio recordings, but there’s no guarantee it’s the same dataset.
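For a sense of scale, 1319 minutes (about 22 hours) can be converted into a token count. The frame rate and number of quantizer stages depend entirely on how the codec is configured (and dolphin recordings likely need a higher sample rate than speech), so the values below are hypothetical: 75 frames per second (24 kHz audio with a 320-sample stride, one of the settings in the SoundStream paper) and 8 residual stages.

```python
def token_count(minutes, frames_per_second, n_stages):
    """Frames and tokens for a codec emitting `n_stages` tokens per frame."""
    seconds = minutes * 60
    frames = seconds * frames_per_second
    return frames, frames * n_stages

# Hypothetical codec settings; the real DolphinGemma setup is unknown.
frames, tokens = token_count(1319, frames_per_second=75, n_stages=8)
print(f"{frames:,} frames, {tokens:,} tokens")  # 5,935,500 frames, 47,484,000 tokens
```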

The practical results are also unclear. I managed to find a separate interview with the authors on a Scientific American podcast. There they say the model learned to generate certain vocalizations (VCM Type 3, or VCM3s) that dolphins prefer to use during two-way communication with humans, and for the authors this was something of an "aha moment." Before this, generating VCM3s apparently wasn't very successful.

That's about it. Apparently, it's still early-stage research, though the announcements had given me the impression that it was further along.

As for DolphinGemma specifically, we're waiting for more substantial announcements. Meanwhile, I'd suggest looking closely at more open projects like CETI (I already have, and will continue) and the Earth Species Project.

And really, it's high time someone trained BarkLLM. Or at the very least MeowLM. Should we organize something?