Deep Learning Legends: Ilya's List, or 90% of Everything That Matters in AI

The Legend

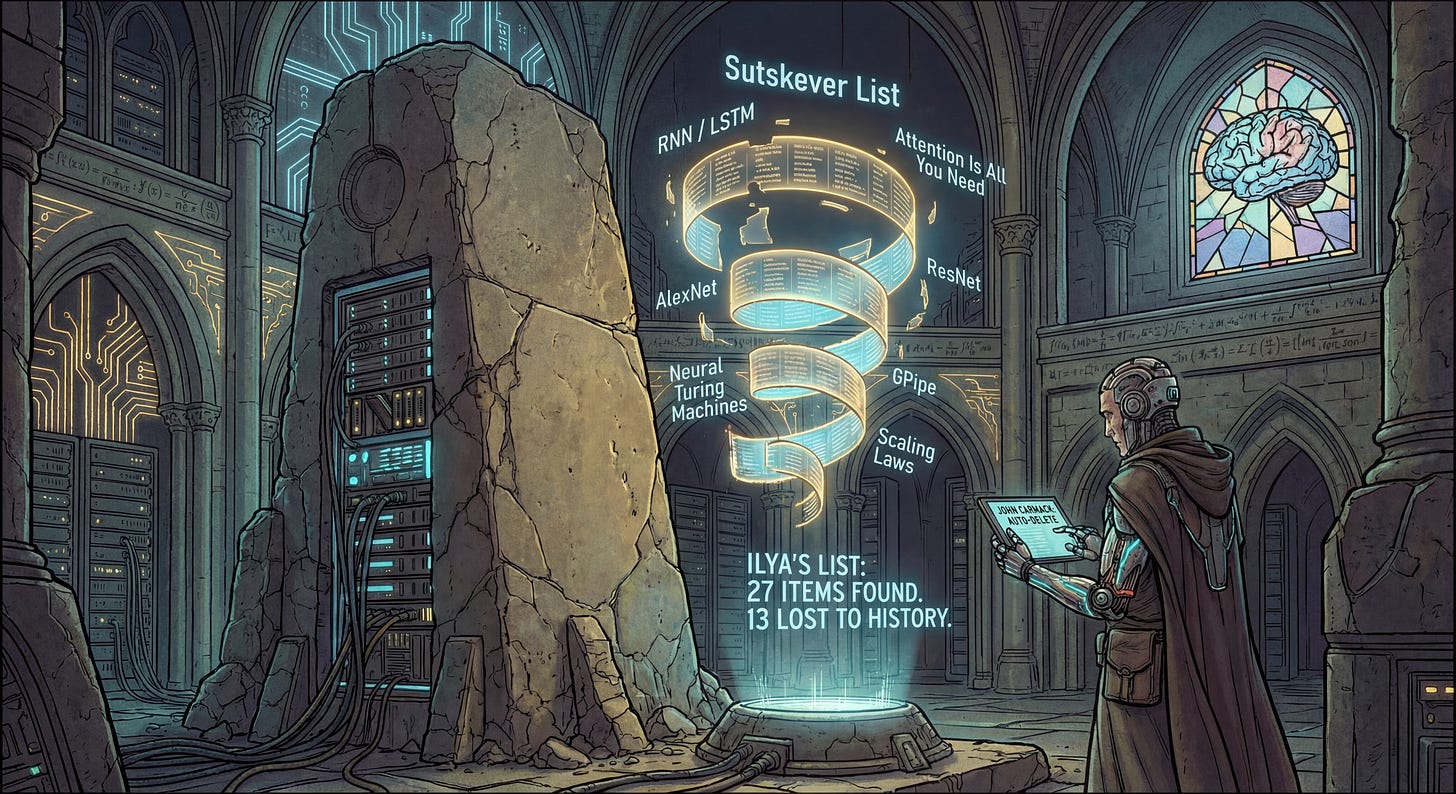

There’s a legend that John Carmack (co-founder of id Software, whom we have to thank for Wolfenstein, Doom, and Quake) once asked Ilya Sutskever what he should read to master deep learning. Sutskever gave him a list of about forty papers, saying that if you read them, you’d know 90% of everything that matters today.

“So I asked Ilya, their chief scientist, for a reading list. This is my path, my way of doing things: give me a stack of all the stuff I need to know to actually be relevant in this space. And he gave me a list of like 40 research papers and said, ‘If you really learn all of these, you’ll know 90% of what matters today.’ And I did. I plowed through all those things and it all started sorting out in my head.”

That time has passed, but the legend of the list lives on.

The problem is that the list has been lost to history. Carmack received it by email, and the email fell victim to Meta’s auto-delete policy. All he has left is a binder with printouts of many, but not all, of the papers. Neither Ilya nor OpenAI has ever published the list.

“The email including them got lost to Meta’s two-year auto-delete policy by the time I went back to look for it last year. I have a binder with a lot of them printed out, but not all of them.”

BUT.

In January 2024, a reconstructed list of 27 items surfaced online. An OpenAI researcher shared it as the list used in their onboarding, with the section on meta-learning missing.

This is the closest publicly available version of the original. The other 13 items are still shrouded in mystery.

The List

Here’s that very “Sutskever List” (27 confirmed items, with links and my additions):

🔁 RNN / LSTM / Sequence Models

The Unreasonable Effectiveness of Recurrent Neural Networks (http://karpathy.github.io/2015/05/21/rnn-effectiveness/) — A. Karpathy (blog)

Understanding LSTM Networks (https://colah.github.io/posts/2015-08-Understanding-LSTMs/) — C. Olah (blog)

Both posts are must-reads if you want to understand recurrent networks. The second does for LSTMs what Jay Alammar’s Illustrated Transformer (below) does for transformers.

Recurrent Neural Network Regularization (https://arxiv.org/abs/1409.2329) — Zaremba et al., 2014

Good, but I’d add a couple more interesting RNN works from the brilliant Graves (who will appear later with NTM):

Multi-Dimensional Recurrent Neural Networks (https://arxiv.org/abs/0705.2011) — Graves, Schmidhuber(!), 2007

Grid Long Short-Term Memory (https://arxiv.org/abs/1507.01526) — Kalchbrenner, Danihelka, Graves, 2015

Supervised Sequence Labelling with Recurrent Neural Networks (https://www.cs.toronto.edu/~graves/preprint.pdf) — Graves’ book on RNNs, also published by Springer in 2012, one of the best on advanced RNNs at the time

And from Sutskever himself, I’d add Neural GPU:

Neural GPUs Learn Algorithms (https://arxiv.org/abs/1511.08228) — Łukasz Kaiser, Ilya Sutskever, 2015. Once at NIPS 2016(?) in Barcelona, or maybe somewhere else, I approached Sutskever at the OpenAI booth, wanting to ask if he was continuing this cool work on learning algorithms, but all I could get from him was “No.” 😁

Next in the original list:

Pointer Networks (https://arxiv.org/abs/1506.03134) — Vinyals et al., 2015

Order Matters: Sequence to Sequence for Sets (https://arxiv.org/abs/1511.06391) — Vinyals et al., 2016

This is cool, exotic stuff. Few people have probably heard of Pointer Networks or Set2Set nowadays, but they were interesting work in their time. By the way, I’d add here:

HyperNetworks (https://arxiv.org/abs/1609.09106) — David Ha (now at Sakana!), 2016

Neural Turing Machines (https://arxiv.org/abs/1410.5401) — Graves et al., 2014

This is a worthy continuation of Graves’ work on RNNs, and it had an important continuation of its own, the Differentiable Neural Computer (DNC):

Hybrid computing using a neural network with dynamic external memory (https://www.nature.com/articles/nature20101) — Graves et al., 2016 (there is also a DeepMind blog post about it)
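Both NTM and DNC read memory "by content": they compare a key vector with every memory row and turn the similarities into attention weights. Below is a minimal sketch of that content lookup in plain Python, assuming cosine similarity sharpened by a key strength β and followed by a softmax, as in the NTM paper. It deliberately leaves out the rest of the addressing pipeline (NTM's interpolation, shift, and sharpening; DNC's allocation and temporal links). The memory, key, and β values are made up for illustration.

```python
import math

def cosine_similarity(u, v, eps=1e-8):
    # K(u, v) = u . v / (|u| |v|); eps guards against division by zero
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)

def content_weights(memory, key, beta):
    # Softmax over memory rows of beta * similarity; larger beta = sharper focus
    scores = [beta * cosine_similarity(key, row) for row in memory]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def read(memory, weights):
    # The read vector is the weighted sum of memory rows
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]

memory = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0],
          [0.0, 0.0, 1.0]]
w = content_weights(memory, key=[0.9, 0.1, 0.0], beta=10.0)
print([round(x, 3) for x in w])
print(read(memory, w))
```

Because every step is differentiable, the controller can learn what to look up by gradient descent, which is the whole point of these architectures.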

Relational Recurrent Neural Networks (https://arxiv.org/abs/1806.01822) — Santoro et al., 2018

Yes, another interesting topic that is now largely forgotten.

🎯 Attention / Transformers

Neural Machine Translation by Jointly Learning to Align and Translate (https://arxiv.org/abs/1409.0473) — Bahdanau et al., 2015

A must-read from Dzmitry Bahdanau: this is roughly where attention started. Though you could argue it started with NTM, or even earlier.
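The core of the Bahdanau paper is a small alignment network that scores each encoder state h_j against the previous decoder state s with e_j = v · tanh(W s + U h_j). The scores are softmaxed into weights, and the context vector is the weighted sum of encoder states. Here is a minimal plain-Python sketch of that "additive" attention. The sizes and the W, U, v values are toy assumptions; in the real model they are learned jointly with the encoder and decoder.

```python
import math

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def additive_attention(s, hs, W, U, v):
    """Bahdanau-style scores e_j = v . tanh(W s + U h_j), then softmax and context."""
    Ws = matvec(W, s)  # the decoder-state projection is shared across all positions j
    scores = []
    for h in hs:
        hidden = [math.tanh(a + b) for a, b in zip(Ws, matvec(U, h))]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    alphas = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states
    dim = len(hs[0])
    context = [sum(a * h[k] for a, h in zip(alphas, hs)) for k in range(dim)]
    return alphas, context

# Toy sizes (assumed): 2-d states, 2-d alignment layer, 3 encoder positions
s = [0.5, -0.2]
hs = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
W = [[0.3, 0.1], [-0.2, 0.4]]
U = [[0.5, -0.3], [0.2, 0.6]]
v = [1.0, -1.0]
alphas, context = additive_attention(s, hs, W, U, v)
print(alphas, context)
```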

Attention Is All You Need (https://arxiv.org/abs/1706.03762) — Vaswani et al., 2017

The Annotated Transformer (https://nlp.seas.harvard.edu/2018/04/03/attention.html) — S. Rush (blog)

I won’t be original here: the original paper is hard to read, and I personally prefer this post by Jay Alammar:

The Illustrated Transformer (https://jalammar.github.io/illustrated-transformer/) — Jay Alammar (blog)
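If you want the one formula at the heart of the Transformer in code, here is a minimal plain-Python sketch of scaled dot-product attention, softmax(Q Kᵀ / √d_k) V. It is a single head with no masking and no learned projections (the real model adds the Q/K/V projections and runs several heads in parallel), and the toy Q, K, V matrices are made up for illustration.

```python
import math

def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, one output row per query."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Scaling by sqrt(d_k) keeps the dot products from saturating the softmax
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * row[j] for w, row in zip(weights, V)) for j in range(len(V[0]))])
    return out

Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
out = scaled_dot_product_attention(Q, K, V)
print(out)
```

Compared with Bahdanau's additive scoring, a dot product needs no extra parameters and turns into plain matrix multiplications when batched, which is a large part of why it won.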

Properly speaking, of course, we should now add:

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (https://arxiv.org/abs/1810.04805) — Devlin et al., 2018

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context (https://arxiv.org/abs/1901.02860) — Dai et al., 2019. This work kicked off a whole line of research on adding recurrence to transformers.

Universal Transformers (https://arxiv.org/abs/1807.03819) — Dehghani et al., 2019. An important gem that often gets overlooked. See also my separate review of it (by the way, ACT, the adaptive computation time mechanism it relies on, was invented by Graves again).

Semi-supervised Sequence Learning (https://arxiv.org/abs/1511.01432) — Dai & Le, 2015. One of the foundations of LLM pretraining.

Papers on pre-GPT (my old review), GPT, GPT-2, and GPT-3 (my review) should also be here.

Of course, there’s much more to add; instead, check out my Transformer Zoo talks. Unfortunately, they are only in Russian (maybe not such a big problem with today’s AI tools), but the slides are in English.

Transformer Zoo (2020) [slides]

Transformer Zoo (a deeper dive) (2020) [slides]

🧠 CNNs / Vision

ImageNet Classification with Deep Convolutional Neural Networks (https://proceedings.neurips.cc/paper_files/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf) — Krizhevsky et al., 2012 (AlexNet)

Deep Residual Learning for Image Recognition (https://arxiv.org/abs/1512.03385) — He et al., 2015 (ResNet)

Identity Mappings in Deep Residual Networks (https://arxiv.org/abs/1603.05027) — He et al., 2016 (more ResNet)

Classic stuff, yes. For historical justice I’d add:

Highway Networks (https://arxiv.org/abs/1505.00387) — Schmidhuber and co., 2015

And back to the original list:

Multi-Scale Context Aggregation by Dilated Convolutions (https://arxiv.org/abs/1511.07122) — Yu & Koltun, 2015
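The trick in Yu & Koltun is to stack 3×3 convolutions whose dilation doubles from layer to layer, so the receptive field grows exponentially while the number of parameters grows only linearly. A small arithmetic sketch makes this concrete, assuming stride-1 layers and looking at one spatial axis: each layer with kernel size k and dilation d widens the field by (k − 1)·d.

```python
def receptive_field(kernel_size, dilations):
    """1-D receptive field of a stack of stride-1 convolutions.

    Each layer with kernel size k and dilation d widens the field by (k - 1) * d.
    """
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf

# 3x3 filters with dilation doubling per layer: 1, 2, 4, ...
for n_layers in range(1, 6):
    dilations = [2 ** i for i in range(n_layers)]
    print(n_layers, dilations, receptive_field(3, dilations))

# The same depth without dilation only grows linearly
print(receptive_field(3, [1] * 5))
```

With n layers, the dilated stack covers 2^(n+1) − 1 positions per axis, which matches the (2^(i+2) − 1)×(2^(i+2) − 1) receptive field stated in the paper (there i indexes from zero).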

I’d add to this the work on separable convolutions from François Chollet:

Xception: Deep Learning with Depthwise Separable Convolutions (https://arxiv.org/abs/1610.02357) — Chollet, 2016
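Why separable convolutions are so attractive is easy to see by counting weights. A regular k×k convolution connects every input channel to every output channel. A depthwise separable one uses a single k×k filter per input channel, followed by a 1×1 "pointwise" convolution that mixes channels. The sketch below counts weights only, ignoring biases and assuming a depth multiplier of 1. The layer sizes are an arbitrary example.

```python
def conv_params(k, c_in, c_out):
    # Regular k x k convolution: every output channel sees every input channel
    return k * k * c_in * c_out

def separable_params(k, c_in, c_out):
    # One k x k depthwise filter per input channel, then a 1x1 pointwise mix
    return k * k * c_in + c_in * c_out

k, c_in, c_out = 3, 256, 256
print(conv_params(k, c_in, c_out))       # 589824
print(separable_params(k, c_in, c_out))  # 67840
```

That is roughly 8.7× fewer weights for this layer, which Xception reinvests in a deeper and wider network.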

And also for vision, imho a must:

A Neural Algorithm of Artistic Style (https://arxiv.org/abs/1508.06576) by Leon Gatys et al., 2015. If this work hadn’t existed, there would be no Prisma and much else. It’s also beautiful on the inside: before it, it somehow hadn’t occurred to me that you could use another neural network to compute a loss.
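The "loss from another network" idea boils down to this. Take feature maps from a pretrained CNN (VGG in the paper). Summarize the style of a layer by the Gram matrix of channel correlations, G_ij = Σ_k F_ik F_jk. Then penalize the squared difference between the Gram matrices of the generated image and the style image, scaled by 1/(4N²M²) as in Gatys et al. The plain-Python sketch below works on tiny hand-made feature lists instead of real CNN activations.

```python
def gram_matrix(features):
    """features: N channels, each a flattened list of M spatial activations."""
    n = len(features)
    return [[sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
            for i in range(n)]

def style_layer_loss(gen_features, style_features):
    # E_l = 1 / (4 N^2 M^2) * sum_ij (G_ij - A_ij)^2 for one layer
    n, m = len(gen_features), len(gen_features[0])
    G = gram_matrix(gen_features)
    A = gram_matrix(style_features)
    sq = sum((G[i][j] - A[i][j]) ** 2 for i in range(n) for j in range(n))
    return sq / (4 * n * n * m * m)

# Toy "feature maps": 2 channels x 4 spatial positions
style = [[1.0, 0.0, 2.0, 1.0], [0.5, 1.5, 0.0, 1.0]]
# Shuffle spatial positions identically in every channel
shuffled = [[row[p] for p in (3, 1, 0, 2)] for row in style]
print(style_layer_loss(shuffled, style))  # 0.0: the Gram matrix ignores where features occur
```

The zero loss for shuffled positions is the point. The Gram matrix keeps which features co-occur but throws away where they are, which is roughly what "style" means here.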

CS231n: CNNs for Visual Recognition (http://cs231n.stanford.edu/) — Stanford course (2017)

Yes, a divine course. Its NLP counterpart, CS224N, is also worth it:

CS224N: Natural Language Processing with Deep Learning (https://web.stanford.edu/class/cs224n/)

And of course we need to add Vision Transformer, ViT:

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (https://arxiv.org/abs/2010.11929) — Alexey Dosovitskiy and others (2020).

🧮 Theory, Descriptions, Training

Keeping Neural Networks Simple by Minimizing the Description Length of the Weights (https://www.cs.toronto.edu/~hinton/absps/colt93.pdf) — Hinton & van Camp, 1993

A Tutorial Introduction to the Minimum Description Length Principle (https://arxiv.org/abs/math/0406077) — Grünwald, 2004

Chapter 14 from the book Kolmogorov Complexity and Algorithmic Randomness (https://www.lirmm.fr/~ashen/kolmbook-eng-scan.pdf) — Shen, Uspensky, and Vereshchagin, 2017. The original Russian version is here (https://old.mccme.ru/free-books/shen/kolmbook.pdf)

The First Law of Complexodynamics (https://scottaaronson.blog/?p=762) — S. Aaronson (blog)

Quantifying the Rise and Fall of Complexity in Closed Systems: The Coffee Automaton (https://arxiv.org/abs/1405.6903) — Aaronson et al., 2016

Machine Super Intelligence (https://www.vetta.org/documents/Machine_Super_Intelligence.pdf) — Shane Legg’s (DeepMind co-founder) PhD Thesis, 2008

There’s not much to add here. It’s a worthy list, and I haven’t read all of it myself yet.

🔬 Architecture / Scaling / Advanced Training

GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism (https://arxiv.org/abs/1811.06965) — Huang et al., 2019
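GPipe splits a model into K sequential stages on different devices and each mini-batch into M micro-batches, so the stages can work on different micro-batches at the same time. The price is the pipeline "bubble": devices sit idle while the pipeline fills and drains. The paper gives this overhead as O((K − 1)/(M + K − 1)). A quick sketch of that fraction shows why more micro-batches help; K = 8 is just an example value.

```python
def bubble_fraction(num_stages, num_microbatches):
    """Idle fraction of a GPipe-style pipeline: (K - 1) / (M + K - 1)."""
    k, m = num_stages, num_microbatches
    return (k - 1) / (m + k - 1)

for m in (1, 4, 16, 32):
    print(m, round(bubble_fraction(8, m), 3))
```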

Today I’d add the classic work on mixture of experts (MoE), for example GShard and what followed:

GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding (https://arxiv.org/abs/2006.16668) — Dmitry Lepikhin, Noam Shazeer and others, 2020

Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity (https://arxiv.org/abs/2101.03961) — Fedus, Zoph, Shazeer, 2021.
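The key simplification in Switch Transformers is top-1 routing. A router computes softmax probabilities over experts from the token representation, the token goes to a single expert, and that expert's output is scaled by its gate probability (GShard routes to the top two experts instead). Here is a minimal plain-Python sketch for a single token. The router weights and the "experts" (trivial elementwise functions) are hypothetical stand-ins, and the capacity factor and the auxiliary load-balancing loss from the paper are left out.

```python
import math

def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def switch_layer(x, router_weights, experts):
    """Top-1 routing: send token x to one expert and scale its output by the gate."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    probs = softmax(logits)
    best = max(range(len(experts)), key=lambda i: probs[i])
    return best, [probs[best] * y for y in experts[best](x)]

# Hypothetical toy experts; in a real model each one is a feed-forward block
experts = [lambda x: [2 * v for v in x],
           lambda x: [v + 1 for v in x],
           lambda x: [-v for v in x]]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
idx, y = switch_layer([3.0, 0.5], router_weights, experts)
print(idx, y)
```

Only one expert runs per token, so adding experts adds parameters without adding per-token compute. Multiplying by the gate probability is what lets the router itself receive gradients.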

And on distillation:

Distilling the Knowledge in a Neural Network (https://arxiv.org/abs/1503.02531) — Hinton, Oriol Vinyals and Jeff Dean, 2015.
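The recipe from the distillation paper fits in a few lines. Soften both teacher and student outputs with a temperature T, and train the student on cross-entropy against the teacher's soft targets, multiplied by T² so that gradient magnitudes stay comparable as T changes. Add ordinary cross-entropy on the true label. Here is a minimal plain-Python sketch; the logits are made up, and T = 4 and the 50/50 weighting (alpha) are arbitrary illustrative choices, not values from the paper.

```python
import math

def log_softmax(logits, T=1.0):
    # Temperature-scaled log-softmax, computed stably via log-sum-exp
    scaled = [z / T for z in logits]
    top = max(scaled)
    log_norm = top + math.log(sum(math.exp(z - top) for z in scaled))
    return [z - log_norm for z in scaled]

def softmax(logits, T=1.0):
    return [math.exp(lp) for lp in log_softmax(logits, T)]

def distillation_loss(student_logits, teacher_logits, label, T=4.0, alpha=0.5):
    # Soft part: cross-entropy between teacher and student distributions at
    # temperature T, scaled by T^2 to keep gradient magnitudes comparable
    p_teacher = softmax(teacher_logits, T)
    log_q_student = log_softmax(student_logits, T)
    soft = -sum(p * lq for p, lq in zip(p_teacher, log_q_student)) * T * T
    # Hard part: ordinary cross-entropy at T = 1 against the true label
    hard = -log_softmax(student_logits)[label]
    return alpha * soft + (1 - alpha) * hard

teacher = [5.0, 2.0, -1.0]   # made-up logits for a 3-class problem
student = [4.0, 2.5, -0.5]
print(softmax(teacher, T=1.0))  # sharp: most of the mass on class 0
print(softmax(teacher, T=4.0))  # softer: shows how the teacher ranks the wrong classes
print(distillation_loss(student, teacher, label=0))
```

The softened distribution carries the "dark knowledge" (which wrong answers the teacher considers more plausible) that a one-hot label throws away.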

Scaling Laws for Neural Language Models (https://arxiv.org/abs/2001.08361) — Kaplan et al., 2020

Yes, but now adding Chinchilla is mandatory:

Training Compute-Optimal Large Language Models (https://arxiv.org/abs/2203.15556) — Hoffmann et al., 2022.
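The practical takeaway from Chinchilla can be sketched as back-of-the-envelope arithmetic. The sketch assumes the standard approximation that training costs C ≈ 6·N·D FLOPs (N parameters, D tokens) and the widely quoted rule of thumb of about 20 tokens per parameter. That rule is read off the paper's own result (70B parameters on 1.4T tokens); it is not an exact law from the paper.

```python
import math

def compute_optimal(flops, tokens_per_param=20.0):
    """Split a FLOP budget using C ~= 6 N D and the D ~= 20 N rule of thumb."""
    n_params = math.sqrt(flops / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens

# Chinchilla itself: 70B parameters trained on 1.4T tokens
budget = 6 * 70e9 * 1.4e12
n, d = compute_optimal(budget)
print(f"{n / 1e9:.0f}B params, {d / 1e12:.1f}T tokens")  # 70B params, 1.4T tokens
```

Since N and D both scale as √C under this rule, doubling the compute budget should go into roughly 1.4× more parameters and 1.4× more tokens, rather than mostly into parameters.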

A Simple Neural Network Module for Relational Reasoning (https://arxiv.org/abs/1706.01427) — Santoro et al., 2017

This is the second paper on relational learning in the list; the first was in the RNN section.

Neural Message Passing for Quantum Chemistry (https://arxiv.org/abs/1704.01212) — Gilmer et al., 2017

Maybe something on modern GNNs is needed, but that’s a separate topic.

Variational Lossy Autoencoder (https://arxiv.org/abs/1611.02731) — X. Chen et al., 2017

The VAE classics are probably needed too:

Auto-Encoding Variational Bayes (https://arxiv.org/abs/1312.6114) — Kingma, Welling, 2013. And/or

An Introduction to Variational Autoencoders (https://arxiv.org/abs/1906.02691) — practically a book by the same authors, 2019
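Two ingredients from Kingma & Welling make VAEs trainable. First, the reparameterization trick writes a sample as z = μ + σ·ε with ε ~ N(0, 1), so gradients can flow through μ and σ. Second, the KL term between a diagonal Gaussian and the standard normal prior has a closed form. Here is a minimal plain-Python sketch of both, assuming the encoder outputs a mean and a log-variance per latent dimension. The μ and log σ² values are made up for illustration.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    # z = mu + sigma * eps with eps ~ N(0, 1): the randomness sits in eps,
    # so z is a differentiable function of mu and log_var
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    # Closed form: KL(N(mu, sigma^2) || N(0, I)) = 0.5 * sum(mu^2 + sigma^2 - log sigma^2 - 1)
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, math.log(0.25)]
z = reparameterize(mu, log_var, rng)
print(z, kl_to_standard_normal(mu, log_var))
```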

And GANs should be here too, of course:

Generative Adversarial Networks (https://arxiv.org/abs/1406.2661) — Goodfellow and co., 2014.

From other classics I’d add:

Adam: A Method for Stochastic Optimization (https://arxiv.org/abs/1412.6980) — Kingma, Ba, 2014
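The Adam update is short enough to write out in full. It keeps exponential moving averages of the gradient and of its square, corrects their bias toward zero (both start at 0), and steps by m̂ / (√v̂ + ε). Below is a minimal scalar sketch in plain Python. It uses the paper's default β₁ = 0.9, β₂ = 0.999, ε = 1e-8, but a larger learning rate (0.1 instead of the suggested 0.001) so that the toy problem, a hand-picked quadratic, converges quickly.

```python
import math

def adam(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a scalar function given its gradient, using the Adam update rule."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g      # first moment: moving mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # second moment: moving mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# f(x) = (x - 3)^2 has gradient 2 (x - 3) and its minimum at x = 3
x_min = adam(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```

Because the step is normalized by √v̂, its size is roughly bounded by the learning rate regardless of the gradient's scale, which is a big part of why Adam is so forgiving to tune.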

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift (https://arxiv.org/abs/1502.03167) — Ioffe, Szegedy, 2015. Even though it doesn’t work the way its authors thought it did 😉
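Whatever the reason it helps, the training-time transform itself is simple. Normalize each feature with the mean and (biased) variance of the current mini-batch, then apply a learned scale γ and shift β, as in Algorithm 1 of the paper. Here is a minimal plain-Python sketch. It covers the training pass only; at inference time BatchNorm uses running population statistics instead, which is not shown. The batch values are arbitrary.

```python
import math

def batch_norm_train(batch, gamma, beta, eps=1e-5):
    """Training-mode BatchNorm over a mini-batch of feature vectors (rows = examples)."""
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / n  # biased mini-batch variance
        for i in range(n):
            x_hat = (batch[i][j] - mean) / math.sqrt(var + eps)
            out[i][j] = gamma[j] * x_hat + beta[j]  # learned scale and shift
    return out

batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
out = batch_norm_train(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(out)
```

Note that the two columns differ in scale by 10× on input but come out identical, which is exactly the normalization at work.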

🗣 Speech / Multimodality

Deep Speech 2: End-to-End Speech Recognition in English and Mandarin (https://arxiv.org/abs/1512.02595) — Amodei et al., 2016. Funny how things turned out: Dario worked at Baidu, and now he’s dealing with chip export restrictions 😄

This was so long ago that I don’t really remember this work anymore. But I think WaveNet should be added:

WaveNet: A Generative Model for Raw Audio (https://arxiv.org/abs/1609.03499) — DeepMind, 2016.

Another important ingredient for speech is the Connectionist Temporal Classification (CTC) loss, invented by Alex Graves (again!), but it’s already covered in the RNN book mentioned above.
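The key idea in CTC is a many-to-one mapping from frame-level paths to label sequences: merge repeated symbols, then delete the special blank symbol. The loss sums the probabilities of all paths that collapse to the target. Here is a minimal plain-Python sketch of the collapse rule plus greedy "best path" decoding. The "-" blank symbol and the toy alphabet and probabilities are assumptions for illustration. Best-path decoding is not guaranteed to find the most probable labeling; beam or prefix search is used for that.

```python
BLANK = "-"

def ctc_collapse(path, blank=BLANK):
    """Map a frame-level path to a label sequence: merge repeats, then drop blanks."""
    out = []
    prev = None
    for symbol in path:
        if symbol != prev and symbol != blank:
            out.append(symbol)
        prev = symbol
    return "".join(out)

def greedy_decode(frame_probs, alphabet, blank=BLANK):
    # Best-path decoding: most likely symbol per frame, then collapse
    path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    return ctc_collapse(path, blank)

print(ctc_collapse("hh-e-ll-ll-oo"))  # hello
```

The blank between the two "ll" groups is what lets CTC produce genuine double letters; without it, they would be merged into one.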

The missing part

What about the remaining 13 items? Various reconstructed versions are floating around.

There’s not a single reinforcement learning paper in the list, which is strange; perhaps they were in the lost part. If so, a lot would be worth adding, but at a minimum:

Playing Atari with Deep Reinforcement Learning (https://arxiv.org/abs/1312.5602) — DRL classic from DM, 2013

Mastering the game of Go with deep neural networks and tree search (https://www.nature.com/articles/nature16961) — classic AlphaGo, 2016

Mastering the game of Go without human knowledge (https://www.nature.com/articles/nature24270) — AlphaGo Zero, which learned without human demonstrations, 2017

Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm (https://arxiv.org/abs/1712.01815) — AlphaZero, which learned multiple different games without human demonstrations, 2017

For RL, I think a separate list is needed. I haven’t worked with it that much myself, but there’s definitely much more important stuff there.

There’s no meta-learning either. Surely there should have been something like MAML (https://arxiv.org/abs/1703.03400) and more. But I haven’t worked much with that either.

I’d probably add a bit on evolutionary algorithms too. They deserve a separate review as well, but here is at least the classic that has been mentioned several times recently:

Evolution Strategies as a Scalable Alternative to Reinforcement Learning (https://arxiv.org/abs/1703.03864) — also with Sutskever’s participation, by the way. I wrote about this paper back then.
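The ES paper's core estimator is very compact. Perturb the parameters with Gaussian noise, evaluate the reward, and move along the noise directions weighted by the rewards they earned. No backpropagation is needed, only function evaluations. Here is a minimal plain-Python sketch using mirrored (antithetic) sampling, one of the variance-reduction tricks the paper mentions. The toy quadratic "reward" and all hyperparameters are assumptions for illustration. The paper's rank-based fitness shaping and its distributed shared-seed setup are left out.

```python
import random

def es_gradient(f, theta, sigma, n_pairs, rng):
    """Antithetic ES estimate of the gradient of E[f(theta + sigma * eps)]."""
    dim = len(theta)
    grad = [0.0] * dim
    for _ in range(n_pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        f_plus = f([t + sigma * e for t, e in zip(theta, eps)])
        f_minus = f([t - sigma * e for t, e in zip(theta, eps)])
        for i in range(dim):
            grad[i] += (f_plus - f_minus) * eps[i]
    return [g / (2 * n_pairs * sigma) for g in grad]

def es_maximize(f, theta, sigma=0.1, lr=0.05, n_pairs=25, steps=200, seed=0):
    rng = random.Random(seed)
    for _ in range(steps):
        g = es_gradient(f, theta, sigma, n_pairs, rng)
        theta = [t + lr * gi for t, gi in zip(theta, g)]  # gradient ascent on reward
    return theta

# Toy reward peaking at (1, -2); ES only ever calls it, never differentiates it
reward = lambda p: -((p[0] - 1) ** 2 + (p[1] + 2) ** 2)
theta = es_maximize(reward, [0.0, 0.0])
print(theta)
```

Mirroring each perturbation cancels the baseline reward level from the estimate, which is why it behaves much better than the naive single-sided version.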

But these are all just guesses. In any case, even by modern standards the list is still pretty good.

I started posting my reviews on Medium/Substack/etc. well after most of these papers came out, so you won’t find reviews of them here (though you may find some in my older Russian-language Telegram channel). Read the originals! Back then, papers were shorter than they are now :)

And I’m going to go play Doom.