Introduction

The deep learning revolution is commonly attributed to three key pillars: data, compute, and algorithms (see, for example, “The Three Breakthroughs That Have Finally Unleashed AI on the World” in WIRED, 2014). These pillars are the foundational components that have transformed the field and made large-scale deep learning possible.

Data: The availability of large datasets like ImageNet has provided the fuel for training deep neural networks, enabling them to learn and generalize across vast and varied inputs. Small datasets of the past were not enough to learn useful features from raw data.

Algorithms: While backpropagation and many neural network architectures have been known for decades, feed-forward neural networks (FFNs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and later transformers have demonstrated their full potential by leveraging these large datasets.

Hardware: The rise of powerful GPUs has enabled faster, more cost-effective training of deep learning models. GPUs dramatically reduced training time, cost, and energy requirements, all of which had been bottlenecks when relying on CPU clusters.

However, these three pillars are not the complete story. A fourth pillar has been equally crucial in driving the deep learning revolution: deep learning frameworks.

Deep learning frameworks are software libraries that provide a high-level interface for building, training, and deploying neural networks. Without these frameworks, even with abundant data, innovative algorithms, and powerful hardware, the revolution might never have taken off, and deep learning might have remained an academic pursuit, limited to those with deep expertise in both mathematics and programming. Frameworks have democratized access to deep learning, simplifying model development and accelerating research and production alike.

This post explores how deep learning frameworks evolved and why they are vital to the success of deep learning.

Pre-Framework Era

From the 1980s to the early 2010s, building and training neural networks was an arduous and manual process. Researchers relied on languages like Matlab (and its open alternative Octave), or general-purpose programming environments like C++ with matrix libraries or Python with NumPy.

However, some libraries already existed. The neural network simulator SN (later renamed Lush) has been developed continuously since 1987 (there is a 1988 paper on it, in French), originally by Yann LeCun and Leon Bottou. I was too young to be interested in neural networks back then 🙂 Leon Bottou says that many of the ideas later found in Torch and its successors, such as tensors, compilers with substantial code analysis, and canned neural network modules, were first used in SN/Lush. By the way, a short history of SN→SN3→LUSH→Torch3,4,5,7→PyTorch can be found around this tweet. So the eras I describe here are not strictly chronological. Another surprise for me was that the DjVu project was also developed by Leon Bottou, Yann LeCun, Patrick Haffner, Paul Howard, Yoshua Bengio, and others.

The early deep learning and machine learning courses (remember Andrew Ng’s ml-class of 2011 and Hinton’s deep learning course of 2012?) used Matlab and Octave. This made pedagogical sense, since implementing a neural network from scratch provides valuable insight into the underlying mechanics, but these tools were far from ideal for deep learning research and development.

They lacked the higher-level abstractions needed to build and train models efficiently. In those early days, even something as fundamental as a simple MLP with backpropagation required manually defining all the weight matrices, writing out the sequence of matrix operations that computes the activations, deriving the derivative formulas by hand (manual differentiation), and coding the gradients for each neural network layer. This was not only time-consuming but also highly error-prone. For example, implementing a simple feedforward neural network (especially one with a custom activation function) could take days or even weeks, with much of that time spent debugging gradient calculations. Numerical differentiation was typically implemented only as a check: it is far too slow to replace backprop, so it was used during development to verify the results of manual differentiation. This further slowed down research.
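To give a feeling for that workflow, here is a minimal sketch in plain Python. The tiny network (one tanh hidden unit, one linear output), the function names, and the numbers are all my own illustrative assumptions, not code from any course or library. The gradients are derived by hand with the chain rule and then checked against central finite differences.

```python
import math

def forward(w1, w2, x):
    """Tiny network: one tanh hidden unit followed by one linear output unit."""
    h = math.tanh(w1 * x)
    return w2 * h

def loss(w1, w2, x, y):
    """Squared error for a single training example."""
    return 0.5 * (forward(w1, w2, x) - y) ** 2

def manual_grads(w1, w2, x, y):
    """Gradients derived by hand with the chain rule."""
    h = math.tanh(w1 * x)
    err = w2 * h - y                      # dL/d(output)
    dw2 = err * h                         # dL/dw2
    dw1 = err * w2 * (1.0 - h * h) * x    # dL/dw1, using tanh'(z) = 1 - tanh(z)^2
    return dw1, dw2

def numerical_grads(w1, w2, x, y, eps=1e-6):
    """Central finite differences: slow, used only to verify manual_grads."""
    dw1 = (loss(w1 + eps, w2, x, y) - loss(w1 - eps, w2, x, y)) / (2 * eps)
    dw2 = (loss(w1, w2 + eps, x, y) - loss(w1, w2 - eps, x, y)) / (2 * eps)
    return dw1, dw2

w1, w2, x, y = 0.5, -1.5, 2.0, 1.0
manual = manual_grads(w1, w2, x, y)
numeric = numerical_grads(w1, w2, x, y)
max_diff = max(abs(m - n) for m, n in zip(manual, numeric))
print("manual:", manual, "numeric:", numeric, "max diff:", max_diff)
```

Note that the numerical check needs two loss evaluations per parameter, which is exactly why it cannot replace backprop for a network with millions of weights.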

Proficiency in mathematical analysis was essential, and taking derivatives was an everyday job. It was challenging to iterate on new ideas or experiment with architectures. This limitation significantly hampered the speed of innovation and delayed breakthroughs in the field.

The First Deep Learning Frameworks: Tensors, Layers, Autodiff and GPUs

The introduction of dedicated deep learning frameworks marked a pivotal turning point. Among the earliest were Torch7 and Theano.

For some, Matlab was the role model. For example, the Lua-based Torch7 was described as “A Matlab-like Environment for Machine Learning.” The famous Python-based Theano framework was more of a versatile lower-level mathematical library, with support for tensor types, symbolically defined algorithms, and autodiff, or automatic differentiation (not to be confused with the numerical differentiation mentioned earlier). To me it looked, in some sense, like TensorFlow version 0.

Autodiff was a game-changer on its own. Many frameworks started to use autodiff to calculate derivatives, letting users focus on writing only the forward computation logic. That helped a lot. Development sped up significantly and iterations became shorter: you could now test a modification in minutes instead of hours or days, without taking a single derivative by hand. I believe autodiff was the biggest democratizer for the deep learning field itself. Other improvements made things faster (both development and training), but autodiff removed the requirement to have a PhD or to be super-proficient in analysis and taking derivatives. It is interesting to note that autodiff can do much more, including taking derivatives of custom code with control flow logic, but this capability became available only in later framework generations.
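To show what "writing the forward computation only" means, here is a toy scalar reverse-mode autodiff engine in plain Python. The `Value` class and its methods are my own illustration and not the API of Theano or any other framework. Real frameworks work on tensors and are far more elaborate, but the principle is the same: every operation records its inputs and local derivatives, and a backward sweep applies the chain rule.

```python
import math

class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # Values this one was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    __radd__ = __add__
    __rmul__ = __mul__

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (1.0 - t * t,))

    def backward(self):
        """Reverse-mode sweep: visit nodes in reverse topological order."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


# Only the forward computation is written; no derivative formulas appear here.
w1, w2 = Value(0.5), Value(-1.5)
x, y = 2.0, 1.0
err = w2 * (w1 * x).tanh() + (-y)
loss = 0.5 * (err * err)
loss.backward()
print("dL/dw1 =", w1.grad, "dL/dw2 =", w2.grad)
```

Swapping `tanh` for a custom activation would only require one more method with its local derivative; the rest of the gradient code stays untouched.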

The first experiments with GPUs for deep learning, circa 2010-2011, showed speedups of 10-60x, which was a big deal. Frameworks then started adding GPU support; Theano, for example, added it in version 0.3 in 2011. The famous AlexNet (not the first network to use GPUs) followed in 2012 and used its own implementation built on the cuda-convnet library. This changed the field a lot.

In the same way, Theano boosted the development of higher-level libraries with all the required neural network-related primitives like layers, activations, losses, and so on. Torch7 had all the batteries included with its own nn package for this (PyTorch went the same way), but Theano produced a whole set of solutions: PyLearn2 (2011), Blocks (2014), Lasagne (2014), to name a few. Some of them were even second-level derivatives; say, nolearn (2014) was built on top of Lasagne.

This gradually led to the next intermediary generation of frameworks (we may call it Generation 1.5), which were very diverse and different from each other. To name a few, I’d highlight Caffe (2013), Deeplearning4j (2014), MXNet (2015), CNTK (2016), and of course TensorFlow (2015) and Keras (2015).

These frameworks covered different languages. Deeplearning4j was the best-known framework for Java. Caffe was more of a domain-specific language (DSL) for defining a neural network from a set of predefined blocks. I used it myself in a driver-assistance project to recognize road signs in real time on old Android smartphones, which had much less power than contemporary phones. You could go from an idea to a trained neural network without writing a single line of code in a programming language, using only configuration files and shell scripts to orchestrate training. Only when you had to embed the trained network into production code did you need the C++ or Python bindings. However, Caffe still required manual differentiation for custom layers, which had to implement both forward and backward functions. MXNet used a C++ core with bindings to different languages and could run in distributed heterogeneous environments.

The culmination of this era was the emergence of TensorFlow 1 and, subsequently, Keras 1.0.

TensorFlow used the concept of static computational graphs (as Theano did), which allowed for optimized execution on various hardware platforms. It also provided higher-level APIs and an XLA compiler for TensorFlow graphs and was production-ready from the start. TensorFlow also made it possible to train models on distributed systems, significantly reducing training time for large-scale models. For example, training a state-of-the-art image classification model on ImageNet could now be done in days or even hours rather than weeks or months.

Keras, on the other hand, focused on ease of use, providing a high-level API that sat on top of other frameworks (originally, it supported three backends: Theano, TensorFlow, and CNTK). This allowed researchers to prototype models quickly, sometimes reducing development time from days to hours.

To summarize, the frameworks of this era had the following features:

Tensor data structures: Handling the multidimensional arrays that neural networks use, thus simplifying the process of defining and manipulating model layers.

Layer primitives: Providing pre-built components for neural networks, allowing researchers to focus on the design of their architectures rather than implementation details.

Simpler work with derivatives: Automatically calculating gradients, eliminating the need for manual derivative calculation. For instance, with automatic differentiation (or autodiff), defining a neural network layer and computing its gradients could be done in just a few lines of code, without the manual derivative calculations of the pre-framework era.

Hardware acceleration: GPU support has been essential almost from the beginning.

Static computation graphs: Models are represented as static computation graphs that are compiled before use; compilation adds efficiency by allowing optimized execution on various hardware platforms.

Scalability and production readiness: Some of the frameworks were designed with scalability and production use cases in mind.

These frameworks automated many of the tedious and error-prone tasks and significantly simplified the development of neural networks. They accelerated the process of experimenting with new models and architectures, reducing the time to prototype and debug models from weeks to days or even hours. For example, implementing a convolutional neural network (CNN) for image classification, which could have taken months in the pre-framework era, could now be accomplished in a matter of days.

They made deep learning more accessible to a broader audience by introducing user-friendly APIs, which allowed engineers and researchers to build models faster and more efficiently.

These frameworks also provided (at least some) support for distributed training and better GPU utilization, making large-scale deep learning more feasible.

The 2nd Generation: Dynamic Graphs and Eager Execution

A significant leap forward came with the advent of frameworks that supported dynamic computational graphs and eager execution, allowing more flexibility and real-time debugging during model development.

In deep learning, static computational graphs (like those in TensorFlow 1.x) are fixed and defined before model execution. This means that the structure of the graph, including operations and layers, is "frozen" during training, making it challenging to modify or adjust on the fly. Static graphs are optimized for performance, but they lack flexibility. Key drawbacks of static computational graphs include:

Limited Flexibility: The inability to change the graph structure dynamically can be a barrier when handling varying input sizes or recursive architectures, as each modification requires re-building the graph.

Debugging Challenges: Since the graph is predefined, debugging requires tracing through compiled graphs, which can be cumbersome and less intuitive.

Complexity in Code: Tasks that involve conditionals, loops, or dynamically changing layers are harder to implement because they don’t align well with a static graph's constraints.
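The drawbacks listed above are easier to see in a toy define-and-run system. The sketch below is plain Python with hypothetical names (`Node`, `placeholder`, `run`); it only loosely mimics the two-phase style and is not TensorFlow 1.x code. The graph is described first, with symbolic nodes that hold no numbers, and only later executed with concrete data.

```python
class Node:
    """A symbolic node in a static graph; it holds no value until the graph runs."""

    def __init__(self, op, inputs=(), name=None):
        self.op = op
        self.inputs = inputs
        self.name = name

    def __add__(self, other):
        return Node("add", (self, other))

    def __mul__(self, other):
        return Node("mul", (self, other))


def placeholder(name):
    """Declare an input whose value is supplied only at run time."""
    return Node("placeholder", name=name)


def run(node, feed):
    """Execute the fixed graph for one set of input values."""
    if node.op == "placeholder":
        return feed[node.name]
    a, b = (run(i, feed) for i in node.inputs)
    return a + b if node.op == "add" else a * b


# Phase 1: define the graph. No numbers are involved yet, so a Python
# `if` on the value of x cannot be written here: x is just a symbol.
x = placeholder("x")
w = placeholder("w")
b = placeholder("b")
y = x * w + b

# Phase 2: run the same frozen graph with different data.
out1 = run(y, {"x": 2.0, "w": 3.0, "b": 1.0})
out2 = run(y, {"x": -1.0, "w": 3.0, "b": 1.0})
print(out1, out2)
```

Any data-dependent branch or loop would have to be expressed as a special graph node rather than ordinary Python, and a debugger stepping through phase 1 sees only symbols, never values.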

Dynamic computational graphs, popularized by frameworks like PyTorch and TensorFlow 2.x, create the graph as code executes, making it much more adaptable and easier to modify. Benefits of dynamic computational graphs include:

Increased Flexibility: They allow for variable input sizes, adaptive model structures, and easier handling of recursive neural networks and conditional layers.

Ease of Debugging: Since the graph is generated at runtime, developers can use standard debugging tools and inspect layers, tensors, and operations as they execute, which makes finding issues simpler and more intuitive.

Clearer Code Structure: Writing models with dynamic graphs allows for using control flow operations (like loops and conditionals) directly, making code more readable and reducing boilerplate code.

This flexibility makes dynamic graphs particularly useful in research settings, where rapid iteration and model experimentation are essential.

Dynamic graphs allowed researchers to define models on the fly, making it easier to experiment with new architectures and modify existing ones during runtime. This was in contrast to the static graphs of earlier frameworks, where the entire computation had to be defined before execution. Autodiff was already here, and it had no problems with calculating derivatives of custom code with control flow logic.

The adoption of dynamic computational graphs in deep learning frameworks marked a significant shift towards greater flexibility and expressiveness, primarily driven by the need for adaptable model architectures. Here’s a look at the key frameworks and milestones that shaped this transition:

TensorFlow Fold (2017): Early Exploration of Dynamic Graphs

Before mainstream frameworks embraced dynamic graphs, TensorFlow Fold emerged as a TensorFlow add-on designed to handle variable-length and recursive data structures, such as trees or sequences, that traditional static graphs struggled with.

Fold allowed for dynamic batching, which could handle varying shapes and sizes of input data by dynamically constructing computation graphs. However, the limitations of TensorFlow's static architecture remained, as Fold was still built on top of TensorFlow’s static graph model. TensorFlow Fold solved the problem partially, allowing more efficiency for variable inputs, but it was still far from dynamic code execution with control flow operations.

Chainer (2015): Introducing “Define-by-Run”

Chainer, a deep learning framework from Japan's Preferred Networks, introduced the concept of “define-by-run” computation, where the computation graph is constructed on the fly as operations are executed; that is, the network is defined dynamically via the actual forward computation. Most deep learning frameworks at the time were based on the “define-and-run” scheme instead: first a network is defined and fixed, and then the user periodically feeds it with mini-batches of training data.

In Chainer, users could write their model code using Python control flows like loops and conditionals, with the framework constructing the computation graph dynamically as the code ran. Chainer stores the history of computation instead of programming logic. This approach offered immediate feedback and debugging advantages, paving the way for frameworks prioritizing dynamic graph computation. The Define-by-Run scheme was the core concept of Chainer.
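The phrase "stores the history of computation instead of programming logic" can be illustrated with a few lines of plain Python. The `Var` class below is a hypothetical stand-in of my own, not Chainer's API: it simply logs each operation as it runs, so the recorded "graph" is whatever path the Python control flow actually took for a given input.

```python
class Var:
    """A value that records, at run time, the operations applied to it."""

    def __init__(self, data, history=()):
        self.data = data
        self.history = history  # tuple of op names executed so far

    def __mul__(self, other):
        return Var(self.data * other, self.history + ("mul",))

    def __add__(self, other):
        return Var(self.data + other, self.history + ("add",))


def model(x):
    """Ordinary Python control flow decides which operations run (assumes x != 0)."""
    h = Var(x)
    while abs(h.data) < 10.0:   # data-dependent loop
        h = h * 2.0
    if h.data < 0:              # data-dependent branch
        h = h + 100.0
    return h


a = model(3.0)    # 3 -> 6 -> 12
b = model(-1.0)   # -1 -> -2 -> -4 -> -8 -> -16, then + 100
print(a.data, a.history)
print(b.data, b.history)
```

The two inputs produce two different histories from the same model code. A real define-by-run framework keeps such a history together with local derivatives, which is what lets it backpropagate through loops and branches.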

DyNet (2017): Further Advancing Dynamic Graphs for NLP

Developed by researchers at Carnegie Mellon University, DyNet or The Dynamic Neural Network Toolkit (formerly known as cnn) was designed to efficiently handle dynamic graphs for complex NLP tasks like syntactic parsing, machine translation, and morphological inflection. DyNet’s strength was its ability to rebuild the computational graph on each forward pass, making it highly suited for natural language tasks with varying sentence lengths and structures.

In DyNet’s dynamic declaration strategy, computation graph construction is mostly transparent, being implicitly constructed by executing procedural code that computes the network outputs, and the user is free to use different network structures for each input. The dynamic declaration thus facilitates the implementation of more complicated network architectures, and DyNet is specifically designed to allow users to implement their models in a way that is idiomatic in their preferred programming language (C++ or Python). One challenge with dynamic declaration is that because the symbolic computation graph is defined anew for every training example, its construction must have low overhead. To achieve this, DyNet has an optimized C++ backend and lightweight graph representation.

DyNet’s influence on the deep learning community reinforced the benefits of dynamic computation for handling irregular, variable-sized data, especially in fields where graph flexibility was crucial.

PyTorch (2017): Mainstream Adoption of Dynamic Graphs with Eager Execution

PyTorch, developed by Facebook’s AI Research lab, popularized dynamic computational graphs by combining Chainer’s define-by-run philosophy with a robust, accessible framework.

The groundwork for PyTorch originally started in early 2016, online, among a band of Torch7's contributors. Essentially, the team started writing a new Python-based Torch (instead of the previous Lua-based Torch). Chainer heavily influenced PyTorch’s design.

PyTorch introduced eager execution as its default mode. Eager execution is a mode where operations are evaluated immediately as they are called, rather than creating a computation graph that runs later. This approach aligns with Python’s natural, imperative style, where each line of code is executed sequentially.

Eager execution was a game-changer because it enabled a more Pythonic and interactive approach to model development. Developers could see each step of the computation immediately, simplifying debugging and enabling quick experimentation with flexible architectures. PyTorch’s ease of use and dynamic nature quickly gained popularity, especially in research, and its widespread adoption led to a strong developer community and extensive libraries.

TensorFlow 2 (2019): Adopting Eager Execution and Dynamic Graphs

TensorFlow 1 was pretty hard to use, and many researchers didn’t like it. PyTorch was a breath of fresh air for them. Recognizing PyTorch’s popularity, Google re-architected TensorFlow 2 to incorporate dynamic computation, bringing eager execution as the default mode. In eager execution, the TensorFlow 2 graph is defined and executed step-by-step, similar to PyTorch, providing immediate feedback and allowing for easier debugging and experimentation.

TensorFlow 2 still retained its static graph capabilities through tf.function, which could convert Python functions into TensorFlow’s original static graph structure when needed. This allowed for optimizations in performance-sensitive applications while maintaining the flexibility of dynamic computation.
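The general idea of turning an eagerly written Python function into a reusable graph can be sketched with a toy tracer. Everything below (`Tracer`, `compile_once`, the example function) is a hypothetical illustration in plain Python, handling only a chain of scalar multiply and add operations; it is not how tf.function is implemented and it shares none of its API.

```python
class Tracer:
    """Stands in for an input while a function is traced into a list of ops."""

    def __init__(self, ops):
        self.ops = ops

    def __mul__(self, k):
        self.ops.append(("mul", k))
        return self

    def __add__(self, k):
        self.ops.append(("add", k))
        return self


def compile_once(fn):
    """Trace fn on the first call, then replay the recorded ops on later calls."""
    ops = []
    traced = False

    def wrapper(x):
        nonlocal traced
        if not traced:
            fn(Tracer(ops))      # the Python body runs once, only to record ops
            traced = True
        for op, k in ops:        # every call executes the recorded op list
            x = x * k if op == "mul" else x + k
        return x

    wrapper.ops = ops
    return wrapper


python_runs = []                 # counts how often the Python body executes

@compile_once
def scale_and_shift(x):
    python_runs.append(1)
    for _ in range(3):           # the loop is unrolled into three recorded ops
        x = x * 2.0
    return x + 1.0


r1 = scale_and_shift(1.0)
r2 = scale_and_shift(5.0)
print(r1, r2, len(python_runs), scale_and_shift.ops)
```

The Python body runs only once; afterwards the recorded operations are replayed, which is where a real system gets room to optimize. The flip side is also visible: the `for` loop was baked into the trace, so Python-level control flow is frozen at tracing time.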

With TensorFlow 2 came Keras 2.0, the default high-level API for TensorFlow. Back then, Keras supported two backends, TensorFlow and Theano, but Theano was officially discontinued in September 2017.

Dynamic computational graphs and eager execution have made deep learning frameworks more accessible, flexible, and interactive. This is especially beneficial for research and rapid prototyping. It has also improved the speed of iterations in deep learning even further compared to the pre-framework era.

Static graphs, however, still offer performance benefits in production settings, where computation graphs can be optimized and reused. This has led many frameworks to provide a hybrid approach, as seen in TensorFlow 2’s combination of eager execution and tf.function. This evolution, influenced by frameworks like Chainer, DyNet, and PyTorch, has ultimately empowered developers with a range of options, balancing flexibility with performance.

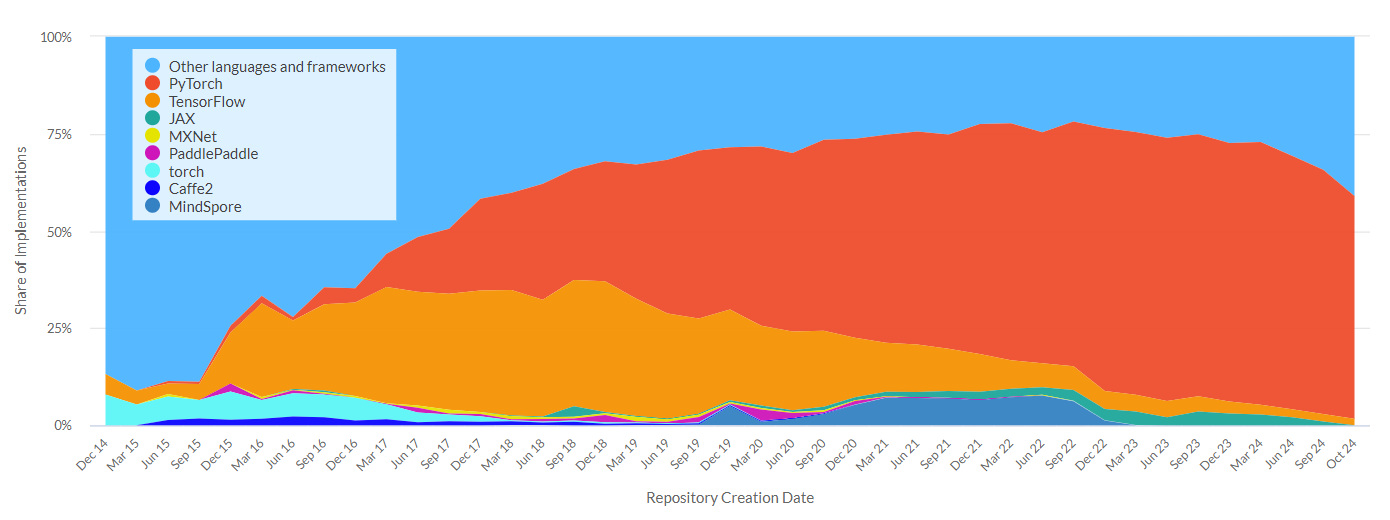

By this point, PyTorch, with its Pythonic API and eager execution, had become a favorite among researchers for its intuitiveness and ease of use, setting a new standard in deep learning development.

The Latest Generation: Flexibility and Performance Optimization

Today's cutting-edge frameworks are focused on pushing the boundaries of flexibility, scalability, and performance optimization. These frameworks have opened up new possibilities for research and deployment:

PyTorch 2 (2023): With its new compiler and enhanced performance features, PyTorch 2 is optimized for large-scale production environments and high-performance applications. It introduces features like TorchDynamo for automatic optimization of PyTorch code, potentially yielding significant speed improvements without requiring changes to user code. PyTorch 2 is positioning itself as both a research-friendly framework and a performant option for production, striking a balance between flexibility and efficiency.

JAX (2018): JAX, developed by Google, is based on functional programming principles. It takes a unique approach, offering composable function transformations, just-in-time (JIT) compilation, and automatic differentiation for both Python code and NumPy operations. This makes it ideal for scientific computing, high-performance research, and deep learning. JAX's ability to easily parallelize computations across multiple GPUs or TPUs has made it particularly popular for large-scale machine learning research.

Keras 3 (2023): The latest release builds upon its legacy by offering tighter integration with TensorFlow 2 and other modern frameworks, while further improving user-friendliness. Keras 3 introduces a multi-backend system (again!), allowing users to switch between TensorFlow, JAX, and PyTorch backends without changing their model code. This flexibility makes it a top choice for rapid prototyping and deployment, keeping pace with the evolving demands of modern AI projects.

MindSpore (2020): To avoid staying locked completely inside the Western bubble, it is worth mentioning MindSpore, developed by Huawei and designed for both cloud and edge deployments. It has native support for Huawei's Ascend AI processor and software-hardware co-optimization.

These frameworks continue to push the boundaries of what's possible in deep learning. For instance, OpenAI standardized on PyTorch as its main framework and likely uses it (or maybe a customized version) to train its LLMs. At the same time, JAX's parallelization capabilities have been crucial in developing large-scale LLMs inside Google, namely the Gemini and Gemma families.

JAX continues the beautiful tradition of calculating derivatives of your functions, adding the ability to calculate gradients even for custom Python code with control logic. It was not the first framework with such a capability. Its predecessor, the Autograd library, emerged long ago with the idea that there should be a third way of calculating gradients, different from 1) manual derivation and 2) autodiff that forces you to live with the syntactic and semantic constraints of a system like Theano or TensorFlow. With Autograd, and later JAX, you still use autodiff, but now you just write down the loss function using a standard numerical library like NumPy, and Autograd gives you its gradient.

JAX is a framework that embraces the functional programming paradigm (a rarity in itself in the deep learning world). JAX gives you much more flexibility because it does not just calculate gradients for you: it transforms functions into other functions that calculate the gradients of the original function. You need not stop there, either; applying the transformation again yields a function for higher-order derivatives. This is particularly important in scientific areas other than deep learning, which explains why JAX is also popular outside of deep learning.
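The "function in, function out" idea can be shown without JAX itself. Below is a toy `grad` transformation in plain Python built on dual numbers (forward-mode autodiff). The `Dual` class and this `grad` are my own hypothetical sketch, limited to addition and multiplication, and real systems handle nested derivatives far more carefully than this naive nesting does. What it does show is the shape of the API: `grad(f)` is itself a function, so it can be transformed again.

```python
class Dual:
    """A number val + eps * d with d * d = 0; eps carries the derivative."""

    def __init__(self, val, eps):
        self.val = val
        self.eps = eps

    @staticmethod
    def lift(x):
        """Treat a plain number as a constant (zero derivative)."""
        return x if isinstance(x, Dual) else Dual(x, 0.0)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.eps + other.eps)

    def __mul__(self, other):
        other = Dual.lift(other)
        return Dual(self.val * other.val,
                    self.val * other.eps + self.eps * other.val)  # product rule

    __radd__ = __add__
    __rmul__ = __mul__


def grad(f):
    """Transform f into a new function that returns df/dx."""
    def df(x):
        out = Dual.lift(f(Dual(x, 1.0)))  # seed the input with derivative 1
        return out.eps
    return df


def f(x):
    return x * x * x + 2.0 * x   # f(x) = x^3 + 2x


df = grad(f)          # f'(x)  = 3x^2 + 2
d2f = grad(grad(f))   # f''(x) = 6x
print(df(2.0), d2f(2.0))
```

Because `grad` returns an ordinary function, it composes with itself and, in a full system, with other transformations such as compilation or batching.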

Functional programming was resurrected in software engineering some time ago, and more and more people love and use it (see “Why Functional Programming Should Be the Future of Software Development” in IEEE Spectrum). The time has come for the deep learning field as well.

There is also influence across frameworks. For example, several use cases are tricky to handle in PyTorch today, such as computing per-sample gradients, running ensembles of models on a single machine, and efficiently computing Jacobians and Hessians. So, for quite some time, PyTorch has been experimenting with JAX-like composable function transformations to overcome these limitations. Initially this was done in the functorch library; it then moved to torch.func, which is still in beta.

Since deep learning grew to really large models trained on huge clusters with thousands of accelerators, many modern frameworks represent the convergence of flexibility, ease of use, and performance optimization.

This latest wave of frameworks reflects the diversity of AI applications today, from large-scale scientific research to low-power edge devices, signaling a future where deep learning frameworks will be adaptable across a broad range of hardware and computational environments. But this is definitely not the end of the story.

Now, let’s summarize the main points of my original message on the importance of frameworks.

The Benefits of Modern Deep Learning Frameworks

The evolution of deep learning frameworks has had profound effects on the field, offering several key benefits:

Efficient Hardware Utilization: These frameworks are optimized for the latest hardware, ensuring that models can fully take advantage of GPUs, TPUs, and other accelerators. This leads to faster training times and better performance. For instance, training a large language model (not a really large one like GPT-4, but a smaller one like BERT) that might have taken weeks or months on CPUs can now be accomplished in days or even hours on modern GPUs or TPUs with optimized frameworks.

Scaling and distributed computations: Many modern models are so large that they can only be trained in a distributed fashion. Modern frameworks make this process much easier; the only big problem here is the enormous costs of hardware.

Faster Time to Market: Frameworks provide pre-built components (and ecosystems, especially in the case of JAX) and simplify the process of building models, reducing the time required for experimentation and production deployment. For example, what once took months to develop can now often be prototyped in days or even hours.

Error-Proof Code: Features like autograd eliminate the need for manual differentiation, reducing the likelihood of errors in gradient calculations. This has dramatically improved the reliability and reproducibility of deep learning research.

Accessibility: Modern frameworks have made deep learning more accessible to non-experts. Researchers and developers from various fields can now implement complex models without needing a deep understanding of the underlying mathematics or hardware optimizations.

The first two points relate to the original pillars of the deep learning revolution, but the last three are missed by them entirely. They all concern developer experience, which is an important and undervalued pillar in its own right.

It is also worth noting that deep learning frameworks have not evolved in isolation. There has been a co-evolution of frameworks, hardware, and algorithms:

Framework-driven Hardware Development: Frameworks have driven the demand for more powerful and specialized hardware. For example, the need for efficient tensor operations in frameworks like TensorFlow led to the development of Google's Tensor Processing Units (TPUs), which are custom-built for deep learning workloads.

Hardware-driven Framework Optimizations: As new hardware becomes available, frameworks are updated to fully take advantage of these capabilities. The introduction of NVIDIA's Tensor Cores, for instance, led to framework optimizations that could leverage these specialized computing units for faster matrix multiplications. The same is relevant for Google’s TPUs.

Algorithm Innovation: Frameworks have enabled faster iteration on new algorithms, accelerating the pace of innovation in neural network architectures. The ease of implementing and testing new ideas has led to breakthroughs like the transformer architecture, which was quickly adopted and improved upon due to its implementation in popular frameworks.

Scaling to Larger Models: The interplay between more efficient frameworks and more powerful hardware has enabled the training of increasingly large and complex models. This has led to the era of "foundation models" like GPT-3, which would not have been feasible without these advancements.

Conclusion

Deep learning frameworks are indeed the unsung heroes of the AI revolution. While data, compute, and algorithms provide the necessary foundation, frameworks have been essential in democratizing access to deep learning and accelerating the pace of innovation. They have transformed deep learning from a niche field accessible only to a few experts into a technology that can be leveraged by researchers and developers across various domains.

As frameworks continue to evolve, they will remain at the core of deep learning's future, driving the next wave of breakthroughs in artificial intelligence. The ongoing co-evolution of frameworks with hardware and algorithms promises to unlock even more potential, pushing the boundaries of what's possible in AI and machine learning.

The journey of deep learning frameworks from basic libraries to sophisticated ecosystems mirrors the explosive growth of AI itself. As we look to the future, it's clear that the continued development of these frameworks will play a crucial role in shaping the next generation of AI technologies, making them more powerful, accessible, and impactful than ever before.